- 1Graduate School of Systems Design, Tokyo Metropolitan University, Hachioji, Japan

- 2Machine Intelligence Laboratory, School of Engineering and Applied Sciences, National University of Mongolia, Ulaanbaatar, Mongolia

- 3The First Central Hospital of Mongolia, Ulaanbaatar, Mongolia

- 4Graduate School of Informatics and Engineering, The University of Electro-Communications, Chofu, Japan

- 5Department of General Medicine, National Defense Medical College, Tokorozawa, Japan

Background: To conduct rapid, non-contact preliminary COVID-19 screening prior to a polymerase chain reaction (PCR) test in clinical settings, including while the patient's body is moving, we developed a mobile vital-signs-based infection screening composite-type camera (VISC-Camera) with a truncus motion removal algorithm (TMRA) to screen for possibly infected patients.

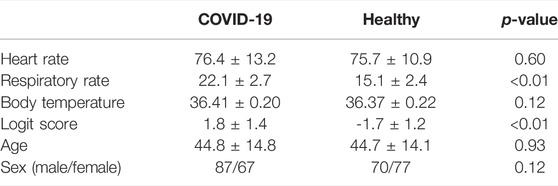

Methods: The VISC-Camera incorporates a stereo depth camera for respiratory rate (RR) determination, a red–green–blue (RGB) camera for heart rate (HR) estimation, and a thermal camera for body temperature (BT) measurement. In addition to a body motion removal algorithm based on region of interest (ROI) tracking for RR, HR, and BT determination, we adopted TMRA for RR estimation. TMRA reduces the RR count error induced by non-respiratory front-back motion of the truncus, which is measured as depth-camera-determined neck movement. The VISC-Camera is designed for mobile use. It is compact (22 cm × 14 cm × 4 cm) and light (800 g), and it can operate continuously for over 100 patients on a single battery charge. The VISC-Camera discriminates infected patients from healthy people using a logistic regression algorithm with RR, HR, and BT as explanatory variables. Results are available within 10 s, including imaging and processing time. Clinical testing was conducted on 154 PCR-positive COVID-19 inpatients (aged 18–81 years; M/F = 87/67) within the initial 48 h of hospitalization at the First Central Hospital of Mongolia and on 147 healthy volunteers (aged 18–85 years; M/F = 70/77). All patients were receiving antiviral treatment and had body temperatures <37.5°C. RR measured by visual counting, HR determined by a pulsimeter, and BT determined by a thermometer were used as references.

Result: Ten-fold cross-validation revealed 91% sensitivity and 90% specificity, with an area under the receiver operating characteristic curve of 0.97. The VISC-Camera-determined HR, RR, and BT correlated significantly with the reference measurements (RR: r = 0.93, p < 0.001; HR: r = 0.97, p < 0.001; BT: r = 0.72, p < 0.001).

Conclusion: In clinical settings with body motion, the VISC-Camera with TMRA appears promising for the preliminary screening of potential COVID-19 infection in afebrile patients, who might otherwise be misclassified as asymptomatic.

Introduction

As of March 2022, the number of people infected with COVID-19 worldwide had reached 434 million, resulting in approximately 5.9 million deaths (WHO, 2022). Nucleic acid amplification tests, such as the reverse transcriptase-polymerase chain reaction (RT-PCR), are recommended as the gold standard for identifying active infection. However, these tests require specialized resources and time-intensive tasks (WHO, 2020a). An RT-PCR test takes approximately 2 h, including specimen collection and handling time.

As a preliminary daily screening prior to the RT-PCR test, infrared thermometer-based body temperature (BT) screening has been widely used for rapid infection screening at the entrances of mass gathering places such as schools, offices, and hospitals (WHO, 2020b). However, recent cohort studies have reported a high rate (70–75%) of afebrile cases among patients with COVID-19. These cases go undetected in BT screening and can spread the virus (Goyal et al., 2020; Richardson et al., 2020). Rechtman et al. reported COVID-19-induced vital-sign alterations in addition to BT, such as a heart rate (HR) higher than that of normal controls (HR > 100 bpm) and an elevated respiratory rate (RR > 24 bpm) (Rechtman et al., 2020). Moreover, a cohort study in the United Kingdom revealed that patients with COVID-19 experienced significant increases in RR and slight increases in HR, but no significant increase in BT (Pimentel et al., 2020).

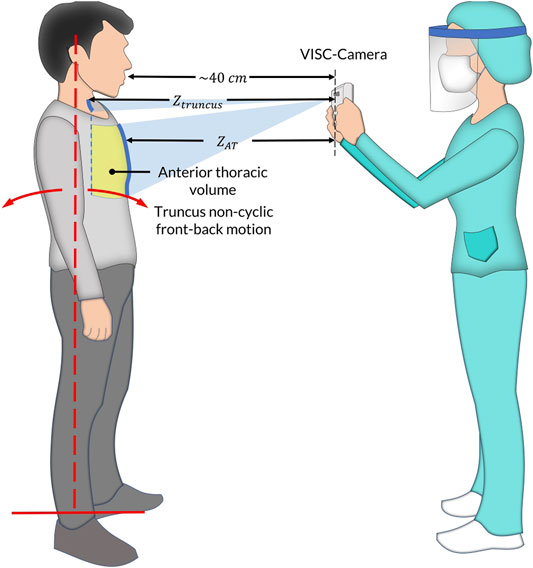

We have previously developed a vital-signs-based (RR, HR, and BT) infection screening system that can detect afebrile patients (Matsui et al., 2010; Dagdanpurev et al., 2019). However, that system had limited accuracy in orthostatic RR measurement because of the patient's non-respiratory motion, such as the truncus front-back motion required to maintain an upright posture (Figure 1). Using Doppler radars, we have also developed non-contact screening systems for sepsis and pneumonia (Matsui et al., 2020; Otake et al., 2021). However, Doppler radars are limited in their ability to reduce noise induced by body movements. Therefore, in this study, we replaced Doppler radars with image sensors used in our previous studies, namely a depth camera (Takamoto et al., 2020) and a red–green–blue (RGB) camera (Unursaikhan et al., 2021).

FIGURE 1. The vital-signs-based infection screening composite-type camera (VISC-Camera) measurement setup in the standing position. In addition to respiratory anterior thorax (

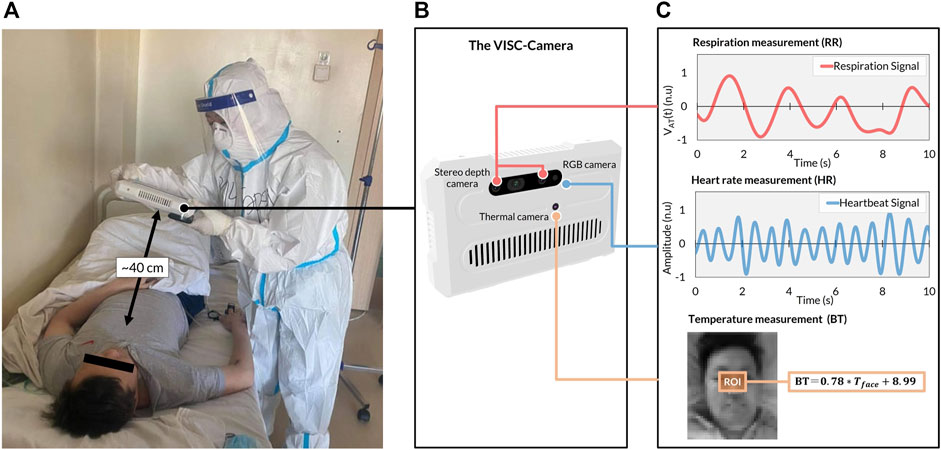

To achieve accurate orthostatic RR assessment, we propose a truncus motion removal algorithm (TMRA), in which truncus motion is measured as depth-camera-determined neck movement. By adopting TMRA in addition to ordinary region of interest (ROI) tracking algorithms, we developed a mobile vital-signs-based infection screening composite-type camera (VISC-Camera). The VISC-Camera detects patients possibly infected with COVID-19 within 10 s. It uses a stereo depth camera for RR determination, an RGB camera for HR estimation, and a thermal camera for BT measurement (Figure 2). These three vital signs are among the indices used to determine the systemic inflammatory response syndrome (SIRS) score (Kaukonen et al., 2015).
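The following sketch shows how RR, HR, and BT enter the SIRS criteria. It counts how many of the three vital-sign criteria a single reading meets, using the conventional SIRS thresholds: temperature >38°C or <36°C, HR >90 beats/min, and RR >20 breaths/min. The white-cell-count criterion and the PaCO2 alternative to RR are left out because a camera cannot measure them.

This is illustration only. The VISC-Camera itself classifies examinees with logistic regression, not by counting SIRS criteria. The function name is hypothetical.

```python
def sirs_vital_sign_count(rr: float, hr: float, bt: float) -> int:
    """Count the SIRS vital-sign criteria met by one reading (illustrative helper).

    rr: respiratory rate [breaths/min]; hr: heart rate [beats/min]; bt: body temperature [°C].
    The white-cell-count criterion and the PaCO2 alternative to RR are omitted
    because they cannot be measured by a camera.
    """
    criteria = (
        bt > 38.0 or bt < 36.0,  # temperature criterion
        hr > 90.0,               # heart-rate criterion
        rr > 20.0,               # respiratory-rate criterion
    )
    return sum(criteria)  # True counts as 1, False as 0


# Hypothetical afebrile reading with tachypnoea only
print(sirs_vital_sign_count(rr=22.1, hr=80.0, bt=36.7))  # 1
```

An afebrile reading can still meet a SIRS criterion through RR alone. This is the situation the VISC-Camera targets.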

FIGURE 2. The VISC-Camera structure and vital-signs-related signals derived in the COVID-19 isolation unit. (A) VISC-Camera screening of a patient with COVID-19 within 10 s. (B) The three cameras of the VISC-Camera: a stereo depth camera for respiratory rate (RR) monitoring, an RGB camera for heart rate (HR) determination, and a thermal camera for body temperature (BT) measurement. (C) Vital-signs-related signals of the patient, comprising the respiration signal, the heartbeat signal, and the facial region of interest (ROI, orange rectangle) for BT determination.

To conduct rapid preliminary COVID-19 screening, we developed a portable vital-signs-based COVID-19 screening system using multiple image sensors (VISC-Camera) with a truncus (trunk) motion removal algorithm (TMRA). A clinical study in a Mongolian hospital revealed that the VISC-Camera enabled accurate screening of afebrile COVID-19 patients. This was owing to the increase in respiratory rate (RR) induced by COVID-19-related inflammatory responses. Clinical testing was conducted on 154 PCR-positive COVID-19 inpatients (aged 18–81 years; M/F = 87/67) within the initial 48 h of hospitalization at the First Central Hospital of Mongolia and on 147 healthy volunteers (aged 18–85 years; M/F = 70/77).

Materials and Methods

The VISC-Camera System Structure

The VISC-Camera has a seven-inch touchscreen display and incorporates three kinds of image sensors (Figure 2B):

- a stereo depth camera and an RGB camera (424 × 240 pixels, 30 frames/s; Intel RealSense D435i);
- a thermal camera (80 × 60 pixels, 9 frames/s; FLIR Lepton 2.5).

A circular polarizer lens is installed in front of the RGB camera to reduce reflections from glossy skin. The VISC-Camera has a built-in single-board computer (Raspberry Pi 4B) for data processing. Images from the stereo depth and RGB cameras are transferred to the single-board computer via USB 3.0, and images from the thermal camera via the serial peripheral interface (SPI). A built-in Li-ion battery enables continuous operation for over 100 measurements on a single charge. The housing is 3D-printed, with overall dimensions of 22 cm (L) × 14 cm (W) × 4 cm (H). The total weight of the VISC-Camera is 800 g.

The VISC-Camera Data Processing Algorithms

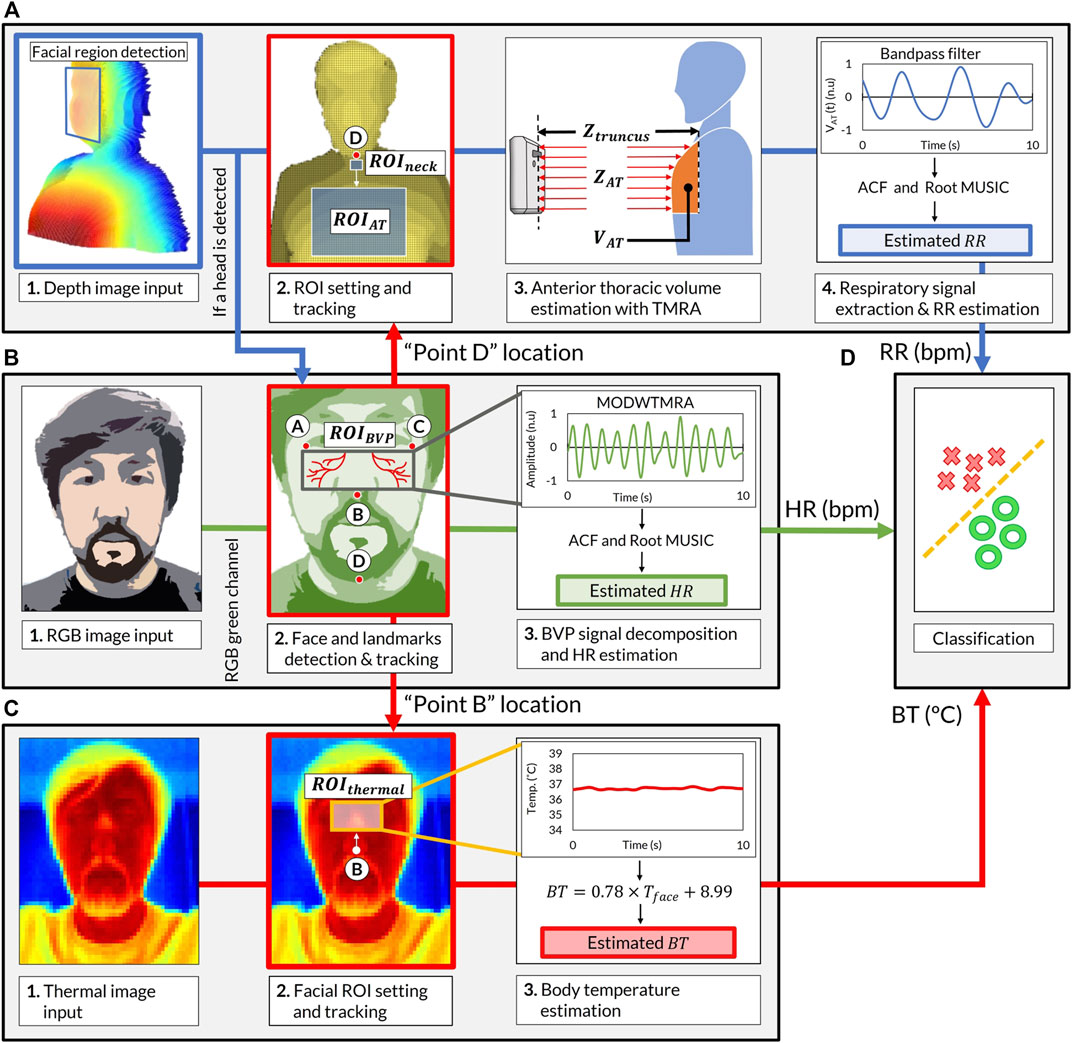

The VISC-Camera software is stand-alone and was developed in the Python programming language (Python Software Foundation). An overview of the software is shown in the block diagram in Figure 3 (Supplementary Video). We used the Open Source Computer Vision (OpenCV) library for image processing (Bradski, 2000) and Python's multiprocessing module to execute the image analyses simultaneously. On its quad-core processor, the VISC-Camera runs image capture and the RR, HR, and BT determinations as parallel processes. As described in the section "The Truncus Motion Removal Algorithm for Respiratory Rate Estimation," the VISC-Camera extracts RR from depth images while measuring the distance from the VISC-Camera to the examinee. RGB images enable HR estimation through facial landmark detection with a facial tracking algorithm. BT is derived from the thermal images within the RGB-determined ROI. Logistic regression analysis (LRA) from the Python scikit-learn machine-learning library (Pedregosa et al., 2011) was adopted to classify COVID-19 infection. The linear equation determined by LRA is expressed as follows:

where

FIGURE 3. Software block diagram of the VISC-Camera screening procedure. (A) Respiratory rate (RR) estimation procedure. (Chart 1) Stereo depth camera capture [424 × 240 pixels, 30 (frames/s)]. (Chart 2)

To keep the computational load as low as possible, we adopted the following procedures to determine the regions of interest.

Heart Rate Estimation

The VISC-Camera determines HR using an RGB image-based remote photoplethysmography (rPPG) method (Figure 3B). This method senses changes in facial skin tone induced by arterial pulsation, known as the blood volume pulse (BVP). To extract the BVP signal, the VISC-Camera detects the face using the Haar cascade classifier from the OpenCV library (Viola and Jones, 2004). The median flow object tracking algorithm from the OpenCV library was adopted to track the facial ROI for HR measurement without being affected by body motion, including head shaking (Kalal et al., 2010). To efficiently acquire the BVP signal, we selected
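The spectral step of rPPG can be illustrated with a minimal sketch. It assumes that the facial ROI has already been detected and tracked, as described above. It also assumes that the per-frame mean of the green channel inside the ROI serves as the BVP proxy. This is a common rPPG choice; the section does not state which channel or sub-region the VISC-Camera uses.

The following are illustrative assumptions, not VISC-Camera parameters:

- the function name;
- the 0.75–3.0 Hz (45–180 bpm) search band;
- the zero-padding factor.

HR is taken as the frequency of the strongest spectral peak in that band.

```python
import numpy as np


def estimate_heart_rate(green_means, fs=30.0, band=(0.75, 3.0), pad_factor=8):
    """Estimate HR [bpm] from the per-frame mean green value of a tracked facial ROI."""
    x = np.asarray(green_means, dtype=float)
    idx = np.arange(x.size)
    x = x - np.polyval(np.polyfit(idx, x, 1), idx)  # remove skin-tone level and slow linear drift
    x = x * np.hanning(x.size)                      # taper to limit spectral leakage
    n = pad_factor * x.size                         # zero-padding interpolates the spectrum
    spectrum = np.abs(np.fft.rfft(x, n=n))
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    in_band = (freqs >= band[0]) & (freqs <= band[1])
    peak_hz = freqs[in_band][np.argmax(spectrum[in_band])]
    return 60.0 * peak_hz


# Synthetic 10-s trace at 30 frames/s: 72-bpm pulse + illumination drift + sensor noise
fs = 30.0
t = np.arange(int(10 * fs)) / fs
rng = np.random.default_rng(1)
trace = 120.0 + 0.02 * t + 0.5 * np.sin(2 * np.pi * 1.2 * t) + rng.normal(0.0, 0.1, t.size)
hr_bpm = estimate_heart_rate(trace, fs=fs)
print(f"estimated HR: {hr_bpm:.1f} bpm")
```

At 30 frames/s and with a 10-s window, the raw FFT bin spacing is 0.1 Hz (6 bpm). Zero-padding only interpolates the spectrum, so it refines where the peak is read off but adds no true frequency resolution.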

The Truncus Motion Removal Algorithm for Respiratory Rate Estimation

The VISC-Camera determines RR by monitoring the examinee’s volume of the anterior thorax [

where

The facial landmark point D, which was coordinated in the HR estimation process, determines the location of the
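The exact TMRA formulation is not recoverable from this section, so the following is only a minimal sketch of the idea under one stated assumption. The assumption is that the neck ROI moves with the truncus during front-back sway but barely moves with breathing. Subtracting the mean neck depth from the mean thorax depth then cancels the common sway and leaves the respiratory excursion. RR is read as the dominant frequency in a hypothetical 0.1–0.7 Hz (6–42 breaths/min) band. The function names and all amplitudes and frequencies in the synthetic example are hypothetical.

```python
import numpy as np


def dominant_rate_per_min(signal, fs, band, pad_factor=8):
    """Frequency of the strongest in-band spectral peak, in cycles per minute."""
    x = np.asarray(signal, dtype=float)
    x = (x - x.mean()) * np.hanning(x.size)   # remove depth offset, taper the window
    n = pad_factor * x.size
    spectrum = np.abs(np.fft.rfft(x, n=n))
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    in_band = (freqs >= band[0]) & (freqs <= band[1])
    return 60.0 * freqs[in_band][np.argmax(spectrum[in_band])]


def respiratory_rate_tmra(thorax_depth_mm, neck_depth_mm, fs=30.0, band=(0.1, 0.7)):
    """RR [breaths/min] after subtracting neck depth (assumed sway-only) from thorax depth."""
    thorax = np.asarray(thorax_depth_mm, dtype=float)
    neck = np.asarray(neck_depth_mm, dtype=float)
    return dominant_rate_per_min(thorax - neck, fs, band)


# Synthetic 10-s mean-depth traces at 30 frames/s (all values hypothetical)
fs = 30.0
t = np.arange(int(10 * fs)) / fs
rng = np.random.default_rng(2)
sway = 10.0 * np.sin(2 * np.pi * 0.12 * t)    # truncus front-back sway [mm]
breath = 3.0 * np.sin(2 * np.pi * 0.30 * t)   # chest-wall excursion, 18 breaths/min
thorax = 800.0 + sway + breath + rng.normal(0.0, 0.2, t.size)
neck = 780.0 + sway + rng.normal(0.0, 0.2, t.size)

rr_raw = dominant_rate_per_min(thorax, fs, (0.1, 0.7))
rr_tmra = respiratory_rate_tmra(thorax, neck, fs=fs)
print(f"without TMRA: {rr_raw:.1f} breaths/min, with TMRA: {rr_tmra:.1f} breaths/min")
```

In this synthetic case, the uncorrected thorax signal is dominated by the slower, larger sway, so the raw estimate falls well below the true 18 breaths/min. The neck-referenced signal recovers the breathing rhythm.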

Body Temperature Estimation

The VISC-Camera estimates BT from the

where
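The BT extraction formula is also missing from this section. The following minimal sketch shows one plausible way to combine the RGB-determined facial ROI with the thermal frame. It rests on these assumptions, which are ours:

- the thermal frame is already converted to °C;
- the two cameras are aligned closely enough that scaling the ROI from 424 × 240 to 80 × 60 pixels maps it onto the same facial region (parallax is ignored);
- the hottest pixel in the ROI is taken as the facial temperature;
- a hypothetical linear calibration (`slope`, `offset`) maps that value to an axillary-equivalent BT.

The function names are hypothetical.

```python
import math

import numpy as np


def rgb_roi_to_thermal(roi, rgb_size=(424, 240), thermal_size=(80, 60)):
    """Scale an (x, y, w, h) ROI from RGB pixels to thermal pixels.

    Assumes both cameras share the same field of view (parallax ignored).
    Returns (x0, y0, x1, y1) with exclusive upper bounds.
    """
    x, y, w, h = roi
    (rgb_w, rgb_h), (th_w, th_h) = rgb_size, thermal_size
    x0 = math.floor(x * th_w / rgb_w)
    y0 = math.floor(y * th_h / rgb_h)
    x1 = min(math.ceil((x + w) * th_w / rgb_w), th_w)
    y1 = min(math.ceil((y + h) * th_h / rgb_h), th_h)
    return x0, y0, max(x1, x0 + 1), max(y1, y0 + 1)


def estimate_bt(thermal_c, rgb_roi, slope=1.0, offset=0.0):
    """Hypothetical BT estimate: hottest pixel in the mapped ROI, then a linear calibration."""
    x0, y0, x1, y1 = rgb_roi_to_thermal(rgb_roi)
    face_max = float(np.max(thermal_c[y0:y1, x0:x1]))  # rows = y, columns = x
    return slope * face_max + offset


frame = np.full((60, 80), 30.0)   # thermal frame in °C (60 rows x 80 columns)
frame[35, 44] = 36.2              # warm facial pixel inside the mapped ROI
frame[0, 0] = 45.0                # hot object outside the ROI, ignored
print(estimate_bt(frame, rgb_roi=(212, 120, 40, 40)))
```

Restricting the search to the RGB-determined ROI is what keeps hot background objects, such as the 45°C pixel here, from being mistaken for the face.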

Image Quality Assessment

Capturing stable images from a proper position is essential for measuring reliable signals with image-based remote methods. VISC-Camera screening therefore follows this procedure:

1. With 3D visual assistance on the screen, the examiner orients the device toward the examinee.
2. Before measurement begins, the VISC-Camera assesses the examinee's posture in terms of the roll and yaw angles, using facial landmark points A–C.
3. After measurement starts, the VISC-Camera detects sudden motions while capturing images, using a second-derivative-based blur detection method (Pech-Pacheco et al., 2000).

We used the Laplacian operator as the second-derivative operator, with the following 3 × 3 kernel:

The pre-defined motion detection threshold value of the variance of the absolute value was
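The kernel and threshold are not shown in this section, but the variance-of-Laplacian focus measure can be sketched as follows. The sketch assumes the common 4-neighbour kernel [[0, 1, 0], [1, −4, 1], [0, 1, 0]]. This is the same aperture that OpenCV's `cv2.Laplacian` applies with `ksize=1`; OpenCV also processes border pixels, which this sketch skips. Following the wording above, the sketch takes the variance of the absolute Laplacian response. A sharp frame scores high, and a frame blurred by sudden motion scores low. The function names and the `threshold` argument are hypothetical.

```python
import numpy as np


def laplacian_abs_variance(gray):
    """Variance of |Laplacian| with the 4-neighbour kernel [[0,1,0],[1,-4,1],[0,1,0]]."""
    g = np.asarray(gray, dtype=float)
    # Interior pixels only: up + down + left + right - 4 * centre
    lap = (g[:-2, 1:-1] + g[2:, 1:-1] + g[1:-1, :-2] + g[1:-1, 2:]
           - 4.0 * g[1:-1, 1:-1])
    return float(np.var(np.abs(lap)))


def is_motion_frame(gray, threshold):
    """Flag a frame as motion-blurred when its sharpness score falls below threshold."""
    return laplacian_abs_variance(gray) < threshold


rng = np.random.default_rng(3)
textured = rng.uniform(0.0, 255.0, size=(240, 424))  # sharp, high-frequency content
flat = np.full((240, 424), 128.0)                   # extreme blur: no edges at all
print(is_motion_frame(textured, threshold=100.0), is_motion_frame(flat, threshold=100.0))
```

A frame flagged this way would be discarded or the measurement restarted. Which of these the VISC-Camera does is not stated in this section.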

Clinical Testing of VISC-Camera at the First Central Hospital of Mongolia for COVID-19 Patients

We conducted clinical testing of the VISC-Camera in 154 patients who tested positive for COVID-19 by RT-PCR (aged 18–81 years; 87 males, 67 females) within the initial 48 h of hospitalization at the First Central Hospital of Mongolia. The control group comprised 147 healthy volunteers (aged 18–85 years; 70 males, 77 females).

All patients were afebrile (BT < 37.5°C) following the administration of antiviral agents (umifenovir 200 mg/day or favipiravir 200 mg/day). Febrile patients in the intensive care unit were not included. All healthy volunteers were examined using a COVID-19 symptom-based questionnaire and showed no symptoms for 1 week before and after VISC-Camera screening. We did not apply any body temperature criterion to exclude examinees with or without COVID-19. The demographics of the participants are summarized in Table 1.

Figure 1 shows the VISC-Camera measurement setup in the standing position. We did not give examinees any instructions on posture; standing, sitting, and supine examinees were included in our study. Posture may affect RR and HR; however, the RR of COVID-19 patients was markedly higher than that of healthy volunteers. The clinical tests were conducted indoors under moderate illumination (600–1,100 lux).

As references, a respiratory belt, visual respiratory counting, a fingertip pulsimeter, and a thermometer were used. The respiratory belt served as the RR reference for healthy volunteers. For COVID-19 patients, visual respiratory counting was used instead because person-to-person contact was strictly limited in the COVID-19 isolation unit. Prior to the clinical study, we tested the concordance between respiratory-belt-derived RR and RR determined by visual respiratory counting.
To evaluate false-positive cases induced by other infectious diseases with systemic inflammation, we also recruited 33 pediatric pneumonia inpatients (aged 1–14 years; 18 males, 15 females). They were measured with the VISC-Camera at the Central Hospital of Songinokhairkhan District in Mongolia. This study was approved by the Tokyo Metropolitan University ethics committee (approval number H20-038). All participants gave written informed consent. We obtained permission from the patient to publish the photograph in Figure 1.

Statistical Analysis

Pearson's correlation coefficient and Bland–Altman plots were used to analyze the correlation and agreement between the VISC-Camera measurements and the references. The results of the LRA classification model were used to calculate the sensitivity, specificity, negative predictive value (NPV), and positive predictive value (PPV). A t-test was conducted to compare the mean vital signs of the two groups. Ten-fold cross-validation was performed, and the receiver operating characteristic curve was calculated to evaluate the accuracy of the LRA models.
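The four screening metrics follow directly from the confusion matrix of the classifier's predictions. In the minimal sketch below, the function names are hypothetical and the counts are made up for illustration; they are not the study's results.

```python
def confusion_counts(y_true, y_pred):
    """Return (tp, fp, tn, fn) for binary labels (1 = COVID-19 positive/suspected, 0 = healthy)."""
    tp = fp = tn = fn = 0
    for truth, pred in zip(y_true, y_pred):
        if truth == 1 and pred == 1:
            tp += 1
        elif truth == 0 and pred == 1:
            fp += 1
        elif truth == 0 and pred == 0:
            tn += 1
        else:  # truth == 1 and pred == 0 (labels assumed to be 0 or 1)
            fn += 1
    return tp, fp, tn, fn


def screening_metrics(tp, fp, tn, fn):
    """Sensitivity, specificity, PPV and NPV from confusion-matrix counts."""
    return {
        "sensitivity": tp / (tp + fn),  # infected examinees correctly flagged
        "specificity": tn / (tn + fp),  # healthy examinees correctly cleared
        "ppv": tp / (tp + fp),          # flagged examinees who are infected
        "npv": tn / (tn + fn),          # cleared examinees who are healthy
    }


# Made-up counts for illustration only (not the study's confusion matrix)
metrics = screening_metrics(tp=90, fp=20, tn=80, fn=10)
print({name: round(value, 3) for name, value in metrics.items()})
```

Sensitivity and specificity do not depend on how many infected examinees are in the sample, but PPV and NPV do. This is why PPV and NPV obtained in a roughly balanced study cohort may not carry over to a low-prevalence screening setting.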

Results

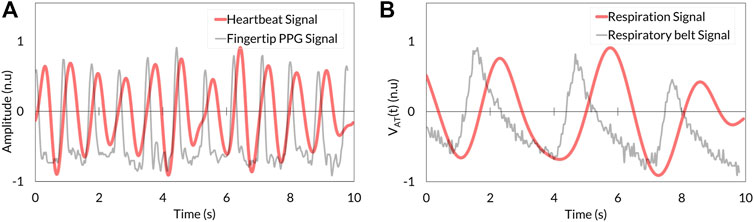

The heartbeat signal measured by the RGB camera showed periodic fluctuations similar to those determined by fingertip photoplethysmography (Figure 4A). The respiration signal determined by the stereo depth camera showed cyclic oscillations similar to those derived from the respiratory belt (Figure 4B).

FIGURE 4. Comparison between VISC-Camera-derived vital signals (red) and references (grey). (A) Heartbeat signal. (B) Respiration signal. PPG, photoplethysmography.

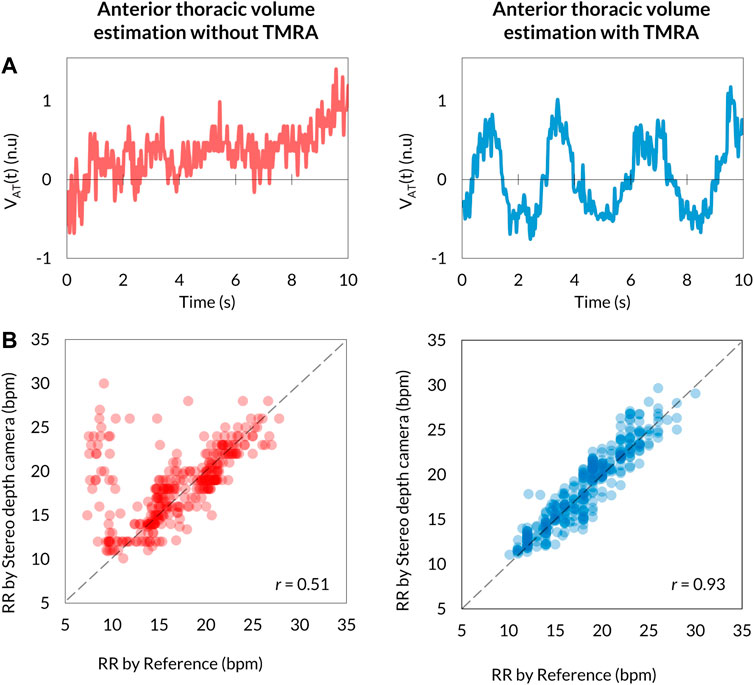

Respiratory signals determined as

FIGURE 5. Comparison of

BT, defined as the same as the axillary temperature, is determined using thermal camera-monitoring of the temperature (

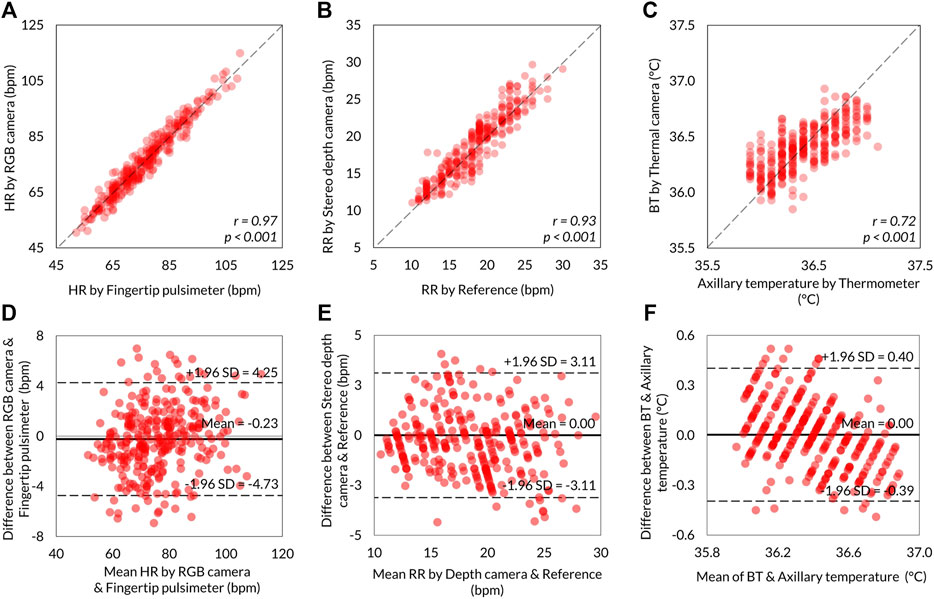

The level of agreement between the VISC-Camera and the references was assessed using the Pearson correlation coefficient (n = 301) and Bland–Altman plots. Correlation scatter plots for HR, RR, and BT are shown in Figures 6A–C, respectively. The VISC-Camera-determined HR, RR, and BT correlated significantly with those measured using the references (HR: r = 0.97, p < 0.001; RR: r = 0.93, p < 0.001; BT: r = 0.72, p < 0.001). The root mean squared errors (RMSEs) of HR, RR, and BT were 2.7 bpm, 1.6 bpm, and 0.2°C, respectively. Figures 6D–F show the Bland–Altman plots for HR, RR, and BT determined by the VISC-Camera and the references. The 95% limits of agreement were as follows:

- HR: −4.7 to 4.2 bpm (σ = 2.3);
- RR: −3.1 to 3.1 bpm (σ = 1.6);
- BT: −0.39 to 0.40°C (σ = 0.2).
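The agreement statistics can be computed as in the minimal sketch below, assuming paired device and reference readings. It returns:

- Pearson's r;
- the RMSE;
- the mean difference (bias);
- the standard deviation of the differences (σ, taken here as the sample SD with `ddof=1`, which is our assumption);
- the 95% limits of agreement, bias ± 1.96σ.

As a check on the reported figures, σ = 1.6 bpm for RR gives a half-width of 1.96 × 1.6 ≈ 3.1 bpm, consistent with the reported limits of −3.1 to 3.1 bpm. The function name and the toy readings are hypothetical.

```python
import numpy as np


def agreement_stats(device, reference):
    """Pearson r, RMSE and Bland-Altman bias / 95% limits of agreement."""
    d = np.asarray(device, dtype=float)
    r = np.asarray(reference, dtype=float)
    diff = d - r                          # device minus reference
    bias = diff.mean()                    # systematic offset
    sd = diff.std(ddof=1)                 # sample SD of the differences
    return {
        "pearson_r": float(np.corrcoef(d, r)[0, 1]),
        "rmse": float(np.sqrt(np.mean(diff ** 2))),
        "bias": float(bias),
        "sd": float(sd),
        "loa": (float(bias - 1.96 * sd), float(bias + 1.96 * sd)),
        "means": (d + r) / 2.0,           # x-axis values of a Bland-Altman plot
    }


# Toy paired readings (hypothetical), e.g. RR in bpm
stats = agreement_stats([10, 12, 14, 16], [11, 12, 13, 16])
print(round(stats["pearson_r"], 3), round(stats["rmse"], 3), stats["loa"])
```

A Bland–Altman plot places `means` on the x-axis and the differences on the y-axis. It exposes systematic bias that a high Pearson r alone can hide.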

FIGURE 6. Summary of the VISC-Camera's vital-signs measurement accuracy (n = 301). Scatter plots show the relationship between VISC-Camera-determined vital signs and reference vital-sign values. (A) HR with r = 0.97, p < 0.001, RMSE = 2.7. (B) RR with r = 0.93, p < 0.001, RMSE = 1.6. (C) BT with r = 0.72, p < 0.001, RMSE = 0.2. Bland–Altman plots illustrate the 95% limits of agreement of HR, RR, and BT measurements by the VISC-Camera and the references. (D) For HR, the RGB camera and fingertip pulsimeter ranged from −4.7 to 4.2 bpm vs. mean (σ = 2.3). (E) For RR, the stereo depth camera and the reference ranged from −3.1 to 3.1 bpm vs. mean (σ = 1.6). (F) BT and axillary temperature ranged from −0.39 to 0.40°C vs. mean (σ = 0.2).

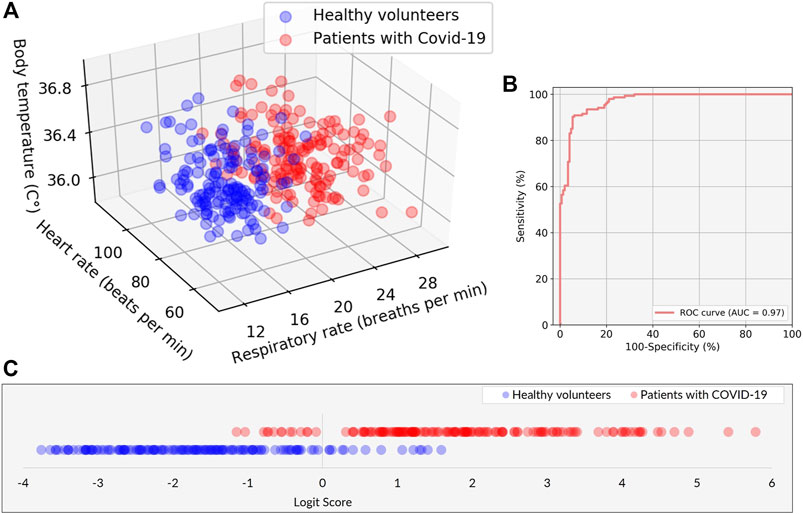

The 3D plot of RR, HR, and BT determined by the VISC-Camera is shown in Figure 7A. Although all patients with COVID-19 were afebrile, the 3D plot shows two distinct groups: patients and healthy volunteers. This is mainly because the RRs of infected patients were significantly higher than those of healthy volunteers (patients with COVID-19: mean RR = 22.1 bpm (σ = 2.7); healthy volunteers: mean RR = 15.1 bpm (σ = 2.4); two-tailed p < 0.05). To separate patients from healthy volunteers more accurately, the following logistic regression analysis (LRA) equation was determined by adopting RR, HR, and BT as explanatory variables:

where

FIGURE 7. Performance of the classification model in discriminating between patients with COVID-19 and healthy volunteers. (A) Scatter plots of the three explanatory variables RR, HR, and BT in patients with COVID-19 (red) and healthy volunteers (blue). (B) The receiver operating characteristic (ROC) curve, with an area under the curve (AUC) of 0.97. (C) Classification results represented by logit scores (Logit Score ≥ 0 ⇒ suspected COVID-19; Logit Score < 0 ⇒ healthy).
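The classification step can be sketched with scikit-learn, which the authors report using for LRA. The fitted model is linear in the explanatory variables, i.e., logit = β0 + β1·RR + β2·HR + β3·BT. The coefficient symbols are ours; the study's coefficients are not given in this section. An examinee is flagged when the logit score is ≥ 0, as in Figure 7C.

The sketch uses synthetic data only. The RR means and SDs follow the group values reported above, whereas the HR and BT distributions are hypothetical. Feature standardization is our design choice. Out-of-fold logit scores from stratified 10-fold cross-validation give the AUC, sensitivity, and specificity.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import StratifiedKFold, cross_val_predict
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler


def make_synthetic_cohort(seed=0):
    """Synthetic (RR, HR, BT) rows; NOT the study data.

    RR mean/SD per group follow the values reported in the Results;
    HR and BT distributions are hypothetical placeholders.
    """
    rng = np.random.default_rng(seed)
    n_patients, n_controls = 154, 147
    patients = np.column_stack([
        rng.normal(22.1, 2.7, n_patients),   # RR [bpm], reported patient mean/SD
        rng.normal(85.0, 12.0, n_patients),  # HR [bpm], hypothetical
        rng.normal(36.6, 0.3, n_patients),   # BT [°C], hypothetical, afebrile
    ])
    controls = np.column_stack([
        rng.normal(15.1, 2.4, n_controls),   # RR [bpm], reported control mean/SD
        rng.normal(75.0, 10.0, n_controls),  # HR [bpm], hypothetical
        rng.normal(36.5, 0.3, n_controls),   # BT [°C], hypothetical
    ])
    X = np.vstack([patients, controls])
    y = np.concatenate([np.ones(n_patients, dtype=int), np.zeros(n_controls, dtype=int)])
    return X, y


X, y = make_synthetic_cohort()
model = make_pipeline(StandardScaler(), LogisticRegression())
cv = StratifiedKFold(n_splits=10, shuffle=True, random_state=0)

# Out-of-fold logit scores: each examinee is scored by a model that never saw them
logit = cross_val_predict(model, X, y, cv=cv, method="decision_function")
suspected = (logit >= 0).astype(int)  # Logit Score >= 0 -> suspected COVID-19

auc = roc_auc_score(y, logit)
sensitivity = float(np.mean(suspected[y == 1] == 1))
specificity = float(np.mean(suspected[y == 0] == 0))
print(f"AUC={auc:.2f}  sensitivity={sensitivity:.2f}  specificity={specificity:.2f}")
```

Using out-of-fold scores rather than refitting on all data keeps the reported AUC, sensitivity, and specificity from being optimistically biased by evaluating on training examinees.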

Discussions

The VISC-Camera can be used as an Internet of Things device for an infectious disease surveillance platform, as proposed in our previous study (Sun et al., 2020). It can also be used routinely in hospitals as a daily vital-signs recording tool, because it determines RR, HR, and BT within 10 s and its measurements correlate significantly with reference values. Generally, healthcare professionals take more than 3 min to measure and record vital signs. Therefore, in addition to screening, the proposed system may reduce the daily burden on medical staff caring for patients with COVID-19.

In addition, the sequential organ failure assessment (SOFA) score has long been used to predict ICU mortality. However, in recent years, particularly following the publication of the Sepsis-3 guidelines (Singer et al., 2016), the quick SOFA (qSOFA) score has been used in clinical practice more commonly than the SIRS or SOFA scores, which are better suited to evaluation during the early stages of sepsis screening. The qSOFA approach is also used when a sudden change in patient status is suspected. Although qSOFA uses only three parameters (RR, blood pressure, and consciousness), traditional RR measurement is relatively laborious for medical staff. Therefore, a device that measures RR quickly and easily could be a helpful tool in emergency clinical settings.

Dong et al. recently reported non-contact COVID-19 screening using continuous-wave radar-based sleep monitoring equipment with XGBoost and LRA models, although their approach is limited to the supine position (Dong et al., 2022). In contrast, the VISC-Camera enables non-contact COVID-19 screening in standing and sitting postures as well as in the supine position. In addition, our study focuses not only on technical aspects but also on clinical findings, such as the successful screening of afebrile COVID-19 patients by non-contact vital-signs monitoring.

In our study, the patients had been treated with antiviral medication in advance; thus, their clinical characteristics differed from those at initial presentation. Despite their lack of fever, we found a marked increase in RR in patients with COVID-19. Therefore, the ability of the proposed system to accurately measure RR during preliminary screening may help reduce false-negative results for COVID-19 infection.

Our study has some limitations. Critically ill patients with COVID-19 were excluded, and all participants were recruited from one country. Further data are therefore needed to improve the generalizability of the VISC-Camera, encompassing examinees with various degrees of COVID-19 severity, diverse ethnicities from various countries, and a wide range of ages. In this study, the VISC-Camera was used in air-conditioned areas under moderate illumination (600–1,100 lux); it should also be tested in other, less optimal environments. The VISC-Camera detects COVID-19-induced inflammatory responses rather than the SARS-CoV-2 virus itself. Therefore, other infectious diseases, such as pneumonia or influenza, may be misdiagnosed as COVID-19. The VISC-Camera was developed for preliminary screening, not for definitive diagnosis.

The portable VISC-Camera described here, which conducts infection screening within 10 s in a non-contact manner, appears promising for the preliminary screening of potential COVID-19 infection in afebrile patients.

Data Availability Statement

The raw data supporting the conclusion of this article will be made available by the authors, without undue reservation.

Ethics Statement

The studies involving human participants were reviewed and approved by the Tokyo Metropolitan University ethics committee (approval number H20-038). The patients/participants provided their written informed consent to participate in this study. Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

Author Contributions

BU and TM designed the research. BU, KH, and TM wrote the manuscript. GA and KH supervised the medical aspects of screening, and GA performed clinical testing. GS, OP, and LC contributed to the image-processing, signal-processing methods, and 3D designing. All authors reviewed the manuscript.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

We express our sincere thanks to all the participants from the First Central Hospital of Mongolia. The authors sincerely thank Khishigjargal Batsukh, the vice director of clinical affairs of the First Central Hospital of Mongolia, for permission to carry out the clinical testing. We sincerely thank Saeko Nozawa for her contributions to manuscript preparation.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fphys.2022.905931/full#supplementary-material

Supplementary Video S1 | A demonstration video of the VISC-Camera’s vital signs-related signals extraction procedure.

References

Barabell A. (1983). Improving the Resolution Performance of Eigenstructure-Based Direction-Finding Algorithms. ICASSP '83. IEEE International Conference on Acoustics, Speech, and Signal Processing. Boston, MA, USA, 14-16 April. doi:10.1109/icassp.1983.1172124

Bellemare F., Jeanneret A., Couture J. (2003). Sex Differences in Thoracic Dimensions and Configuration. Am. J. Respir. Crit. Care Med. 168, 305–312. doi:10.1164/rccm.200208-876oc

Dagdanpurev S., Abe S., Sun G., Nishimura H., Choimaa L., Hakozaki Y., et al. (2019). A Novel Machine-Learning-Based Infection Screening System via 2013-2017 Seasonal Influenza Patients' Vital Signs as Training Datasets. J. Infect. 78, 409–421. doi:10.1016/j.jinf.2019.02.008

Dong C., Qiao Y., Shang C., Liao X., Yuan X., Cheng Q., et al. (2022). Non-contact Screening System Based for Covid-19 on XGBoost and Logistic Regression. Comput. Biol. Med. 141, 105003. doi:10.1016/j.compbiomed.2021.105003

Goyal P., Choi J. J., Pinheiro L. C., Schenck E. J., Chen R., Jabri A., et al. (2020). Clinical Characteristics of Covid-19 in New York City. N. Engl. J. Med. 382, 2372–2374. doi:10.1056/nejmc2010419

Kalal Z., Mikolajczyk K., Matas J. (2010). Forward-backward Error: Automatic Detection of Tracking Failures. 2010 20th International Conference on Pattern Recognition. Istanbul, Turkey, 23-26 Aug. 2010. doi:10.1109/icpr.2010.675

Kaukonen K.-M., Bailey M., Pilcher D., Cooper D. J., Bellomo R. (2015). Systemic Inflammatory Response Syndrome Criteria in Defining Severe Sepsis. N. Engl. J. Med. 372, 1629–1638. doi:10.1056/nejmoa1415236

Matsui T., Hakozaki Y., Suzuki S., Usui T., Kato T., Hasegawa K., et al. (2010). A Novel Screening Method for Influenza Patients Using a Newly Developed Non-contact Screening System. J. Infect. 60, 271–277. doi:10.1016/j.jinf.2010.01.005

Matsui T., Kobayashi T., Hirano M., Kanda M., Sun G., Otake Y., et al. (2020). A Pneumonia Screening System Based on Parasympathetic Activity Monitoring in Non-contact Way Using Compact Radars beneath the Bed Mattress. J. Infect. 81, e142–e144. doi:10.1016/j.jinf.2020.06.002

Otake Y., Kobayashi T., Hakozaki Y., Matsui T. (2021). Non-contact Heart Rate Variability Monitoring Using Doppler Radars Located beneath Bed Mattress: A Case Report. Eur. Heart J. - Case Rep. 5. doi:10.1093/ehjcr/ytab273

Pech-Pacheco J. L., Cristobal G., Chamorro-Martinez J., Fernandez-Valdivia J. (2000). Diatom Autofocusing in Brightfield Microscopy: A Comparative Study. Proceedings 15th International Conference on Pattern Recognition. ICPR-2000. Barcelona, Spain, 3-7 Sept. doi:10.1109/icpr.2000.903548

Pedregosa F., Varoquaux G., Gramfort A., Michel V., Thirion B., Grisel O., et al. (2011). Scikit-learn: Machine Learning in Python. J. Mach. Learn. Res. 12, 2825–2830.

Pimentel M. A. F., Redfern O. C., Hatch R., Young J. D., Tarassenko L., Watkinson P. J. (2020). Trajectories of Vital Signs in Patients with COVID-19. Resuscitation 156, 99–106. doi:10.1016/j.resuscitation.2020.09.002

Rechtman E., Curtin P., Navarro E., Nirenberg S., Horton M. K. (2020). Vital Signs Assessed in Initial Clinical Encounters Predict COVID-19 Mortality in an NYC Hospital System. Sci. Rep. 10, 21545. doi:10.1038/s41598-020-78392-1

Richardson S., Hirsch J. S., Narasimhan M., Crawford J. M., McGinn T., Davidson K. W., et al. (2020). Presenting Characteristics, Comorbidities, and Outcomes Among 5700 Patients Hospitalized with Covid-19 in the New York City Area. JAMA 323, 2052. doi:10.1001/jama.2020.6775

Singer M., Deutschman C. S., Seymour C. W., Shankar-Hari M., Annane D., Bauer M., et al. (2016). The Third International Consensus Definitions for Sepsis and Septic Shock (Sepsis-3). JAMA 315, 801. doi:10.1001/jama.2016.0287

Sun G., Trung N. V., Hoi L. T., Hiep P. T., Ishibashi K., Matsui T. (2020). Visualisation of Epidemiological Map Using an Internet of Things Infectious Disease Surveillance Platform. Crit. Care 24, 400. doi:10.1186/s13054-020-03132-w

Takamoto H., Nishine H., Sato S., Sun G., Watanabe S., Seokjin K., et al. (2020). Development and Clinical Application of a Novel Non-contact Early Airflow Limitation Screening System Using an Infrared Time-Of-Flight Depth Image Sensor. Front. Physiol. 11, 552942. doi:10.3389/fphys.2020.552942

Unursaikhan B., Tanaka N., Sun G., Watanabe S., Yoshii M., Funahashi K., et al. (2021). Development of a Novel Web Camera-Based Contact-free Major Depressive Disorder Screening System Using Autonomic Nervous Responses Induced by a Mental Task and its Clinical Application. Front. Physiol. 12, 642986. doi:10.3389/fphys.2021.642986

Viola P., Jones M. J. (2004). Robust Real-Time Face Detection. Int. J. Comput. Vis. 57, 137–154. doi:10.1023/b:visi.0000013087.49260.fb

von Arx T., Tamura K., Yukiya O., Lozanoff S. (2018). The Face–A Vascular Perspective. A Literature Review. Swiss Dent. J. 128 (5), 382–392.

World Health Organization (2020a). Diagnostic Testing for SARS-COV-2. World Health Organization. Available at: https://www.who.int/publications/i/item/diagnostic-testing-for-sars-cov-2 (Accessed January 18, 2022).

World Health Organization (2020b). Public Health Surveillance for COVID-19: Interim Guidance. World Health Organization. Available at: https://apps.who.int/iris/handle/10665/333752 (Accessed January 18, 2022).

World Health Organization (2022). Weekly Operational Update on COVID-19. World Health Organization. Available at: https://www.who.int/publications/m/item/weekly-operational-update-on-covid-19---1-march-2022 (Accessed March 10, 2022).

Keywords: infection screening, COVID-19, non-contact vital signs measurement, mobile screening system, remote photoplethysmography, body temperature measurement, respiratory rate measurement, heart rate measurement

Citation: Unursaikhan B, Amarsanaa G, Sun G, Hashimoto K, Purevsuren O, Choimaa L and Matsui T (2022) Development of a Novel Vital-Signs-Based Infection Screening Composite-Type Camera With Truncus Motion Removal Algorithm to Detect COVID-19 Within 10 Seconds and Its Clinical Validation. Front. Physiol. 13:905931. doi: 10.3389/fphys.2022.905931

Received: 28 March 2022; Accepted: 23 May 2022;

Published: 22 June 2022.

Edited by:

Jinseok Lee, Kyung Hee University, South Korea

Reviewed by:

Bersain A. Reyes, Universidad Autónoma de San Luis Potosí, Mexico

Youngsun Kong, University of Connecticut, United States

Copyright © 2022 Unursaikhan, Amarsanaa, Sun, Hashimoto, Purevsuren, Choimaa and Matsui. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Takemi Matsui, tmatsui@tmu.ac.jp

Batbayar Unursaikhan, Gereltuya Amarsanaa, Guanghao Sun, Kenichi Hashimoto, Otgonbat Purevsuren, Lodoiravsal Choimaa and Takemi Matsui