Abstract

Purpose

The SARS-CoV-2 / COVID-19 pandemic has raised concerns about the potential mental health impact on frontline clinical staff. However, given that poor mental health is common in acute medical staff, we aimed to estimate the additional burden of work involving high exposure to infected patients.

Methods

We report a rapid review, meta-analysis, and living meta-analysis of studies using validated measures from outbreaks of COVID-19, Ebola, H1N1 influenza, Middle East respiratory syndrome (MERS), and severe acute respiratory syndrome (SARS).

Results

A random effects meta-analysis found that high-exposure work is not associated with an increased prevalence of above cut-off scoring (anxiety: RR = 1.30, 95% CI 0.87–1.93, Total N = 12,473; PTSD symptoms: RR = 1.16, 95% CI 0.75–1.78, Total N = 6604; depression: RR = 1.50, 95% CI 0.57–3.95, Total N = 12,224). For continuous scoring, high-exposure work was associated with only a small additional burden of acute mental health problems compared to low-exposure work (anxiety: SMD = 0.16, 95% CI 0.02–0.31, Total N = 6493; PTSD symptoms: SMD = 0.20, 95% CI 0.01–0.40, Total N = 5122; depression: SMD = 0.13, 95% CI -0.04–0.31, Total N = 4022). There was no evidence of publication bias.

Conclusion

Although epidemic and pandemic response work may add only a small additional burden, improving mental health through service management and provision of mental health services should be a priority given that baseline rates of poor mental health are already very high. As new studies emerge, they are being added to a living meta-analysis where all analysis code and data have been made freely available: https://osf.io/zs7ne/.

Introduction

The recent SARS-CoV-2/COVID-19 pandemic has seen an increased demand on clinical staff, who need to treat large numbers of patients, often in newly-purposed wards, with little disease-specific evidence to guide treatment. Many clinical staff have been moved into new roles and may be managing acutely unwell patients using unfamiliar equipment. Stresses caused by high patient mortality rates, staffing shortages, concerns about infecting self or family members, and changing guidance on personal protective equipment can add to work pressure. This has raised concerns about the potential impact on the mental health of epidemic and pandemic responders [1, 2].

Importantly, however, high rates of poor mental health are common in clinical staff working in acute medicine generally [3, 4, 5], so estimating the additional impact of epidemic and pandemic response, not solely the extent of poor mental health, is also important in guiding decisions to protect staff mental health. Recently, a meta-analysis by Kisely et al. [6] reported that healthcare workers in direct contact with affected patients had an odds ratio of approximately 1.71–1.74 for reporting acute mental health problems compared to healthcare workers not in direct contact with infected patients and, when analysing continuous outcomes, increased scores equivalent to an effect size (standardised mean difference or Hedges’ g) of approximately 0.2–0.4.

Given that the SARS-CoV-2/COVID-19 pandemic is likely to evolve in location, course and severity over time, traditional meta-analyses are only likely to give a snapshot of the current state of the evidence. Living systematic reviews or meta-analyses are a recent innovation that allows estimates to be updated as new evidence emerges [7, 8] but have rarely been used to date.

Consequently, we report a rapid review and meta-analysis that forms the basis of a living meta-analysis of the mental health of clinical staff dealing with epidemics and pandemics of high-risk infectious diseases, including studies from coronavirus disease 2019 (COVID-19), Ebola virus disease, H1N1 influenza, severe acute respiratory syndrome (SARS), and Middle East respiratory syndrome (MERS), to understand the potential impact on mental health and to inform policy on supporting staff during the current COVID-19 pandemic. The meta-analysis will be updated as new evidence becomes available and is published on the Open Science Framework.

Methods

There is no standardised procedure for conducting rapid reviews, although several approaches have been used to reduce the complexity of the review process [9]. We used an iterative rapid review procedure to identify relevant articles and then used the reference lists of already included studies to identify further articles. We searched PubMed, Medline, PsycINFO and Embase for articles including ‘mental health’ or ‘psychosocial’ or ‘emotional’ and ‘staff’ and a number of disease-specific key words (epidemic, epidemics, pandemic, flu, SARS, MERS, COVID-19, Ebola, Marburg, H1N1, H7N6) in the title. We also searched the pre-print servers medRxiv and SSRN for pre-prints relating to COVID-19.

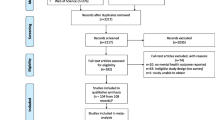

Duplicate articles were removed and titles and abstracts were screened for relevance. The full text of the remaining articles was read and reference lists of these papers were searched for additional relevant articles. These were reviewed for inclusion using the same method as articles found through database searches. Articles were included if they were peer-reviewed articles published in English or Spanish (the languages of the authors) that reported mental health outcomes in clinical staff managing high-risk infectious disease outbreaks. We considered studies on all healthcare staff in all settings as relevant. One article in Chinese with numeric results reported in the English-language abstract was also included. Studies of any methodology, including quantitative studies, qualitative studies, and anecdotal accounts, were included provided they reported original results. Following methods for improving rapid review study selection [10], selection was conducted by the first author and checked by the second author. A flowchart of the review process is presented in Fig. 1.

The final count of articles included by condition was: COVID-19: 14, Ebola: 11, H1N1: 4, MERS: 6, SARS: 39. No relevant studies on Marburg or H7N6 flu were found. Studies were conducted in China (17), Hong Kong (12), Taiwan (11), Canada (8), Singapore (7), Sierra Leone (4), South Korea (4), Saudi Arabia (3), Germany (1), Greece (1), Israel (1), Japan (1), Liberia (1), Mexico (1), Uganda and the Republic of Congo (1), United States (2), and two studies that recruited aid workers who had worked in various West African countries.

Numeric data from studies reporting (i) above cut-off prevalence or (ii) means and standard deviations from validated anxiety, post-traumatic stress disorder (PTSD) and depression symptom scales were extracted by the lead author and checked by the second author. Anxiety and depression measures were included on the basis of being validated measures of anxiety or depression, either as the sole focus of a scale or a validated subscale. PTSD symptoms were measured by PTSD-specific scales.

Because studies used differing cut-offs for determining caseness even when using the same scale, meta-analysis for prevalence was not possible. However, two types of meta-analytic estimates were possible: a comparison of risk ratios for studies that compared above cut-off scoring between high- and low-exposure groups, and a meta-analysis of differences in mean scale scores between high- and low-exposure groups. Both were used to help determine the mental health impact of work involving high exposure to infected patients in epidemic and pandemic health emergencies. Individual studies varied in which cut-off they used for particular scales. When prevalence for several cut-offs was reported (e.g. for mild, moderate, severe), we included the prevalence for the ‘moderate’-level cut-off to select the prevalence likely to reflect a clinically significant problem.
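To make the first type of estimate concrete, the following is a minimal sketch of how a single study's risk ratio and confidence interval could be computed from above cut-off counts. The function name, the Wald interval on the log scale, and the counts are illustrative assumptions, not the authors' code or data.

```python
import math

def risk_ratio(cases_high, n_high, cases_low, n_low, z=1.96):
    """Risk ratio of above cut-off scoring (high vs low exposure) with a Wald CI.

    Hypothetical helper: the interval is computed on the log scale,
    which is a common convention but an assumption here.
    """
    rr = (cases_high / n_high) / (cases_low / n_low)
    # Standard error of log(RR) from the 2x2 counts
    se_log_rr = math.sqrt(1 / cases_high - 1 / n_high + 1 / cases_low - 1 / n_low)
    lower = math.exp(math.log(rr) - z * se_log_rr)
    upper = math.exp(math.log(rr) + z * se_log_rr)
    return rr, lower, upper

# Made-up counts: 30 of 100 high-exposure staff and 20 of 100
# low-exposure staff score above the cut-off.
rr, ci_lower, ci_upper = risk_ratio(30, 100, 20, 100)
print(f"RR = {rr:.2f}, 95% CI {ci_lower:.2f} to {ci_upper:.2f}")
```

An interval that includes 1 is read as no detectable difference in risk between the two exposure groups.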

We defined high and low exposures as direct contact with infected patients, or work in wards where direct contact was considered highly likely (e.g. critical care, designated treatment wards, emergency departments) compared to clinicians working without direct contact or work in hospital areas where direct contact was unlikely. A narrative synthesis of risk factors was conducted across all studies regardless of methodology.

As part of the rapid review process, a risk of bias assessment was only conducted for studies included in the meta-analyses using tools to assess risk of bias in cohort studies [11] and case–control studies [12]. The risk of bias assessments were conducted by one author and checked by the other.

We conducted a power analysis for meta-analysis [13] to determine the minimum number of studies required to detect a statistically significant difference in standardised mean difference between high- and low-exposure groups. We calculated power to detect a small effect size and, based on results of the Brooks et al. [14] systematic review of SARS response studies, assumed medium study heterogeneity and an average group size of N = 150. This indicated that a minimum study count of 5 was needed. Effect sizes were calculated as standardised mean differences and we used a random effects model to estimate pooled effect sizes. The possibility of publication bias was assessed using Egger’s test [15]. All analyses were conducted with R (version 4.0.2) using the ‘meta’ package (version 4.13.0) and were conducted on a Linux x86_64 platform. All code and data for the fixed reference analysis reported in this paper are available online in the following archive: https://osf.io/xtecb/.
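The pooling step can be illustrated with a short sketch. The analysis itself was run in R with the ‘meta’ package. The Python below is only an illustration of the general technique, and it assumes a bias-corrected standardised mean difference (Hedges’ g) and the DerSimonian-Laird estimator of between-study variance, neither of which is confirmed by the text. The function names and the study values are hypothetical.

```python
import math

def hedges_g(mean_high, sd_high, n_high, mean_low, sd_low, n_low):
    """Bias-corrected standardised mean difference and its sampling variance."""
    df = n_high + n_low - 2
    pooled_sd = math.sqrt(((n_high - 1) * sd_high**2 + (n_low - 1) * sd_low**2) / df)
    d = (mean_high - mean_low) / pooled_sd
    j = 1 - 3 / (4 * df - 1)  # small-sample correction factor
    var_d = (n_high + n_low) / (n_high * n_low) + d**2 / (2 * (n_high + n_low))
    return j * d, j**2 * var_d

def pool_random_effects(effects, variances, z=1.96):
    """DerSimonian-Laird random-effects pooled estimate (needs at least 2 studies)."""
    k = len(effects)
    w = [1 / v for v in variances]
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum(w)
    # Cochran's Q measures dispersion of study effects around the fixed-effect mean
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    c = sum(w) - sum(wi**2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - (k - 1)) / c)  # between-study variance, truncated at zero
    w_star = [1 / (v + tau2) for v in variances]
    pooled = sum(wi * yi for wi, yi in zip(w_star, effects)) / sum(w_star)
    se = math.sqrt(1 / sum(w_star))
    return pooled, pooled - z * se, pooled + z * se, tau2

# Made-up studies: (mean, SD, n) for high exposure, then for low exposure
studies = [
    (12.0, 5.0, 120, 11.0, 5.0, 130),
    (9.5, 4.0, 80, 9.0, 4.2, 90),
    (15.0, 6.0, 200, 13.5, 6.1, 210),
]
results = [hedges_g(*s) for s in studies]
pooled, ci_lower, ci_upper, tau2 = pool_random_effects(
    [g for g, _ in results], [v for _, v in results]
)
print(f"SMD = {pooled:.2f}, 95% CI {ci_lower:.2f} to {ci_upper:.2f}, tau^2 = {tau2:.3f}")
```

When the study effects are identical the estimated between-study variance is zero and the random-effects estimate reduces to the fixed-effect one.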

Living meta-analysis methods

The living meta-analysis is updated based on the same protocol reported here but only includes studies reporting quantitative data for the purposes of updating the meta-analysis. Literature searches will be repeated at least monthly by the authors. Studies included in the ongoing meta-analysis will be monitored for retractions using the automatic retraction notification function of the reference manager Zotero. Reviewers will also monitor the literature and will replace studies included as pre-prints with their final versions when they are published in the peer-reviewed literature. We aim to continue with the living meta-analysis as long as it is feasible and will add a notice to the living review if the research team is no longer updating it.
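The update rules in this protocol (drop retracted studies, swap pre-prints for their published versions) can be sketched as a simple transformation of the study list. The record fields, identifiers and function name below are hypothetical and are not taken from the project's notebooks.

```python
def refresh_studies(studies, published_versions, retracted_ids):
    """Return the study set to use for the next update of the meta-analysis.

    studies: list of dicts with at least an "id" key (hypothetical schema)
    published_versions: dict mapping a pre-print's id to its published record
    retracted_ids: set of ids flagged as retracted
    """
    refreshed = []
    for study in studies:
        if study["id"] in retracted_ids:
            continue  # retracted studies are removed from the analysis
        # Use the peer-reviewed version of a pre-print when one exists
        refreshed.append(published_versions.get(study["id"], study))
    return refreshed

# Made-up records
current = [
    {"id": "study-a", "status": "preprint", "smd": 0.21},
    {"id": "study-b", "status": "published", "smd": 0.10},
]
updated = refresh_studies(
    current,
    published_versions={"study-a": {"id": "study-a", "status": "published", "smd": 0.19}},
    retracted_ids={"study-b"},
)
print(updated)
```

The pooled estimates would then be recomputed from the refreshed list at each update.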

The living meta-analysis has been implemented using Jupyter notebooks, which are a type of executable document that allows analysis code and text to be combined in a single document [16]. Following recommendations for research responding to the COVID-19 pandemic [17], all data and Jupyter notebooks, including analysis code and output used in the living meta-analysis, have been made freely available at an Open Science Framework archive. This approach also allows other researchers to replicate, modify, or continue the project independently of the authors.

The living meta-analysis is available online at the following address: https://osf.io/zs7ne/.

Results

The full details of included studies are in Tables S1–3 in the supplementary material and summaries of the quantitative and qualitative papers included are shown in Tables 1 and 2 below. All studies included in the living meta-analysis of quantitative studies are included in the data tables published in the online archive.

Meta-analytic estimates of the impact of working in high-exposure roles are presented in Tables 3 and 4. The forest plots for each analysis reported in this paper are provided in the supplementary material. Forest plots for all quantitative studies included in the living meta-analysis are published in the Jupyter notebooks on the online archive.

The confidence intervals for estimates of risk ratio crossed 1 (signifying equal risk and therefore no estimated difference between high- and low-exposure groups) for all differences based on prevalence of above cut-off scoring. The confidence intervals for standardised mean difference crossed zero only for depression. However, study heterogeneity was larger than we assumed during the power calculation and the depression meta-analysis included the fewest studies (k = 8), meaning it was likely under-powered to detect small effects.
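The dependence of power on heterogeneity can be illustrated with a rough sketch of a power calculation for a random-effects pooled standardised mean difference. Several inputs are assumptions rather than details given in the text: a ‘small’ effect is taken as 0.2, N = 150 is read as the size of each group, ‘medium’ heterogeneity is represented by a between-study variance of 0.67 times the typical sampling variance, and the target power is 80%. The function names are hypothetical.

```python
import math
from statistics import NormalDist

def meta_power(d, n_per_group, k, tau2_ratio=0.67, alpha=0.05):
    """Approximate power of a two-sided z-test on a random-effects pooled SMD."""
    norm = NormalDist()
    # Typical sampling variance of an SMD with two equal-sized groups
    v = 2 / n_per_group + d**2 / (4 * n_per_group)
    tau2 = tau2_ratio * v  # assumed between-study variance
    se_pooled = math.sqrt((v + tau2) / k)
    lam = d / se_pooled
    z_crit = norm.inv_cdf(1 - alpha / 2)
    return 1 - norm.cdf(z_crit - lam) + norm.cdf(-z_crit - lam)

def min_studies(d, n_per_group, target=0.80, **kwargs):
    """Smallest number of studies (starting from 2) that reaches the target power."""
    k = 2
    while meta_power(d, n_per_group, k, **kwargs) < target:
        k += 1
    return k

print(min_studies(0.2, 150))                  # assumed 'medium' heterogeneity
print(min_studies(0.2, 150, tau2_ratio=3.0))  # much larger heterogeneity
```

Under these assumed inputs the first call gives 5 studies. The second call shows that the required number of studies rises when heterogeneity is larger than assumed, which is the concern raised for the depression estimate.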

For those estimates where we had the minimum number of 10 studies [92] to test for publication bias (i.e. the estimates of SMDs for anxiety and PTSD symptoms), Egger’s test indicated no evidence for publication bias (anxiety: p = 0.614; PTSD symptoms: p = 0.212).
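For readers unfamiliar with the procedure, Egger’s test can be sketched as an ordinary least-squares regression of each study's standardised effect (effect divided by its standard error) on its precision (one divided by its standard error), where an intercept far from zero suggests small-study asymmetry. The sketch below is an illustration of that general idea using made-up values. It is not the implementation used in the ‘meta’ package, and it returns the t statistic and degrees of freedom rather than a p value to stay within the standard library.

```python
import math

def egger_regression(effects, std_errors):
    """Intercept of Egger's regression with its standard error, t statistic and df."""
    k = len(effects)
    x = [1 / se for se in std_errors]                  # precision
    y = [e / se for e, se in zip(effects, std_errors)]  # standardised effect
    mean_x, mean_y = sum(x) / k, sum(y) / k
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    residual_var = sum(
        (yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y)
    ) / (k - 2)
    se_intercept = math.sqrt(residual_var * (1 / k + mean_x**2 / sxx))
    t_stat = intercept / se_intercept if se_intercept > 0 else float("nan")
    return intercept, se_intercept, t_stat, k - 2

# Made-up SMDs and standard errors for ten hypothetical studies
smds = [0.10, 0.25, 0.05, 0.30, 0.18, 0.22, 0.12, 0.35, 0.08, 0.20]
ses = [0.08, 0.15, 0.06, 0.20, 0.10, 0.12, 0.09, 0.22, 0.07, 0.11]
intercept, se_int, t_stat, df = egger_regression(smds, ses)
print(f"intercept = {intercept:.2f}, t = {t_stat:.2f} on {df} df")
```

The t statistic would be compared against a t distribution with k − 2 degrees of freedom to obtain the p value.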

The assessment of individual study risk of bias (see supplementary materials) indicated that included studies showed moderate to high risk of bias.

Risk factors, protective factors and additional work stresses

Although it was not possible to examine risk and protective factors meta-analytically, a narrative synthesis was conducted to identify likely candidates.

Nurses typically reported higher levels of symptoms and distress than doctors [32, 35, 42, 56, 59, 63, 71, 81, 93] with a few studies reporting no difference [30, 57, 68] and one study reporting higher rates in doctors [22].

Several studies noted that seeing colleagues infected was a particular source of distress [30, 40, 88]. Furthermore, being quarantined after infection was reported as a predictor of psychological distress and poor mental health [20, 30, 31, 52, 55, 66, 76, 77] which was also reflected in one qualitative study [94] although three studies found no negative impact of quarantine [27, 66, 95].

Notably, numerous studies reported that clinical staff dealing with high-risk infectious disease experienced stigma from friends, family and the public [20, 32, 33, 57, 59, 61, 63, 66, 72, 85, 86, 87, 90, 96, 97] and perceived stigma was found to be a predictor of poor mental health in the three studies that looked at this association statistically [57, 61, 97].

Three studies found that clinical staff who were conscripted, were not willing, or had not volunteered for high-exposure roles reported particularly poor mental health outcomes [26, 30, 69, 98].

Although small in number, longitudinal studies suggested that symptoms of poor mental health tend to peak early during outbreaks but resolve for the majority of responders as time goes on [5, 26]. This pattern of initially high levels of anxiety and distress that reduce over time was also reflected in some of the qualitative studies [83, 89]. One cross-sectional study conducted at two time points and sampling from the same clinical teams found high levels of self-reported poor mental health for high-exposure workers 1 year after high-exposure work [57] although a study on a subsample of participants using a structured interview assessment found the incidence of new onset mental health problems was essentially no different to that found in the general population [43].

In terms of protective factors that reduced the chance of poor mental health or psychological distress, social support, team cohesion or organisational support were identified by numerous studies [22, 24, 31, 40, 45, 55, 68, 72, 78, 81]. This theme was reflected in several qualitative studies [87, 88].

Furthermore, the use and availability of, training with, and faith in infection prevention measures were identified as reducing distress [28, 40, 45, 55, 57, 59, 65, 81].

A sense of professional duty and altruistic acceptance of risk was found to be a protective factor in several studies [32, 52, 60, 77] which was a theme that was strongly reflected in qualitative studies [83, 85, 88, 91] although one study found accepting the risk of SARS infection as part of the job was not associated with reduced psychopathology [97].

Notably, all studies that asked about positive aspects of working in epidemic and pandemic response reported that participants described several factors related to development as an individual and as a team, particularly in terms of learning new skills and medical knowledge, better adherence to medical procedures, and closer team working and collegiality [28, 33, 59].

Role of formal psychological support services

Although a recent anecdotal report noted clinicians did not find mental health support particularly useful during COVID-19 response [1], several studies found that participants reported formal psychological services to be a useful source of support [32, 45, 72, 87, 90]. One study specifically asked whether staff needed ‘psychological treatment’ and 8.6% of healthcare workers dealing with COVID-19 reported they did [93]. Conversely, Chung and Yeung [29] reported that only 2% of staff responding to COVID-19 requested psychological support and all “were reassured after a single phone contact by the psychiatric nurse”, although this was a small study with just 69 participants.

Notably, two large COVID-19 studies suggest that the staff who are most in need of psychological support are the least likely to request or receive it. One study found that high exposure staff were likely to say they needed psychological treatment at half the rate of low exposure staff despite reporting higher levels of psychopathology [93]. In another, clinicians with mental health problems were less likely to receive psychological support than clinicians without [53].

Discussion

To estimate the impact of high-exposure work in epidemic and pandemic health emergencies, we completed a rapid review and meta-analysis of studies reporting on the mental health of clinical staff working in high-risk epidemic and pandemic health emergencies, including studies from the recent COVID-19 pandemic. These comparisons suggest that the impact of epidemic and pandemic response work on mental health is likely to be small, but in the case of meta-analytic assessment of mean scale scores, it is statistically detectable. However, it is worth noting that this small additional burden is in addition to already high levels of poor mental health that are common in acute medical staff. A narrative synthesis of potential risk factors for poor mental health in epidemic and pandemic response identified being a nurse, experiencing stigma from others, seeing colleagues infected, and being personally quarantined as predictors of worse outcomes. Protective factors included social and occupational support, effectiveness and faith in infection control measures, a sense of professional duty and altruistic acceptance of risk. Formal psychological services were identified as a valuable form of support.

It is worth noting some of the shortcomings of the evidence used to inform this review. More studies reported results from the analysis of mental health measures than reported sufficient detail (prevalence, mean scores etc.) to allow them to be included in the numeric assessment of results in this review. In terms of the assessment of individual studies, there was at least a moderate risk of bias although there was no suggestion of publication bias in the literature where this could be tested statistically. Furthermore, studies almost exclusively used self-report measures rather than structured interview assessments. Occasionally, some studies reported two categorisations that would potentially count as ‘high risk’, for example, ‘working in isolation wards’ and ‘working directly with infected patients’. In these situations, we chose the category that seemed most likely to reflect ‘direct contact’ and have opted for transparency by making our decisions clear in the open datasets. We also note potential shortcomings of the methods employed here. The rapid review approach is necessarily less thorough than a full systematic review and it is possible that the search strategy employed here may not exhaustively identify all relevant articles. However, we also note that the living meta-analysis approach has the potential to allow us to include ‘missed’ articles as they are identified. Additionally, there may be data from articles published in languages not accessible to the research team and this may reduce the international coverage of the analysis.

Notably, the results reported here largely echo those reported in a recent rapid-review and meta-analysis by Kisely et al. [6], although some differences are worth highlighting. In contrast to the analysis reported here, Kisely et al. created meta-analytic summaries of: (i) acute/post-traumatic stress, and (ii) psychological distress—that combined measures of burnout, depression, and general psychopathology. However, a similar pattern emerges. The effect sizes were relatively small and somewhat more pronounced for psychological distress, which is more likely to reflect acute reactions rather than longer-term mental health problems. One benefit of our living meta-analysis approach is that we will be able to see whether this pattern of results remains intact. Indeed, given the varying course of the COVID-19 pandemic it is possible that we may see increases in mental health problems in clinical staff if the pandemic endures for a far greater time than comparable outbreaks, or differs in severity between countries, where national differences may arise.

The results of this review and meta-analysis raise several issues with regard to providing ongoing and long-term support for clinical staff responding to such health emergencies. One is the extent to which epidemic and pandemic response is uniquely ‘traumatising’ and might lead to high levels of post-traumatic stress disorder, thereby implying that a trauma-focus for staff support should be the dominant approach to addressing outcomes of poor mental health. With regard to the published studies, although there are seemingly high rates of staff who score above cut-off on measures of PTSD symptoms, interpretation of results is not straightforward. Cut-off values used for defining a positive ‘case’ varied considerably even between studies using the same measure. The most widely used scale in these studies is the Impact of Events Scale-Revised (IES-R), for which a cut-off of 33–34 has been found to be the most predictive of diagnosable PTSD [99, 100], and yet most studies use a considerably lower cut-off. This suggests that an important proportion of those reported under the prevalence figures are likely to have transitory, sub-syndromal PTSD symptoms, or non-specific distress, that may be a risk for PTSD but are unlikely to reach the level of a diagnosable case. Indeed, the prevalences reported here are comparable to prevalences found in clinical staff more widely. For example, the reported prevalence of > 33 scoring on the IES-R is 15% in acute medical staff [101], 16% in surgical trainees [102], and 17% in cancer physicians [103]. Studies included in this review using similar IES-R cut-offs to these general medicine studies tended to report lower prevalence rates with only one study [81] reporting higher.

Furthermore, studies often did not differentiate between PTSD symptoms arising from pandemic and epidemic response work and those from other events, meaning it is not clear to what extent epidemic or pandemic response work was the key causal factor. Finally, symptoms were almost exclusively measured by self-report measures which are known to inflate the rate of true cases [104]. Indeed, high rates on self-report measures but low rates on structured interview assessments have been found in SARS responders [43].

This suggests that although there is potential for trauma and this should be included in considerations for staff support, it is currently not clear that PTSD is an outcome particularly associated with epidemic and pandemic response, meaning support efforts should be ‘trauma-ready’ rather than ‘trauma-focused’. However, we note here the importance of contextual factors. The capacity of the healthcare system, and indeed the population, are key contextual factors in determining the impact of the medical response on the staff responsible for delivering it. Although there is no strong evidence from the data we report here for the differential impact of managing specific infectious diseases, indicators such as mortality and morbidity, infectiousness, and social perception should be monitored as potential risk factors for poor mental health.

Indeed, some of these contextual factors were reflected in the risk and protective factors identified in the review. These chime with previous research on epidemic response work [14] and the wider literature on mental health outcomes in high-risk work [105] and suggest similar measures to support staff, namely promoting good leadership and team cohesion, and maintaining high standards of infection control and training. In addition, this review highlights that additional attention should be given to nurses, staff affected by seeing colleagues infected, staff who are quarantined after being infected, and individuals who experience stigma from others.

It is worth highlighting that formal psychological support was considered useful by clinical staff but that it was least requested and less frequently received by those with higher levels of mental health difficulties. Although voluntary engagement with mental health services is considered the ideal model to avoid potential iatrogenic effects [106], particular attention should be paid to pathways to formal support to make these as accessible as possible for those who need them. This is particularly important as staff working in acute medicine may already have high levels of poor mental health and access to effective treatment during health emergencies should be a priority. Considering there are no evidence-based interventions that have been shown to reduce poor mental health outcomes in those responding to healthcare emergencies, we agree with Greenberg et al. [2] that structural and systemic factors are likely to be key: namely, good leadership, the effective use and availability of protective measures, promoting team cohesion, and quality aftercare. Nevertheless, additional research on how epidemic response work impacts on mental health, and on the most effective provision and timing of interventions, is clearly a priority.

Role of living meta-analysis

The authors will be updating the living meta-analysis as new data becomes available to give the most up-to-date estimate of potential impact on the mental health of clinical staff. However, we realise that meta-analyses involve decisions at the inclusion, data extraction and analysis stages that may differ by the objectives of the researchers. For this reason, we have also made the data and the analysis code fully open to allow for full transparency, but also to facilitate others re-running the analysis with alternative decisions to examine the impact on outcome. We suggest that prioritising transparency and re-use of previous work is important in time critical health emergencies and we encourage other researchers to adopt this approach.

Priorities for research

The majority of studies are cross-sectional studies measuring mental health in staff working in epidemic and pandemic response. These studies typically have large sample sizes and good response rates. However, there remains a need for: (i) standardisation of measures, reporting, and criteria for prevalence; (ii) adequately sampled case–control studies that compare epidemic and pandemic response staff to control groups of other staff in acute medicine; and (iii) longitudinal studies to examine the course of psychological distress over time. We also note that (i) would effectively be solved if open data were available from the relevant studies, and we strongly encourage researchers to make these data available, particularly when researching infectious disease health emergencies.

References

Chen Q, Liang M, Li Y et al (2020) Mental health care for medical staff in China during the COVID-19 outbreak. Lancet Psychiatry 7:e15–e16. https://doi.org/10.1016/s2215-0366(20)30078-x

Greenberg N, Docherty M, Gnanapragasam S, Wessely S (2020) Managing mental health challenges faced by healthcare workers during covid-19 pandemic. BMJ. https://doi.org/10.1136/bmj.m1211

Carrieri D, Briscoe S, Jackson M et al (2018) ‘Care Under Pressure’: a realist review of interventions to tackle doctors’ mental ill-health and its impacts on the clinical workforce and patient care. BMJ Open 8:e021273. https://doi.org/10.1136/bmjopen-2017-021273

He J, Liao R, Yang Y et al (2020) The research of mental health status among medical personnel in china: a cross-sectional study. Social Science Research Network, Rochester

Su J-A, Weng H-H, Tsang H-Y, Wu J-L (2009) Mental health and quality of life among doctors, nurses and other hospital staff. J Int Soc Investig Stress 25:423–430. https://doi.org/10.1002/smi.1261

Kisely S, Warren N, McMahon L et al (2020) Occurrence, prevention, and management of the psychological effects of emerging virus outbreaks on healthcare workers: rapid review and meta-analysis. BMJ. https://doi.org/10.1136/bmj.m1642

Elliott JH, Synnot A, Turner T et al (2017) Living systematic review: 1. Introduction—the why, what, when, and how. J Clin Epidemiol 91:23–30. https://doi.org/10.1016/j.jclinepi.2017.08.010

Vandvik PO, Brignardello-Petersen R, Guyatt GH (2016) Living cumulative network meta-analysis to reduce waste in research: a paradigmatic shift for systematic reviews? BMC Med 14:59. https://doi.org/10.1186/s12916-016-0596-4

Haby MM, Chapman E, Clark R et al (2016) What are the best methodologies for rapid reviews of the research evidence for evidence-informed decision making in health policy and practice: a rapid review. Health Res Policy Syst 14:83. https://doi.org/10.1186/s12961-016-0155-7

Taylor-Phillips S, Geppert J, Stinton C et al (2017) Comparison of a full systematic review versus rapid review approaches to assess a newborn screening test for tyrosinemia type 1. Res Synth Methods 8:475–484. https://doi.org/10.1002/jrsm.1255

Busse JW, Guyatt GH (2015) Tool to assess risk of bias in cohort studies. Ottawa: Evidence Partners. Accessed 26 Apr 2020

Busse JW, Guyatt GH (2015) Tool to assess risk of bias in case-control studies. Ottawa: Evidence Partners. Available at: www.evidencepartners.com/resources/methodological-resources/ (accessed 29 Apr 2020)

Valentine JC, Pigott TD, Rothstein HR (2010) How many studies do you need?: a primer on statistical power for meta-analysis. J Educ Behav Stat 35:215–247. https://doi.org/10.3102/1076998609346961

Brooks SK, Dunn R, Amlôt R, et al (2018) A Systematic, Thematic Review of Social and Occupational Factors Associated With Psychological Outcomes in Healthcare Employees During an Infectious Disease Outbreak. https://www.ingentaconnect.com/content/wk/jom/2018/00000060/00000003/art00014. Accessed 8 Apr 2020

Egger M, Smith GD (1998) Meta-analysis bias in location and selection of studies. BMJ 316:61–66

Rule A, Birmingham A, Zuniga C et al (2019) Ten simple rules for writing and sharing computational analyses in Jupyter Notebooks. PLoS Comput Biol 15:e1007007. https://doi.org/10.1371/journal.pcbi.1007007

Holmes EA, O’Connor RC, Perry VH et al (2020) Multidisciplinary research priorities for the COVID-19 pandemic: a call for action for mental health science. Lancet Psychiatry. https://doi.org/10.1016/S2215-0366(20)30168-1

Alsubaie S, Temsah MH, Al-Eyadhy AA et al (2019) Middle East Respiratory Syndrome Coronavirus epidemic impact on healthcare workers’ risk perceptions, work and personal lives. J Infect Dev Ctries 13:920–926. https://doi.org/10.3855/jidc.11753

Austria-Corrales F, Cruz-Valdés B, Kiengelher LH et al (2011) Burnout syndrome among medical residents during the Influenza A H1N1 sanitary contigency in Mexico. Gac Med Mex 147:97–103

Bai Y, Lin C-C, Lin C-Y et al (2004) Survey of stress reactions among health care workers involved with the SARS outbreak. Psychiatr Serv 55:1055–1057. https://doi.org/10.1176/appi.ps.55.9.1055

Bukhari EE, Temsah MH, Aleyadhy AA et al (2016) Middle East respiratory syndrome coronavirus (MERS-CoV) outbreak perceptions of risk and stress evaluation in nurses. J Infect Dev Ctries 10:845–850. https://doi.org/10.3855/jidc.6925

Chan AOM, Huak CY (2004) Psychological impact of the 2003 severe acute respiratory syndrome outbreak on health care workers in a medium size regional general hospital in Singapore. Occup Med (Lond) 54:190–196. https://doi.org/10.1093/occmed/kqh027

Chan SSC, Leung GM, Tiwari AFY et al (2005) The impact of work-related risk on nurses during the SARS outbreak in Hong Kong. Family Community Health 28:274–287

Chang K, Gotcher DF, Chan M (2006) Does social capital matter when medical professionals encounter the SARS crisis in a Hospital Setting. Health Care Manag Rev 31:26–33

Chen C-S, Wu H-Y, Yang P, Yen C-F (2005) Psychological distress of nurses in Taiwan who worked during the outbreak of SARS. Psychiatr Serv 56:76–79. https://doi.org/10.1176/appi.ps.56.1.76

Chen R, Chou K-R, Huang Y-J et al (2006) Effects of a SARS prevention programme in Taiwan on nursing staff’s anxiety, depression and sleep quality: a longitudinal survey. Int J Nurs Stud 43:215–225. https://doi.org/10.1016/j.ijnurstu.2005.03.006

Chong M-Y, Wang W-C, Hsieh W-C et al (2004) Psychological impact of severe acute respiratory syndrome on health workers in a tertiary hospital. Br J Psychiatry 185:127–133. https://doi.org/10.1192/bjp.185.2.127

Chua SE, Cheung V, Cheung C et al (2004) Psychological effects of the SARS Outbreak in Hong Kong on High-Risk Health Care Workers. Can J Psychiatry 49:391–393. https://doi.org/10.1177/070674370404900609

Chung J, Yeung W (2020) Staff mental health self-assessment during the COVID-19 outbreak. East Asian Arch Psychiatry 30:34

Dai Y, Hu G, Xiong H, et al (2020) Psychological impact of the coronavirus disease 2019 (COVID-19) outbreak on healthcare workers in China. medRxiv. https://doi.org/10.1101/2020.03.03.20030874

Fiksenbaum L, Marjanovic Z, Greenglass ER, Coffey S (2006) Emotional exhaustion and state anger in nurses who worked during the SARS outbreak: the role of perceived threat and organizational support. Can J Community Mental Health 25:89–103. https://doi.org/10.7870/cjcmh-2006-0015

Goulia P, Mantas C, Dimitroula D et al (2010) General hospital staff worries, perceived sufficiency of information and associated psychological distress during the A/H1N1 influenza pandemic. BMC Infect Dis 10:322. https://doi.org/10.1186/1471-2334-10-322

Grace SL, Hershenfield K, Robertson E, Stewart DE (2005) The occupational and psychosocial impact of SARS on academic physicians in three affected hospitals. Psychosomatics 46:385–391. https://doi.org/10.1176/appi.psy.46.5.385

Ho SM, Kwong-Lo RS, Mak CW, Wong JS (2005) Fear of severe acute respiratory syndrome (SARS) among health care workers. J Consult Clin Psychol 73:344

Huang JZ, Han MF, Luo TD et al (2020) Mental health survey of 230 medical staff in a tertiary infectious disease hospital for COVID-19 [in Chinese]. Zhonghua Lao Dong Wei Sheng Zhi Ye Bing Za Zhi (Chinese Journal of Industrial Hygiene and Occupational Diseases) 38:E001. https://doi.org/10.3760/cma.j.cn121094-20200219-00063

Huang L, Xu FM, Liu HR (2020) Emotional responses and coping strategies of nurses and nursing college students during COVID-19 outbreak. medRxiv. https://doi.org/10.1101/2020.03.05.20031898

Iancu I, Strous R, Poreh A et al (2005) Psychiatric inpatients’ reactions to the SARS epidemic: an Israeli survey. Isr J Psychiatry Relat Sci 42:258–262

Ji D, Ji Y-J, Duan X-Z, et al (2017) Prevalence of psychological symptoms among Ebola survivors and healthcare workers during the 2014–2015 Ebola outbreak in Sierra Leone: a cross-sectional study. Oncotarget 8:12784–12791. https://doi.org/10.18632/oncotarget.14498

Jung H, Jung SY, Lee MH, Kim MS (2020) Assessing the presence of post-traumatic stress and turnover intention among nurses post–Middle East respiratory syndrome outbreak: the importance of supervisor support. Workplace Health Saf. https://doi.org/10.1177/2165079919897693

Khalid I, Khalid TJ, Qabajah MR et al (2016) Healthcare workers emotions, perceived stressors and coping strategies during a MERS-CoV outbreak. Clin Med Res 14:7–14. https://doi.org/10.3121/cmr.2016.1303

Koh D, Lim MK, Chia SE et al (2005a) Risk perception and impact of severe acute respiratory syndrome (SARS) on work and personal lives of healthcare workers in Singapore: what can we learn? Med Care 43:676–682. https://doi.org/10.1097/01.mlr.0000167181.36730.cc

Lai J, Ma S, Wang Y et al (2020) Factors associated with mental health outcomes among health care workers exposed to coronavirus disease 2019. JAMA Netw Open 3:e203976–e203976. https://doi.org/10.1001/jamanetworkopen.2020.3976

Lancee WJ, Maunder RG, Goldbloom DS (2008) Prevalence of psychiatric disorders among Toronto hospital workers one to two years after the SARS outbreak. Psychiatr Serv 59:91–95. https://doi.org/10.1176/ps.2008.59.1.91

Lee SM, Kang WS, Cho A-R et al (2018a) Psychological impact of the 2015 MERS outbreak on hospital workers and quarantined hemodialysis patients. Compr Psychiatry. https://doi.org/10.1016/j.comppsych.2018.10.003

Lee S-H, Juang Y-Y, Su Y-J et al (2005) Facing SARS: Psychological impacts on SARS team nurses and psychiatric services in a Taiwan general hospital. Gen Hosp Psychiatry 27:352–358. https://doi.org/10.1016/j.genhosppsych.2005.04.007

Lehmann M, Bruenahl CA, Addo MM et al (2016) Acute Ebola virus disease patient treatment and health-related quality of life in health care professionals: a controlled study. J Psychosom Res 83:69–74. https://doi.org/10.1016/j.jpsychores.2015.09.002

Li L, Wan C, Ding R et al (2015) Mental distress among Liberian medical staff working at the China Ebola Treatment Unit: a cross sectional study. Health Qual Life Outcomes 13:156. https://doi.org/10.1186/s12955-015-0341-2

Li Z, Ge J, Yang M et al (2020) Vicarious traumatization in the general public, members, and non-members of medical teams aiding in COVID-19 control. Brain Behav Immun. https://doi.org/10.1016/j.bbi.2020.03.007

Liang Y, Chen M, Zheng X, Liu J (2020) Screening for Chinese medical staff mental health by SDS and SAS during the outbreak of COVID-19. J Psychosom Res 133:110102. https://doi.org/10.1016/j.jpsychores.2020.110102

Lin C-Y, Peng Y-C, Wu Y-H et al (2007) The psychological effect of severe acute respiratory syndrome on emergency department staff. Emerg Med J 24:12–17. https://doi.org/10.1136/emj.2006.035089

Liu C, Yang Y, Zhang XM, et al (2020) The prevalence and influencing factors for anxiety in medical workers fighting COVID-19 in China: a cross-sectional survey. medRxiv. https://doi.org/10.1101/2020.03.05.20032003

Liu X, Kakade M, Fuller CJ et al (2012) Depression after exposure to stressful events: lessons learned from the severe acute respiratory syndrome epidemic. Compr Psychiatry 53:15–23. https://doi.org/10.1016/j.comppsych.2011.02.003

Liu Z, Han B, Jiang R et al (2020) Mental Health Status of Doctors and Nurses During COVID-19 Epidemic in China. Social Science Research Network, Rochester

Lung F-W, Lu Y-C, Chang Y-Y, Shu B-C (2009) Mental symptoms in different health professionals during the SARS attack: a follow-up study. Psychiatr Q 80:107–116. https://doi.org/10.1007/s11126-009-9095-5

Marjanovic Z, Greenglass ER, Coffey S (2007) The relevance of psychosocial variables and working conditions in predicting nurses’ coping strategies during the SARS crisis: an online questionnaire survey. Int J Nurs Stud 44:991–998. https://doi.org/10.1016/j.ijnurstu.2006.02.012

Matsuishi K, Kawazoe A, Imai H et al (2012) Psychological impact of the pandemic (H1N1) 2009 on general hospital workers in Kobe. Psychiatry Clin Neurosci 66:353–360. https://doi.org/10.1111/j.1440-1819.2012.02336.x

Maunder RG, Lancee WJ, Balderson KE et al (2006) Long-term psychological and occupational effects of providing hospital healthcare during SARS outbreak. Emerg Infect Dis 12:1924–1932. https://doi.org/10.3201/eid1212.060584

McAlonan GM, Lee AM, Cheung V et al (2007) Immediate and sustained psychological impact of an emerging infectious disease outbreak on health care workers. Can J Psychiatry. https://doi.org/10.1177/070674370705200406

Nickell LA, Crighton EJ, Tracy CS et al (2004) Psychosocial effects of SARS on hospital staff: survey of a large tertiary care institution. CMAJ 170:793–798. https://doi.org/10.1503/cmaj.1031077

Oh N, Hong N, Ryu DH et al (2017) Exploring nursing intention, stress, and professionalism in response to infectious disease emergencies: the experience of local public hospital nurses during the 2015 MERS Outbreak in South Korea. Asian Nurs Res 11:230–236. https://doi.org/10.1016/j.anr.2017.08.005

Park J-S, Lee E-H, Park N-R, Choi YH (2018) Mental health of nurses working at a government-designated hospital during a MERS-CoV outbreak: a cross-sectional study. Arch Psychiatr Nurs 32:2–6. https://doi.org/10.1016/j.apnu.2017.09.006

Phua DH, Tang HK, Tham KY (2005) Coping responses of emergency physicians and nurses to the 2003 severe acute respiratory syndrome outbreak. Acad Emerg Med 12:322–328. https://doi.org/10.1197/j.aem.2004.11.015

Poon E, Liu KS, Cheong DL et al (2004) Impact of severe respiratory syndrome on anxiety levels of front-line health care workers. Hong Kong Med J 10:325–330

Qi J, Xu J, Li B, et al (2020) The Evaluation of Sleep Disturbances for Chinese Frontline Medical Workers under the Outbreak of COVID-19. medRxiv. https://doi.org/10.1101/2020.03.06.20031278

Sim K, Chong PN, Chan YH, Soon WSW (2004) Severe acute respiratory syndrome-related psychiatric and posttraumatic morbidities and coping responses in medical staff within a primary health care setting in Singapore. J Clin Psychiatry 65:1120–1127. https://doi.org/10.4088/JCP.v65n0815

Styra R, Hawryluck L, Robinson S et al (2008) Impact on health care workers employed in high-risk areas during the Toronto SARS outbreak. J Psychosom Res 64:177–183. https://doi.org/10.1016/j.jpsychores.2007.07.015

Su T-P, Lien T-C, Yang C-Y et al (2007) Prevalence of psychiatric morbidity and psychological adaptation of the nurses in a structured SARS caring unit during outbreak: a prospective and periodic assessment study in Taiwan. J Psychiatr Res 41:119–130. https://doi.org/10.1016/j.jpsychires.2005.12.006

Sun N, Xing J, Xu J, et al (2020) Study of the mental health status of medical personnel dealing with new coronavirus pneumonia. medRxiv. https://doi.org/10.1101/2020.03.04.20030973

Tam CWC, Pang EPF, Lam LCW, Chiu HFK (2004) Severe acute respiratory syndrome (SARS) in Hong Kong in 2003: stress and psychological impact among frontline healthcare workers. Psychol Med 34:1197–1204. https://doi.org/10.1017/S0033291704002247

Tan BYQ, Chew NWS, Lee GKH et al (2020) Psychological impact of the COVID-19 pandemic on health care workers in Singapore. Ann Intern Med. https://doi.org/10.7326/M20-1083

Tham K-Y, Tan Y, Loh O et al (2004) Psychiatric morbidity among emergency department doctors and nurses after the SARS outbreak. Ann Acad Med Singapore 33:S78–S79. https://doi.org/10.1177/102490790501200404

von Strauss E, Paillard-Borg S, Holmgren J, Saaristo P (2017) Global nursing in an Ebola viral haemorrhagic fever outbreak: before, during and after deployment. Glob Health Action 10:1371427. https://doi.org/10.1080/16549716.2017.1371427

Waterman S, Hunter ECM, Cole CL et al (2018) Training peers to treat Ebola centre workers with anxiety and depression in Sierra Leone. Int J Soc Psychiatry 64:156–165. https://doi.org/10.1177/0020764017752021

Wong TW, Yau JKY, Chan CLW et al (2005) The psychological impact of severe acute respiratory syndrome outbreak on healthcare workers in emergency departments and how they cope. Eur J Emerg Med 12:13–18

Wong WCW, Lee A, Tsang KK, Wong SYS (2004) How did general practitioners protect themselves, their family, and staff during the SARS epidemic in Hong Kong? J Epidemiol Community Health 58:180–185. https://doi.org/10.1136/jech.2003.015594

Wu P, Liu X, Fang Y et al (2008) Alcohol abuse/dependence symptoms among hospital employees exposed to a SARS outbreak. Alcohol Alcohol 43:706–712. https://doi.org/10.1093/alcalc/agn073

Wu P, Fang Y, Guan Z et al (2009) The psychological impact of the SARS epidemic on hospital employees in China: exposure, risk perception, and altruistic acceptance of risk. Can J Psychiatry 54:302–311

Xiao H, Zhang Y, Kong D et al (2020) The Effects of Social Support on Sleep Quality of Medical Staff Treating Patients with Coronavirus Disease 2019 (COVID-19) in January and February 2020 in China. Med Sci Monit 26:e923549-1–e923549-8. https://doi.org/10.12659/MSM.923549

Xu J, Xu Q, Wang C, Wang J (2020) Psychological status of surgical staff during the COVID-19 outbreak. Psychiatry Res. https://doi.org/10.1016/j.psychres.2020.112955

Zhang W, Wang K, Yin L et al (2020) Mental health and psychosocial problems of medical health workers during the COVID-19 epidemic in China. Psychother Psychosom. https://doi.org/10.1159/000507639

Zhu Z, Xu S, Wang H, et al (2020) COVID-19 in Wuhan: Immediate Psychological Impact on 5062 Health Workers. medRxiv. https://doi.org/10.1101/2020.02.20.20025338

Chiang H-H, Chen M-B, Sue I-L (2007) Self-state of nurses in caring for SARS survivors. Nurs Ethics 14:18–26. https://doi.org/10.1177/0969733007071353

Chung BPM, Wong TKS, Suen ESB, Chung JWY (2005) SARS: caring for patients in Hong Kong. J Clin Nurs 14:510–517. https://doi.org/10.1111/j.1365-2702.2004.01072.x

Cunningham T, Rosenthal D, Catallozzi M (2017) Narrative medicine practices as a potential therapeutic tool used by expatriate Ebola caregivers. Intervention 15:106–119

Hewlett BL, Hewlett BS (2005) Providing care and facing death: nursing during Ebola outbreaks in Central Africa. J Transcult Nurs 16:289–297. https://doi.org/10.1177/1043659605278935

McMahon SA, Ho LS, Brown H et al (2016) Healthcare providers on the frontlines: a qualitative investigation of the social and emotional impact of delivering health services during Sierra Leone’s Ebola epidemic. Health Policy Plan 31:1232–1239. https://doi.org/10.1093/heapol/czw055

Meyer D, Kirk Sell T, Schoch-Spana M et al (2018) Lessons from the domestic Ebola response: Improving health care system resilience to high consequence infectious diseases. Am J Infect Control 46:533–537. https://doi.org/10.1016/j.ajic.2017.11.001

Raven J, Wurie H, Witter S (2018) Health workers’ experiences of coping with the Ebola epidemic in Sierra Leone’s health system: a qualitative study. BMC Health Serv Res 18:251. https://doi.org/10.1186/s12913-018-3072-3

Shih F-J, Turale S, Lin Y-S et al (2009) Surviving a life-threatening crisis: Taiwan’s nurse leaders’ reflections and difficulties fighting the SARS epidemic. J Clin Nurs 18:3391–3400. https://doi.org/10.1111/j.1365-2702.2008.02521.x

Smith MW, Smith PW, Kratochvil CJ, Schwedhelm S (2017) The psychosocial challenges of caring for patients with Ebola virus disease. Health Secur 15:104–109. https://doi.org/10.1089/hs.2016.0068

Wong ELY, Wong SYS, Lee N et al (2012) Healthcare workers’ duty concerns of working in the isolation ward during the novel H1N1 pandemic. J Clin Nurs 21:1466–1475. https://doi.org/10.1111/j.1365-2702.2011.03783.x

Ioannidis JPA, Trikalinos TA (2007) The appropriateness of asymmetry tests for publication bias in meta-analyses: a large survey. CMAJ 176:1091–1096. https://doi.org/10.1503/cmaj.060410

Liu C, Yang Y, Zhang XM, et al (2020) The prevalence and influencing factors for anxiety in medical workers fighting COVID-19 in China: A cross-sectional survey. Psychiatry and Clinical Psychology

Robertson E, Hershenfield K, Grace SL, Stewart DE (2004) The psychosocial effects of being quarantined following exposure to SARS: a qualitative study of Toronto health care workers. Can J Psychiatry 49:403–407. https://doi.org/10.1177/070674370404900612

Lee SM, Kang WS, Cho AR et al (2018b) Psychological impact of the 2015 MERS outbreak on hospital workers and quarantined hemodialysis patients. Compr Psychiatry 87:123–127. https://doi.org/10.1016/j.comppsych.2018.10.003

Khee KS, Lee LB, Chai OT, et al (2004) The psychological impact of SARS on health care providers. Critical Care and Shock 100–106

Koh D, Lim MK, Chia SE et al (2005b) Risk perception and impact of severe acute respiratory syndrome (SARS) on work and personal lives of healthcare workers in Singapore: what can we learn? Med Care 43:676–682

Chen C-S, Wu H-Y, Yang P, Yen C-F (2005) Psychological distress of nurses in Taiwan who worked during the outbreak of SARS. Psychiatr Serv 56:76–79. https://doi.org/10.1176/appi.ps.56.1.76

Creamer M, Bell R, Failla S (2003) Psychometric properties of the impact of event scale—revised. Behav Res Ther 41:1489–1496. https://doi.org/10.1016/j.brat.2003.07.010

Morina N, Ehring T, Priebe S (2013) Diagnostic utility of the impact of event scale-revised in two samples of survivors of war. PLoS ONE 8:e83916. https://doi.org/10.1371/journal.pone.0083916

Naumann DN, McLaughlin A, Thompson CV, et al (2017) Acute stress and frontline healthcare providers. J Paramed Pract 9:516–521. https://doi.org/10.12968/jpar.2017.9.12.516

Thompson CV, Naumann DN, Fellows JL et al (2017) Post-traumatic stress disorder amongst surgical trainees: an unrecognised risk? Surgeon 15:123–130. https://doi.org/10.1016/j.surge.2015.09.002

McFarland DC, Roth A (2017) Resilience of internal medicine house staff and its association with distress and empathy in an oncology setting. Psycho-Oncol 26:1519–1525. https://doi.org/10.1002/pon.4165

Bonanno GA, Brewin CR, Kaniasty K, La Greca AM (2010) Weighing the costs of disaster: consequences, risks, and resilience in individuals, families, and communities. Psychol Sci Public Interest 11:1–49. https://doi.org/10.1177/1529100610387086

Brooks SK, Rubin GJ, Greenberg N (2019) Traumatic stress within disaster-exposed occupations: overview of the literature and suggestions for the management of traumatic stress in the workplace. Br Med Bull 129:25–34. https://doi.org/10.1093/bmb/ldy040

Brooks SK, Dunn R, Amlôt R et al (2018) Training and post-disaster interventions for the psychological impacts on disaster-exposed employees: a systematic review. J Mental Health. https://doi.org/10.1080/09638237.2018.1437610

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Bell, V., Wade, D. Mental health of clinical staff working in high-risk epidemic and pandemic health emergencies: a rapid review of the evidence and living meta-analysis. Soc Psychiatry Psychiatr Epidemiol 56, 1–11 (2021). https://doi.org/10.1007/s00127-020-01990-x

DOI: https://doi.org/10.1007/s00127-020-01990-x