Jordanian University Instructors’ Practices and Perceptions of Online Testing in the COVID-19 Era

- Department of English Language and Translation, Applied Science Private University, Amman, Jordan

It is widely known that exceptional circumstances inevitably call for the use of matching procedures. As there has been a change in face-to-face teaching methods, there have also been parallel changes in student evaluation and assessment plans or strategies during the COVID-19 era. This study investigates how COVID-19 affected online testing in higher education institutions in Jordan. For this purpose, the researchers developed a five-construct Likert-type questionnaire with 20 items and distributed it to a sample of 426 university instructors. The constructs were the internet and technology, technical and logistic issues, types of questions, test design, and students’ awareness. The results showed that the Internet and technology are essential to guarantee the successful performance of online testing. The study also showed that this type of testing affected the test design and types of questions in a way to eliminate or at least reduce the spread of online cheating. The study recommends that higher education institutions provide instructors with on-the-job training, not only in e-learning techniques and procedures but also in preparing and conducting online exams.

Introduction

The COVID-19 pandemic hit hard in various sectors such as health, economy, and education (Al-Salman and Haider, 2021a,b). The impact on the educational sector was most noticeable worldwide with the closure of schools and universities (Haider and Al-Salman, 2020; Almahasees et al., 2021; Alqudah et al., 2021; Haider and Al-Salman, 2021; Al-Salman et al., 2022). This has affected students and teachers alike and resulted in a change in the teaching–learning methodology and the evaluation process. In Jordan, the government attempted to respond to the COVID-19 emergency by taking different measures in various spheres of life, including education.

Guangul et al. (2020) affirmed, in the aftermath of the crisis, that colleges and higher education institutions have faced challenges in their activities, particularly in online assessment. This has generated problems for universities and colleges that were not adequately prepared for online pedagogy and assessment. A similar argument is put forward by Oyedotun (2020), who argued that the unexpected switch to online teaching in developing countries has resulted in disparities, difficulties, and some advantages.

It is widely known that exceptional circumstances inevitably call for the use of matching procedures. As there has been a change in face-to-face teaching methods, there have also been parallel changes in student evaluation and assessment plans or strategies during the COVID-19 era. This required the use of online assessment and test instruments that are different from those used prior to the emergence of the coronavirus. Performing online tests and examinations in the COVID-19 period required two types of skills and knowledge on the part of the teacher. The first is related to test content and forms, such as choosing and developing suitable assessment methods, administering the test, and scoring the results, among other things. The second, according to Hendricks and Bailey (2014), involves technological knowledge, some of which may be necessary for teachers and students. Such technological knowledge includes typing, platforms such as Microsoft Teams and Blackboard, presentation skills, search engines, the know-how of software downloading from the Web, scanner knowledge, deep web knowledge, computer security knowledge, and interaction with online assessment. Fortunately, most higher education institutions and even schools took up the challenge and provided teachers with the know-how and required internet technology.

This study examines the state of the art of online testing in this critical period by surveying Jordanian university instructors’ modes of testing in the COVID-19 era.

Literature Review

E-learning is double-edged as it has both merits and shortcomings. The advantages of e-learning involve the sustainability of the educational process and the availability of educational content for students. E-learning, however, has its disadvantages: it lacks social interactivity, and the infrastructure in most third-world educational institutes and universities is unprepared not only for online teaching but also for online testing. This might not be the case in developed and industrial countries, where online teaching infrastructure is abundantly available in a way that enables them to cope with the emergency situation imposed by COVID-19.

One major stumbling block facing e-learning assessment in Jordan and perhaps elsewhere in the COVID-19 era is the phenomenon of internet cheating. This takes various forms, where weaker students contact others to obtain the correct answers by using the Internet, WhatsApp, text messaging, or voice mail. Some students scan the exam questions and send them directly to the cheating collaborator to get the correct answers either free of charge or in exchange for some money. Some critics of the Internet testing process view it as “the blessings of distance education” and therefore call against internet teaching and internet testing. What makes the situation even worse is the tendency of some individuals or educational organizations and training centers inside and outside Jordan to offer university students help in online exams and tests in exchange for a paid fee prior to the examination date.

Rahim (2020) conducted a study to assist the faculty members in designing online assessments. For this purpose, Rahim identified nine common themes. The results pointed out that cheating is a concern, especially in summative evaluation in professional courses such as medicine, and that the evidence on cheating in online assessment is inconclusive. Open-book tests were also recommended, and the study advised medical schools to balance the use of open-book tests with other types of tests. To reduce cheating, the author suggested technical strategies that include randomizing questions, shuffling the order of questions and options, limiting the number of attempts, and putting a constraint on the time for tests.

Montenegro-Rueda et al. (2021) examined the impact of evaluation during the COVID-19 pandemic. The study used the methodology suggested in the PRISMA statement and consisted of 13 studies selected from 51 studies. The results indicated that the faculty and students had faced many difficulties shifting to a remote teaching environment. Regarding the faculty, the main problem was training faculty in the online evaluation and assessment methods. In addition, dishonesty and misconduct were reported in students’ online assessments.

Senel and Senel (2021) attempted to identify the common assessment approaches used in the COVID-19 era and how students perceived the advantages and disadvantages associated with the evaluation quality. To collect the data, the researchers used a survey questionnaire and distributed it to 486 participants from 61 universities in Turkey. The results showed that assignments were the most frequently used tools. In addition, the students were satisfied with the quality of the assessment, especially the online testing.

Meccawy et al. (2021) conducted a study to examine the faculty and students’ perceptions of online evaluation most commonly used during the COVID-19 era due to some constraints such as distancing and closures of academic institutions. The primary result suggests a multi-model approach to eliminate cheating and plagiarism through enhancing students’ awareness and ethics. In addition, the study recommends empowering teachers to detect methods of cheating.

In their study, Almossa and Alzahrani (2022) attempted to upgrade faculty members’ knowledge of various evaluation practices. For this purpose, faculty members in Saudi universities were asked to complete the Approaches to Classroom Inventory survey. The findings showed that providing students with feedback, linking the assessment method to the objectives, using scoring guides, and revising the evaluation approaches were the most frequently used practices. In addition, although the researchers affirmed that faculty members from different schools and disciplines showed patterns of approval of assessment practices, they showed differences in their preferences and needs for educational assessment.

Although it is well-known that online teaching and testing were fairly common before the COVID-19 pandemic, especially in the developed countries, they were not so common in less developed countries. Teaching is constantly responding to the needs and conditions of the digital generation; still, a large portion of instructors use the same outmoded paper-and-pencil manner (Frankl and Bitter, 2012). However, the emergency situation caused by the Coronavirus pandemic shifted the traditional method of testing to remote testing (Mohmmed et al., 2020). This raised some challenges for teachers around the world.

Ilgaz and Adanır (2020) showed the differences between traditional exams and online exams. They highlighted the positive attitudes that some students have toward online exams compared to others who complained about some technical and technological difficulties that posed a challenge to them. In line with this, Clark et al. (2020) stated that the technological challenges constituted a significant obstacle for students. The researchers recommended that educational organizations balance online exams and traditional exams by training students to use technology platforms and warning them about conformity detection devices to detect cheating in exams. Similarly, Hendricks and Bailey (2014, p. 1) stated that educational institutions must provide “students and faculty with the necessary technical skills and training to be successful in the online classroom environment.” Michael and Williams (2013) have affirmed that with the expansion of the electronic base and distance education, the faculty members’ challenges and obstacles increased. This prompted them to think of new tactics to prevent cheating and fraud. So the researchers presented a set of ideas to spread awareness of a culture of honesty among both students and teachers, stressing the importance of the prevention aspect.

Chao et al. (2012) examined the criteria for different online synchronous appraisal methods and affirmed the phenomenon of student online cheating and a shortage of technical resources. In this regard, Rogers (2006) stated the concerns raised about the lack of academic honesty and online fraud among students. The research sample included members of the faculty who were asked to list the concerns they had about the etiquette of taking exams online. So each participant was asked about the precautionary measures taken in this regard. The results showed that despite the constant concern of teachers, the majority of them do not take any measures to prevent online cheating and fraud.

One of the advantages of online testing is highlighted by Schmidt et al. (2009), who confirmed the online exams’ superiority over the traditional ones for teachers and students alike. This is attributed to the availability of self-correcting technology for the exam, which shows results for students as soon as they finish the exam. Shraim (2019) examined learners’ perceptions of the relative advantages of online examination practices. The instrument for this research was an online questionnaire that was distributed to 342 undergraduate students. The results showed that online exams were rated higher than traditional examinations. The study also reported a myriad of challenges to conducting online tests successfully, relating to variables such as security, validity, and fairness.

Methodology

Research Tool

This research made use of a questionnaire to elicit university instructors’ viewpoints and attitudes to online testing. Before developing the questionnaire, the researchers examined different studies designed for similar purposes (George, 2020; Lassoued et al., 2020; Bilen and Matros, 2021; Kharbat and Abu Daabes, 2021; Maison et al., 2021). The questionnaire was sent to a jury of three education experts for their feedback. Subsequently, the researchers modified the questionnaire in line with the jury’s comments before administering it. The questionnaire consisted of 20 items and five constructs, namely, the internet and technology, technical and logistic issues, types of questions, test designing, and students’ awareness.

Respondents

The research questionnaire was distributed to a random sample of 500 university instructors in different faculties from four different Jordanian universities, two public and two private, but only 426 instructors fully completed all items of the questionnaire. The response rate was 85.2%. The male respondents were 266 (62.4%), while the females were 160 (37.6%).

Questionnaire Reliability

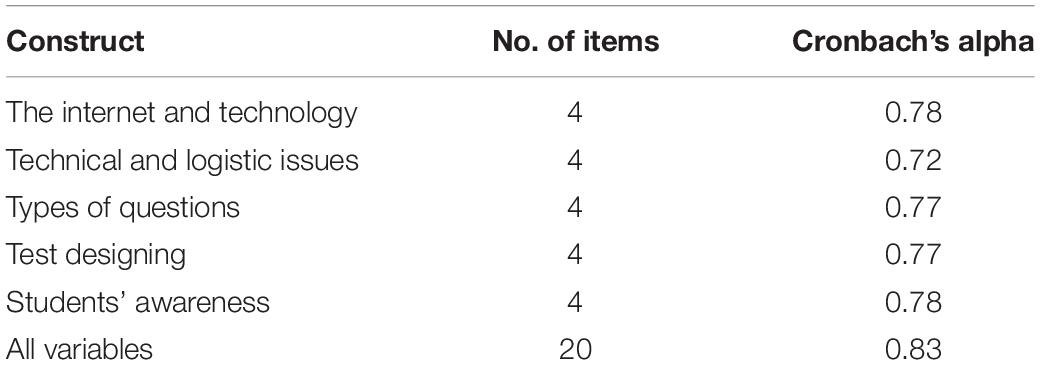

To make sure that the 20 statements are clear and understandable, reliability analysis was conducted and correlation coefficients were computed. A Cronbach’s alpha test on a sample of 50 respondents was used to validate the reliability of the research instrument (Cronbach, 1951). Table 1 shows the results for the 20 statements of the questionnaire and how closely they are related in the five constructs.

Table 1 indicates a high level of reliability and reflects a relatively high internal consistency. It is noteworthy that a reliability coefficient of 0.70 or higher is considered “acceptable” in social science research (Nunnally, 1978).
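As an illustration of the reliability check described above, the following minimal sketch computes Cronbach’s alpha from a respondent-by-item score matrix. The function name `cronbach_alpha` and the small `sample` data set are hypothetical and are not the study’s data; the sketch assumes Likert responses have already been coded numerically.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of rows (one row of item scores per respondent)."""
    k = len(responses[0])                      # number of items in the construct
    items = list(zip(*responses))              # transpose: one tuple per item
    sum_item_vars = sum(variance(col) for col in items)
    total_var = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - sum_item_vars / total_var)


# Hypothetical scores: 5 respondents x 4 items, coded 1 (never) to 5 (always).
sample = [
    [5, 4, 5, 4],
    [3, 3, 4, 3],
    [4, 4, 4, 5],
    [2, 2, 3, 2],
    [5, 5, 4, 5],
]

alpha = cronbach_alpha(sample)
acceptable = alpha >= 0.70   # threshold cited from Nunnally (1978)
```

In practice the function would be applied once per construct, using only the items that belong to that construct.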

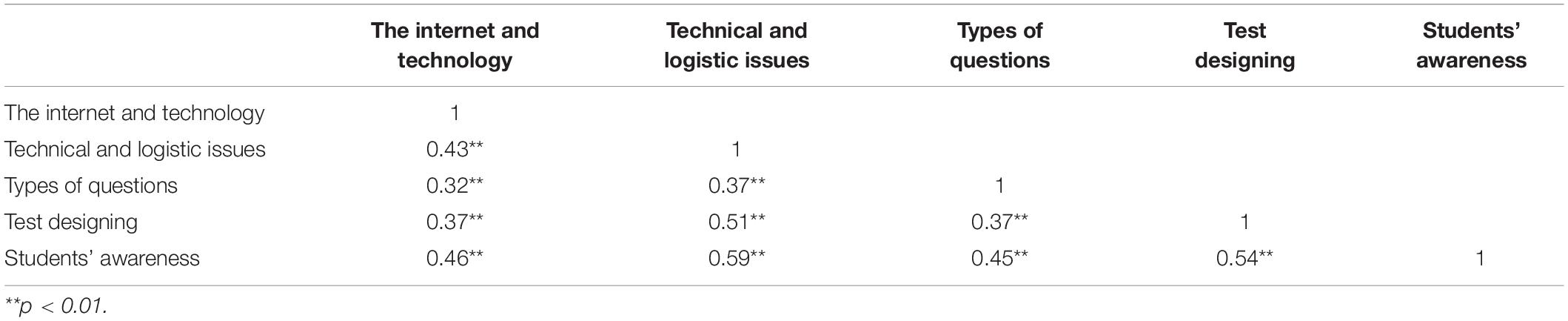

Based on the results above, Pearson correlation analysis was conducted, as Table 2 shows.

Table 2 shows the Pearson Correlation Matrix between the five constructs. The results indicate that there are statistically significant relationships between the five constructs, and the strongest relationship was between the two constructs (Technical and Logistic Issues and Students’ Awareness) with a value of 0.59, followed by the constructs of Test Designing and Students’ Awareness with Pearson correlation value of 0.54. This indicates a very acceptable level of shared variance (Kendal and Stuart, 1973).
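The shared variance between two constructs is the square of their Pearson correlation. The sketch below, offered only as an illustration, defines a plain Pearson function and squares the two coefficients reported in the text; the dictionary keys are shorthand labels introduced here, and the underlying subscale scores are not reproduced.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    sd_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (sd_x * sd_y)


# Coefficients as reported in the text for Table 2.
reported = {
    "technical_logistic vs awareness": 0.59,
    "test_design vs awareness": 0.54,
}

# Shared variance (r squared) for each reported pair.
shared_variance = {pair: r ** 2 for pair, r in reported.items()}
```

Under this reading, a correlation of 0.59 corresponds to roughly 35% shared variance and 0.54 to roughly 29%.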

Data Analysis

As discussed above, a five-construct Likert-type questionnaire was developed. After receiving the instructors’ responses, the researchers calculated the percentages for the five response options, namely “always,” “often,” “sometimes,” “rarely,” and “never.” In analyzing the data, “always” and “often” were treated as one unit, as were “rarely” and “never.” We also calculated the subscale means for each construct and ran a multiple linear regression and simple linear regressions.
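The collapsing of response options described above can be sketched as follows. This is a minimal illustration rather than the authors’ actual procedure: the names `COLLAPSE` and `collapsed_percentages` and the 20 responses in `item_responses` are hypothetical.

```python
from collections import Counter

# Map the five response options onto the three reporting categories.
COLLAPSE = {
    "always": "always/often",
    "often": "always/often",
    "sometimes": "sometimes",
    "rarely": "rarely/never",
    "never": "rarely/never",
}


def collapsed_percentages(responses):
    """Return the percentage of responses falling in each collapsed category."""
    counts = Counter(COLLAPSE[r] for r in responses)
    n = len(responses)
    categories = ("always/often", "sometimes", "rarely/never")
    return {cat: round(100 * counts[cat] / n, 1) for cat in categories}


# Hypothetical responses to a single questionnaire item (20 respondents).
item_responses = (
    ["always"] * 5 + ["often"] * 3 + ["sometimes"] * 2
    + ["rarely"] * 6 + ["never"] * 4
)

result = collapsed_percentages(item_responses)
```

Reporting the merged categories keeps the item-level discussion compact, at the cost of hiding the split between, for example, “rarely” and “never.”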

The study aims to unveil the university instructors’ viewpoints and attitudes to online testing. It attempts to examine the Internet and technology with regard to the availability of a good internet connection and the possibility that questions cannot be answered through browsing the Internet. It identifies the technical and logistic issues related to online testing, such as the use of laptops and cameras during the test. It aims to investigate the types of questions used in online exams, such as open-ended and multiple-choice questions. Furthermore, it aims to define online test design with regard to form, instruction, and duration. Finally, it explores techniques used by instructors to raise students’ awareness as to the nature of online tests.

Analysis and Discussion

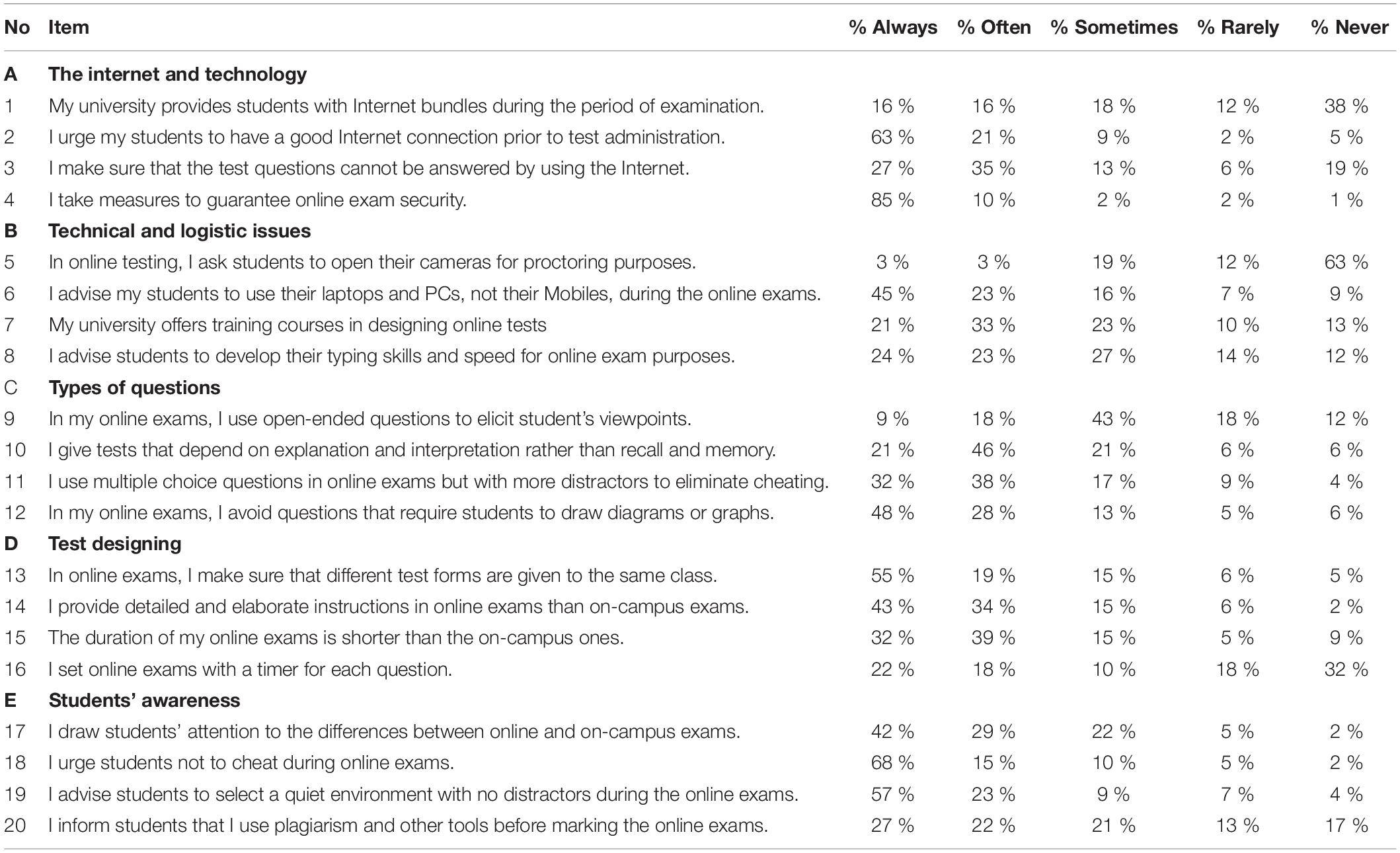

The researchers examined the responses of the instructors, as shown in Table 3. The “%” columns provide the percentages of the instructors’ responses.

Items 1 through 4 of the questionnaire aimed to collect data about the Internet and technology. Fifty percent of the respondents confirmed that their universities “never” or “rarely” provide students with an internet connection during the examination period. Eighty-four percent of the faculty members “always” or “often” encourage students to have a good Internet connection before the online test. Sixty-two percent of the instructors “always” or “often” make sure that students cannot cheat by using search engines. Ninety-five percent of the instructors affirmed that they “always” or “often” take precautionary measures to guarantee online exam security by adhering to the instructions of the IT Department, which include using a secure browser, IP-based authentication and authorization, and data encryption. This is not in line with Rogers (2006), who argued that most teachers do not take security measures to prevent online cheating and fraud.

Items 5 through 8 aimed to collect data about the technical and logistic issues related to online testing. Seventy-five percent of the instructors confirmed that they have “never” or “rarely” asked students to open their cameras during the exam, whereas only 6% asked students to do so. This could be attributed to the instructors’ heeding of students’ privacy in a conservative society like the Jordanian one, where students usually share a small accommodation with numerous family members. In addition, there may be an overload on the Internet when 60 or 70 students in the same class are required to open their cameras. Furthermore, external proctoring does not seem to apply in this context. Again, this is in line with Rogers (2006), who maintained that most teachers, despite their concerns, do not take the necessary measures to stop cheating in their online exams. Sixty-eight percent of the respondents “always” or “often” advise their students to use their laptops and PCs, not their mobiles, during the online exams. This is consistent with Ilgaz and Adanır (2020), who stated that students should use the proper means to avoid technical and technological difficulties that might be problematic and challenging. Fifty-four percent of the faculty members affirmed that their universities “always” or “often” offer training courses in online test design, whereas 23% said that their universities “rarely” or “never” offer this service. This is in agreement with Clark et al. (2020), who affirmed that educational institutions should offer students training on how to use the platforms during exams. This can also apply to training teachers on the use of these platforms. Forty-seven percent of the faculty members “always” or “often” advise students to develop their typing skills and speed as a substantial requirement for online exam purposes. Developing typing skills is believed to be crucial for students who engage in online testing, especially in the humanities, but this does not exclude students in mathematics and the natural sciences, who need to be aware of the vast number of symbols along with their meanings.

Items 9 through 12 aimed to collect data about the types of questions used in online exams. Thirty percent of the faculty stated that they “rarely” or “never” use open-ended questions to elicit students’ viewpoints. Such types of questions seem not to be appropriate for scientific exams and can sometimes be difficult to link to a marking scheme. Open-ended questions, unlike multiple-choice questions, do not have a fixed response and generally require a longer response in the form of an essay or paragraph. Sixty-seven percent of the instructors “always” or “often” give tests that elicit explanation rather than recall. This is a way to make students engage with long answers rather than multiple-choice questions. Concerning multiple-choice questions, the data showed that 70% of the respondents “always” or “often” use them but with more distractors to reduce cheating. In their online exams, 76% of the instructors confirmed that they avoid questions that require students to draw diagrams or graphs. Although teaching is constantly adapting to the needs and conditions of digital natives, testing has always been done in the same outmoded paper-and-pencil manner (Frankl and Bitter, 2012). However, the emergency situation caused by the Coronavirus pandemic shifted the traditional method of testing to remote testing (Mohmmed et al., 2020). This raised some challenges for teachers worldwide and motivated them to change their teaching and evaluation methods.

Items 13 through 16 aimed to collect data about test design. Seventy-four percent of the respondents “always” make sure that different test forms are given to the same class in their online exams. Seventy-seven percent of the instructors stated that they “always” or “often” provide more detailed and elaborate instructions in online than on-campus exams. To have successful online exams with the minimum degree of cheating, 71% of the faculty members asserted that they “always” or “often” make the duration of their online exams shorter than the on-campus ones. Some instructors believe that shorter durations of the exams may reduce cheating as it will not give students plenty of time to consult other resources. This notion is controversial and cannot be easily confirmed or disproved. The shorter duration could be due to the different test forms used in online testing when compared to face-to-face on-campus exams.

Further, 50% of the instructors “rarely” or “never” set online exams with a timer for each question. Michael and Williams (2013) have affirmed that with the expansion of the electronic base and distance education, the faculty members’ challenges and obstacles increase. This prompted them to think of new tactics to prevent cheating and fraud.

Items 17 through 20 aimed to collect data on the instructors’ means of raising students’ awareness. Seventy-one percent of the respondents confirmed that they “always” or “often” draw students’ attention to the differences between online and on-campus exams. Likewise, 83% of the instructors “always” or “often” urge students not to cheat during the online exams. In Item 19, most instructors urge students to take the online exam in a quiet environment with no distractions, as this might help them focus more on the test questions. To reduce or eliminate plagiarism, almost half of the instructors stated that they informed students that they use plagiarism-detection and other tools to check for cheating before marking the online exams.

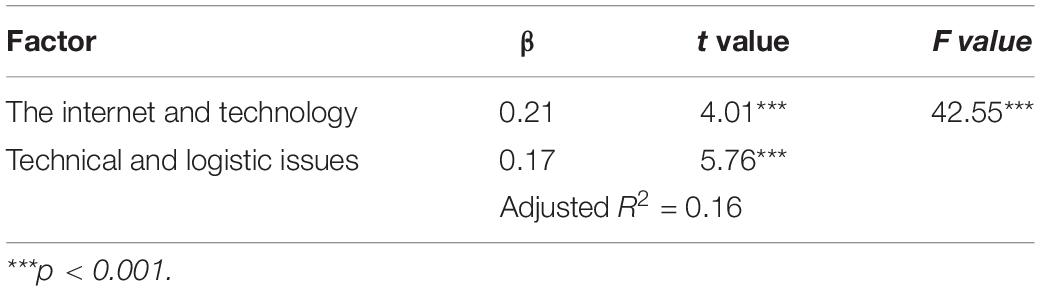

To test whether technology and technical aspects have enhanced online testing methods and procedures, we have calculated the subscale means for each construct and run a multiple linear regression as shown in Table 4.

Table 4 shows that the adjusted coefficient of determination (adjusted R²) was equal to 0.164, which means that 16.4% of the variation in online testing methods and procedures can be explained by the variation in the use of technology. Moreover, the significance of the F statistic was below 0.001. This means that the use of technology has significantly enhanced online testing methods and procedures. The two constructs (the Internet and technology, and technical and logistic issues) are therefore significant predictors of enhanced online testing methods and procedures, since their t values were significant at the 0.001 level. Further, the Beta values showed that the Internet and technology construct has the strongest effect on online testing methods and procedures.
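For readers who want to see how an adjusted R² relates to the unadjusted one, the standard adjustment can be sketched as below. The inputs are assumptions made only for illustration: the unadjusted value of 0.168 is hypothetical (it is not reported in the text), and the sketch assumes all 426 respondents and two predictor constructs entered the model.

```python
def adjusted_r2(r2, n, p):
    """Adjusted R^2 for a model with p predictors fitted on n observations."""
    return 1 - (1 - r2) * (n - 1) / (n - p - 1)


# Hypothetical unadjusted R^2; n and p are assumptions, not reported model details.
example = adjusted_r2(r2=0.168, n=426, p=2)
```

With a sample of this size and only two predictors, the adjustment is small, so the adjusted and unadjusted values differ only in the third decimal place.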

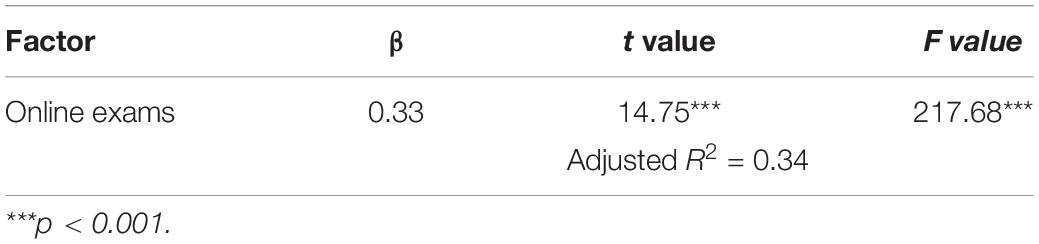

To test if there is a significant impact of online exams on test designing and types of questions, we conducted a regression analysis as shown in Table 5.

Table 5 shows that online exams can explain 33.8% of the question types and test design variation and significantly predict the dependent variable. The t value (14.75) was significant at 0.001 level, which means that online exams significantly impacted question types and test design, where instructors tend to give more test forms, detailed instructions, and limited time.
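In a simple linear regression with one predictor, R² can be recovered from the slope’s t value because F equals t², so the two figures reported above can be checked against each other. The sketch below is a hedged consistency check, not a reanalysis; it assumes that all 426 respondents were included, which the text does not state explicitly.

```python
def r2_from_t(t, n):
    """R^2 implied by the slope's t value in a one-predictor regression."""
    df_resid = n - 2                      # residual degrees of freedom
    return t ** 2 / (t ** 2 + df_resid)


def adjusted_r2(r2, n, p=1):
    """Adjusted R^2 for p predictors and n observations."""
    return 1 - (1 - r2) * (n - 1) / (n - p - 1)


r2 = r2_from_t(t=14.75, n=426)            # assumed n = 426
adj = adjusted_r2(r2, n=426)
```

Under these assumptions the implied R² is about 0.339 and the adjusted value about 0.338, which agrees with the 33.8% reported for Table 5.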

Concluding Remarks

A five-construct questionnaire was developed to collect data from 426 faculty members in Jordan. These constructs were the internet and technology, technical and logistic issues, types of questions, test design, and students’ awareness. The results showed that the Internet and technology are essential to guarantee the successful performance of the online test. Universities should, therefore, provide students with Internet bundles, and instructors should ensure that students have a good internet connection and guarantee test security. Technical and technological difficulties might be problematic and challenging for students. This is in line with Montenegro-Rueda et al. (2021), who emphasized that problems such as an internet disconnection, power outage, or family emergency could affect test-taking. Two-thirds of the faculty “never” asked students to open their cameras during the exam. This is at odds with Meccawy et al. (2021), who suggested a need for a multi-model approach to the problems of cheating and plagiarism. Concerning training courses in designing online tests, a slight majority of the faculty indicated that their universities offer such courses or workshops.

Concerning the question types, approximately two-thirds of the faculty used questions that elicit discussion and explanation, especially the ones that require lengthy answers, such as essay questions. This is a way to make students engage with long answers rather than multiple-choice questions, and such engagement is one way to deter the cheating that multiple-choice questions make easier. When using multiple-choice questions, faculty members increase the number of distractors to reduce cheating, although this may compromise the validity of the question (Jovanovska, 2018; Allanson and Notar, 2019), and they do not require students to draw diagrams or graphs. Concerning the test design, most instructors provided detailed instructions, used different test forms, and made the test duration shorter. These actions might reduce cheating and improve the chances of a successful test. Most instructors also paid attention to the role of raising awareness in successfully performing the exam, so they recommended that students take the exam in a quiet environment and informed them of the differences between the online and on-campus exams.

Based on the results of this study, the researchers recommend that higher education institutions provide instructors with on-the-job training, not only in e-learning techniques and procedures but also in conducting online exams. This ties in well with Montenegro-Rueda et al. (2021), who affirmed that instructors need to improve their training in techniques and methods for carrying out distance learning and testing. Decision-makers are advised to provide students who live in remote areas and villages with the Internet bundles necessary for e-learning and online testing. Instructors are encouraged to take the necessary steps to reduce online test cheating by writing tests that cannot be answered by using search engines such as Google and by asking students to open their cameras during the test period.

Instructors are also urged to ask essay questions and avoid multiple-choice questions to eliminate online cheating or at least reduce it as much as possible. Instructors can also give different forms of the same test. Finally, the researchers feel that the university administration’s responsibility is to upgrade student computer skills such as keyboarding, word processing, and the proper use of e-learning platforms such as Microsoft Teams, Zoom, and Moodle for online learning and testing purposes.

This research was conducted in tertiary education institutions, and one wonders if similar results would be obtained if it were conducted on secondary or elementary school teachers. Another limitation stems from the relatively small sample size of faculty members who participated in this study. Finally, only one research instrument, i.e., a survey questionnaire, was used and was not supplemented by another instrument such as structured or unstructured interviewing.

Data Availability Statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

Ethics Statement

The ethical approval of this study was obtained from the Deanship of Scientific Research at the Applied Science Private University with the approval number FAS/2020-2021/245. Written informed consent was obtained from all subjects before the study was conducted.

Author Contributions

AH designed the experiment, analyzed the data, and wrote the manuscript. RH collected the data and wrote the manuscript. HS collected the data and edited the manuscript. All authors contributed to the article and approved the submitted version.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Allanson, P. E., and Notar, C. E. (2019). Writing multiple choice items that are reliable and valid. Am. Int. J. Humanit. Soc. Sci. 5, 1–9.

Almahasees, Z., Mohsen, K., and Omer, M. (2021). Faculty’s and students’ perceptions of online learning during COVID-19. Front. Educ. 6:638470. doi: 10.3389/feduc.2021.638470

Almossa, S. Y., and Alzahrani, S. M. (2022). Assessment practices in Saudi higher education during the COVID-19 pandemic. Humanit. Soc. Sci. Commun. 9:5.

Alqudah, I., Barakat, M., Muflih, S. M., and Alqudah, A. (2021). Undergraduates’ perceptions and attitudes towards online learning at Jordanian universities during COVID-19. Interact. Learn. Environ. doi: 10.1080/10494820.2021.2018617 [Epub ahead of print].

Al-Salman, S., and Haider, A. S. (2021a). Jordanian university students’ views on emergency online learning during COVID-19. Online Learn. 25, 286–302. doi: 10.24059/olj.v25i1.2470

Al-Salman, S., and Haider, A. S. (2021b). The representation of Covid-19 and China in Reuters’ and Xinhua’s headlines. Search 13, 93–110.

Al-Salman, S., Haider, A. S., and Saed, H. (2022). The psychological impact of COVID-19’s e-learning digital tools on Jordanian university students’ well-being. J. Ment. Health Train. Educ. Pract. doi: 10.1108/JMHTEP-09-2021-0106 [Epub ahead of print].

Bilen, E., and Matros, A. (2021). Online cheating amid COVID-19. J. Econ. Behav. Organ. 182, 196–211. doi: 10.1016/j.jebo.2020.12.004

Chao, K. J., Hung, I. C., and Chen, N. S. (2012). On the design of online synchronous assessments in a synchronous cyber classroom. J. Comput. Assist. Learn. 28, 379–395. doi: 10.1111/j.1365-2729.2011.00463.x

Clark, T. M., Callam, C. S., Paul, N. M., Stoltzfus, M. W., and Turner, D. (2020). Testing in the time of COVID-19: a sudden transition to unproctored online exams. J. Chem. Educ. 97, 3413–3417. doi: 10.1021/acs.jchemed.0c01318

Cronbach, L. J. (1951). Coefficient alpha and the internal structure of tests. Psychometrika 16, 297–334. doi: 10.1007/bf02310555

Frankl, G., and Bitter, S. (2012). Online Exams: Practical Implications and Future Directions. Paper presented at the Proceedings of the European Conference on e-Learning, Groningen, Netherlands.

George, M. L. (2020). Effective teaching and examination strategies for undergraduate learning during COVID-19 school restrictions. J. Educ. Technol. Syst. 49, 23–48. doi: 10.1177/0047239520934017

Guangul, F. M., Suhail, A. H., Khalit, M. I., and Khidhir, B. A. (2020). Challenges of remote assessment in higher education in the context of COVID-19: a case study of Middle East College. Educ. Assess. Eval. Account. 32, 519–535. doi: 10.1007/s11092-020-09340-w

Haider, A. S., and Al-Salman, S. (2020). Dataset of Jordanian university students’ psychological health impacted by using e-learning tools during COVID-19. Data Brief 32:106104. doi: 10.1016/j.dib.2020.106104

Haider, A. S., and Al-Salman, S. (2021). Jordanian university instructors’ perspectives on emergency remote teaching during COVID-19: humanities vs sciences. J. Appl. Res. High. Educ. doi: 10.1108/JARHE-07-2021-0261 [Epub ahead of print].

Hendricks, S., and Bailey, S. (2014). What really matters? Technological proficiency in an online course. Online J. Distance Learn. Admin. 17.

Ilgaz, H., and Adanır, G. A. (2020). Providing online exams for online learners: does it really matter for them? Educ. Inf. Technol. 25, 1255–1269. doi: 10.1007/s10639-019-10020-6

Jovanovska, J. (2018). Designing effective multiple-choice questions for assessing learning outcomes. J. Digit. Humanit. 18, 25–42. doi: 10.18485/infotheca.2018.18.1.2

Kendall, M., and Stuart, A. (1973). The Advanced Theory of Statistics: Inference and Relationship, Vol. 2. New York, NY: Hafner Publishing Company.

Kharbat, F. F., and Abu Daabes, A. S. (2021). E-proctored exams during the COVID-19 pandemic: a close understanding. Educ. Inf. Technol. 26, 6589–6605. doi: 10.1007/s10639-021-10458-7

Lassoued, Z., Alhendawi, M., and Bashitialshaaer, R. (2020). An exploratory study of the obstacles for achieving quality in distance learning during the COVID-19 pandemic. Educ. Sci. 10:232. doi: 10.3390/educsci10090232

Maison, M., Kurniawan, D. A., and Anggraini, L. (2021). Perception, attitude, and student awareness in working on online tasks during the covid-19 pandemic. J. Pendidikan Sains Indones. 9, 108–118. doi: 10.31958/jt.v23i2.2416

Meccawy, Z., Meccawy, M., and Alsobhi, A. (2021). Assessment in ‘survival mode’: student and faculty perceptions of online assessment practices in HE during covid-19 pandemic. Int. J. Educ. Integr. 17, 1–24. doi: 10.1080/02602938.2021.1928599

Michael, T. B., and Williams, M. A. (2013). Student equity: discouraging cheating in online courses. Adm. Issues J. 3:30. doi: 10.5929/2013.3.2.8

Mohmmed, A. O., Khidhir, B. A., Nazeer, A., and Vijayan, V. J. (2020). Emergency remote teaching during coronavirus pandemic: the current trend and future directive at Middle East College Oman. Innov. Infrastruct. Solut. 5:72. doi: 10.1007/s41062-020-00326-7

Montenegro-Rueda, M., Luque-de la Rosa, A., Sarasola Sánchez-Serrano, J. L., and Fernández-Cerero, J. (2021). Assessment in higher education during the COVID-19 pandemic: a systematic review. Sustainability 13:10509. doi: 10.3390/su131910509

Oyedotun, T. D. (2020). Sudden change of pedagogy in education driven by COVID-19: perspectives and evaluation from a developing country. Res. Global. 2:100029. doi: 10.1016/j.resglo.2020.100029

Rahim, A. F. A. (2020). Guidelines for online assessment in emergency remote teaching during the COVID-19 pandemic. Educ. Med. J. 12, 59–68. doi: 10.21315/eimj2020.12.2.6

Rogers, C. F. (2006). Faculty perceptions about e-cheating during online testing. J. Comput. Sci. Coll. 22, 206–212.

Schmidt, S. M., Ralph, D. L., and Buskirk, B. (2009). Utilizing online exams: a case study. J. Coll. Teach. Learn. 6:8. doi: 10.19030/tlc.v6i8.1108

Senel, S., and Senel, H. C. (2021). Remote assessment in higher education during COVID-19 pandemic. Int. J. Assess. Tools Educ. 8, 181–199. doi: 10.21449/ijate.820140

Keywords: online testing, internet, question types, exam security, test design, cheating (education)

Citation: Haider AS, Hussein RF and Saed HA (2022) Jordanian University Instructors’ Practices and Perceptions of Online Testing in the COVID-19 Era. Front. Educ. 7:856129. doi: 10.3389/feduc.2022.856129

Received: 16 January 2022; Accepted: 04 April 2022; Published: 09 May 2022.

Edited by:

Mona Hmoud AlSheikh, Imam Abdulrahman Bin Faisal University, Saudi Arabia

Reviewed by:

Milan Kubiatko, J. E. Purkyne University, Czechia

Spencer A. Benson, Education Innovations International, LLC, United States

Copyright © 2022 Haider, Hussein and Saed. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Ahmad S. Haider, ah_haider86@yahoo.com
