The effect of the COVID-19 pandemic on college enrollment: How has enrollment in criminal justice programs been affected by the pandemic in comparison to other college programs?

- ¹Department of Criminal Justice, Tarleton State University, Stephenville, TX, United States

- ²Department of Criminal Justice, Tarleton State University, Fort Worth, TX, United States

The COVID-19 pandemic has affected many aspects of our lives, including, for many people, the ability to attend school. While some students switched to online classes, others had to drop out of college or delay enrolling. Using official enrollment data for 12 public universities in the State of Texas, this study explores the impact of the COVID-19 pandemic on student enrollment in criminal justice programs. A series of statistical techniques, including t-tests comparing pre- and post-pandemic enrollment counts and panel data analysis models, is used to investigate trends and changes in program enrollment between 2009 and 2021. In line with existing research on the effect of the COVID-19 pandemic on college enrollment in general, the authors found a statistically significant negative effect of the pandemic on total college enrollment across the universities in the sample. However, no statistically significant effect of the pandemic was found on enrollment in criminal justice programs at these universities. Likewise, no effect was found for engineering programs or for all social science programs combined. In contrast to all other programs studied herein, enrollment in natural science programs was positively associated with the pandemic. The authors offer an explanation for these findings and suggest directions for future research.

1. Introduction

While some believe that in times of economic difficulty more people return to or start college to make themselves more marketable to employers once the downturn is over (Long, 2004; Bell and Blanchflower, 2011), others claim that as income levels drop, people become more concerned with earning enough for basic necessities than with going to college (Scafidi et al., 2021). Although these views may have held for past economic downturns, the effect of the COVID-19 pandemic and its associated economic problems on college enrollment has proven more complex and requires a more elaborate explanation. As the literature review below demonstrates, although a great deal of research has examined the overall effect of COVID-19 on schools and students, very limited attention has been given to specific educational programs. The purpose of this research is to fill this gap by exploring the effects of the pandemic on enrollment in various types of college programs, in order to help administrators and policymakers in education make data-driven, research-informed decisions that address the impact of the pandemic.

Before discussing the COVID-19 pandemic’s impact on college enrollment, it should be noted that a few studies have examined the effect of the pandemic on K-12 school enrollment. They found that the number of students in public schools declined during the pandemic and post-pandemic periods, while private schools saw an increase in students (Flanders, 2021; Kamssu and Kouam, 2021; Ogundari, 2022). The shift from public to private schools for some K-12 students appears to have been driven by some school districts’ policies of mandatory virtual learning during pandemic-related lockdowns, which some parents viewed as less effective than traditional face-to-face learning (Flanders, 2021; Ogundari, 2022). These findings may provide some context for changing college enrollment trends during the pandemic and in the period immediately following it.

As for college enrollment, it appears to have generally declined during the COVID-19 pandemic and immediately thereafter (Belfield and Brock, 2020; Chatterji and Li, 2021; National Center for Education Statistics, 2021; Prescott, 2021). According to October 2022 data from the National Student Clearinghouse Research Center (2022), the overall enrollment decline persisted in 2022, albeit at a slower rate than the decline from 2020 to 2021. From Fall 2020 to Fall 2022, the two-year decline across undergraduate and graduate programs was 4.2% (National Student Clearinghouse Research Center, 2022). The one-year decline among four-year public institutions from Fall 2021 to Fall 2022 was 1.6% (compared to 2.7% the previous year), while enrollment at four-year private nonprofit institutions declined 0.9% (compared to 0.2% the previous year) (National Student Clearinghouse Research Center, 2022). The sharp post-pandemic decline in community college enrollment has also slowed, with enrollment down 0.4% compared to a 5.0% decline the previous year (National Student Clearinghouse Research Center, 2022). It is worth noting that these statistics suggest private colleges have seen a smaller decline in enrollment than public institutions of higher education. This trend is somewhat similar to the one observed in K-12 education during the pandemic, as discussed in the previous paragraph.

Interestingly, while enrollment at four-year public institutions declined from Fall 2020 to Fall 2022 in towns (−7.5%), cities (−3.5%), suburban areas (−5.2%), and rural areas (−5.5%), primarily online institutions experienced a 3.2% increase in enrollment over the same period (National Student Clearinghouse Research Center, 2022). This contrasts with research on K-12 enrollment suggesting that public school districts that switched to fully online learning saw significant enrollment declines as students moved to schools offering more traditional face-to-face learning (Flanders, 2021; Ogundari, 2022). This difference between K-12 and college enrollment is most likely due to inherent differences between educating minors and adults, e.g., adults’ ability to study independently or their need to work while studying. Like fully online schools, some public institutions that enroll largely in-state students, such as the University of Massachusetts and the University of Tennessee at Knoxville, saw large enrollment increases (10 and 4.9%, respectively; Kamssu and Kouam, 2021). These increases may be explained by cost-cutting on the part of students who originally targeted prestigious out-of-state schools but had to change their plans due to the uncertainty caused by the pandemic (Korn, 2020; SimpsonScarborough, 2020).

The overall decline in college enrollment from Fall 2019 (pre-pandemic) to Fall 2020 (pandemic) was partly explained by an increased number of deferments (Kamssu and Kouam, 2021). Krantz and Fernandes (2020) reported that in Fall 2020, admission deferments at Harvard and MIT increased from 1% to 20% and 8%, respectively. Equally important, the COVID-19 pandemic started outside the United States at the end of 2019 (i.e., well before it became a major concern in the United States), which further reduced the number of incoming international students, a number that had already been declining for 3 consecutive years before the pandemic (Crawford et al., 2020; Fischer, 2020; Toquero, 2020). It is therefore important to keep in mind that the changes in enrollment trends seen today are not solely due to the pandemic. Some existed before the pandemic and persisted during it, some were exacerbated by it, and others, although caused by the pandemic, may well be temporary.

Several qualitative studies have sought to understand the factors driving the decline in college enrollment from the perspective of students. Steimle et al. (2022) surveyed 398 students majoring in industrial engineering about their intentions to enroll in the Fall 2020 semester. They divided their sample into three groups based on each student’s personal level of concern about COVID-19. Most students were classified as moderately concerned and indicated they planned to enroll provided that a mixture of face-to-face and online classes was available and that safety measures to mitigate COVID-19 transmission were in place. Students classified as highly concerned indicated they were likely to enroll only if online courses were available. Students who were not very concerned preferred face-to-face classes and showed only some interest in the mitigation measures of masking and testing. Interestingly, students reported higher confidence that they themselves would follow safety protocols than they attributed to other students. While this study offers a valuable look at the factors students may have weighed when deciding whether to enroll during the pandemic, concern about the pandemic may become less influential in student decision-making as we move further away from its peak.

Schudde et al. (2022) interviewed 56 participants in a longitudinal study of educational trajectories. The Fall 2020 interviews were the sixth wave of the study and allowed the researchers to examine how COVID-19 had affected the educational trajectories of the participants, all of whom had been enrolled in community college, with intentions to transfer to a four-year institution, at the first wave of interviews in Fall 2015. Eight of the interviewees were classified as “optimizers,” meaning that they were able to stay on their original course toward their occupational and educational goals. These participants differed from the others in having access to a safety net, including financial security, family support, and/or working conditions that improved when they began working from home. For these students, the pandemic did not harm their educational trajectory and may even have improved it through more flexible course formats and more flexible work arrangements.

The largest group of interviewees (n = 32) was classified as “satisficers,” meaning that they were able to continue pursuing higher education, but not in an ideal situation. Satisficers also reported access to a safety net, but saw themselves as stagnating rather than moving forward. These participants reported postponing changes to their current employment or educational situation, even though that situation might not be ideal. Many satisficers reported that online coursework allowed them to continue pursuing higher education. Lastly, “strugglers” (n = 16) were not able to sustain the employment or educational trajectories they were on before the pandemic. These participants lacked a strong safety net, and instability in their employment negatively affected their continued pursuit of higher education. While this is a small study and caution is warranted in generalizing from it, it does highlight the importance of examining the pandemic’s impact on students’ ability to pursue higher education.

The above literature review underscores the importance of continued exploration of the impact of the COVID-19 pandemic on universities and their students. While several studies have examined the effect of the pandemic and pandemic-related shutdowns on college enrollment in general, the authors were unable to find any studies investigating in depth how individual educational programs have been affected and whether the pandemic’s effect has differed across programs. Specifically, no studies exploring the impact of the pandemic on college enrollment in criminal justice programs were identified. Exploring this impact is important in the context of the general decline in enrollment in criminal justice programs seen in the past 2 years (National Student Clearinghouse Research Center, 2022). Researchers, school administrators, and policymakers need to understand whether this decline is caused by the pandemic (i.e., is likely temporary) or by well-publicized incidents of police misconduct (e.g., the killings of George Floyd and Breonna Taylor), which can have a longer-lasting effect. To address this gap in the existing literature, this study examines criminal justice program enrollment at 12 public universities from 2009 to 2021. It further compares the enrollment trends observed in criminal justice programs over this period with those in all social science programs combined, as well as in programs outside the social sciences (specifically, engineering and natural sciences), to gauge how different majors may have been affected by the pandemic.

This study aims to explore the following two research questions:

(1) What effect has the COVID-19 pandemic had on college enrollment for criminal justice programs?

(2) Has the impact of the COVID-19 pandemic on college enrollment differed between criminal justice programs and other educational programs?

2. Materials and methods

2.1. Data sources

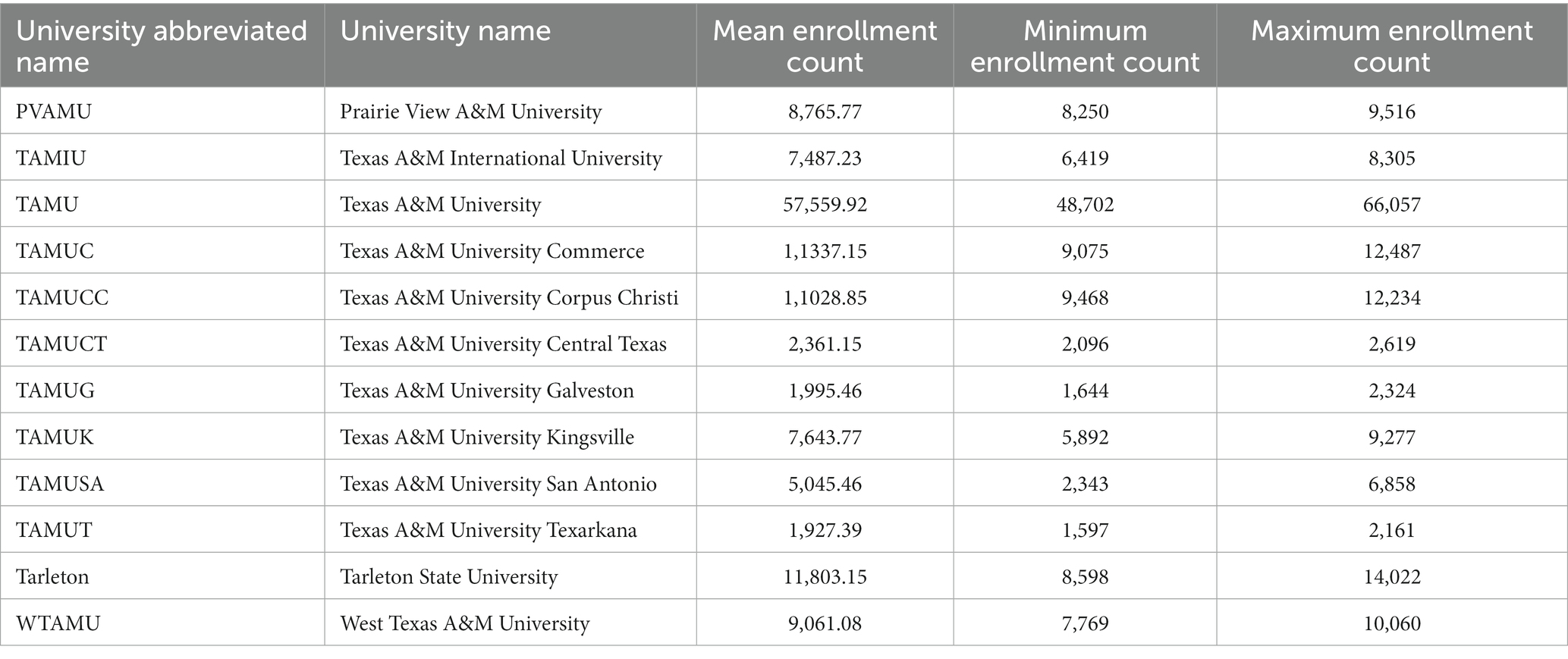

To answer the research questions, the authors used enrollment and program data on 12 public universities in the State of Texas for the period from 2009 to 2021. All of the participating universities are members of the Texas A&M University System. Although this is not a random sample that would permit generalization to all public universities in the United States, the dataset is suitable for this study for the following reasons. First, with rare exceptions discussed below, all Texas A&M universities regularly submit their data to Texas A&M System administrators, which allowed the authors to carry out panel data analysis on 13 years of data. This is particularly valuable for a study of the effect of the COVID-19 pandemic on college enrollment, as no longitudinal analysis of this research question has been conducted to date. Second, the dataset comprises universities with diverse characteristics. As Table 1 below shows, the sample includes schools with mean enrollments ranging from 2,361 to 57,560 students over the study period. As the descriptive analysis below shows, the universities in the sample also differ in characteristics beyond size. This diversity suggests that the universities studied here share characteristics with many public universities in the United States, which in turn makes the findings relevant for research and policymaking purposes.
In the absence of a longitudinal analysis of enrollment counts for a large, randomly selected sample of colleges across the country, or the ability to conduct one, this study offers unique insight into how the pandemic has affected enrollment in various educational programs at US institutions of higher education.

The above-referenced data were obtained by the authors from two sources: (1) the official website of the Texas A&M University System1 which makes enrollment counts for all Texas A&M universities available to the general public and (2) the Texas A&M University System administration which has provided additional data on schools and programs in response to a written request.

2.2. Measurement

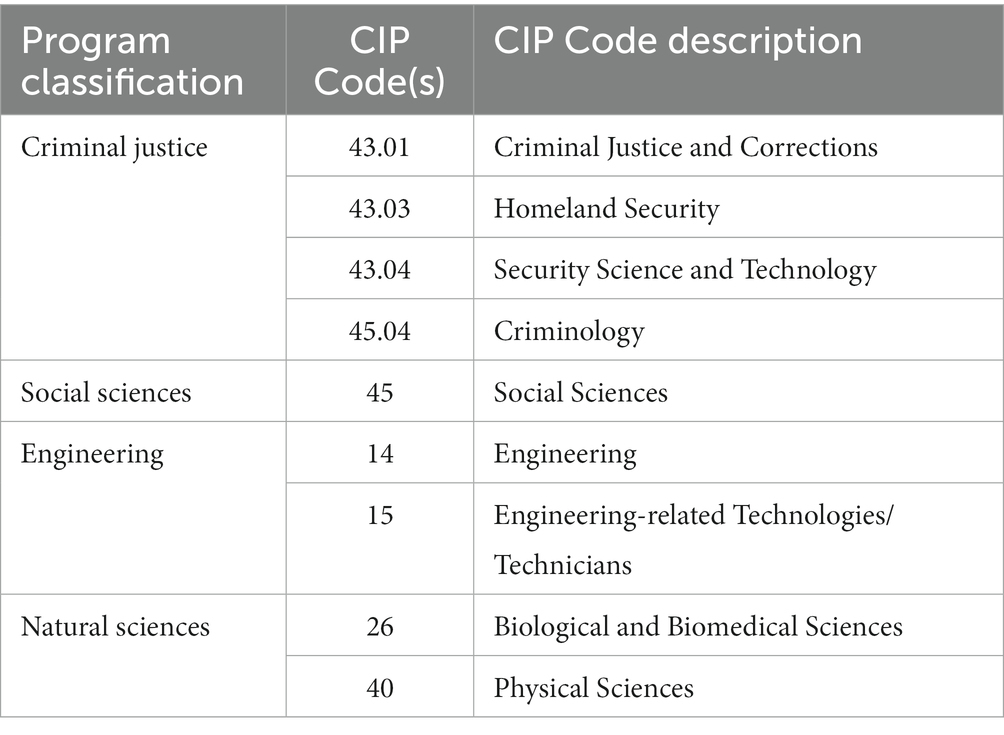

To answer the research questions about the impact of the COVID-19 pandemic on enrollment in criminal justice and other educational programs, the authors use enrollment counts as the dependent variable together with a set of independent variables. Because the original dataset provided by the Texas A&M University System tracks enrollment by Classification of Instructional Programs (CIP) code (a code system used by the United States Department of Education to classify educational programs; Mau, 2016; Leider et al., 2018), the authors combined certain CIP codes to construct specific majors (i.e., criminal justice, social sciences, engineering, and natural sciences). All majors analyzed in this study are listed in Table 2 below with their corresponding CIP codes. Note that the enrollment count for each year from 2009 to 2021 is the fall semester enrollment count for that year, not a combined or mean count across all semesters of a calendar or academic year.
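The code-to-major aggregation described above can be sketched in Python as follows. This is a minimal illustration, not the authors’ procedure: the prefix mapping is hypothetical (the study’s actual code lists appear in Table 2), and the input is assumed to be a list of (university, year, CIP code, fall enrollment) records.

```python
from collections import defaultdict

# Hypothetical mapping from CIP code prefixes to majors; the study's
# actual code lists are given in Table 2 and may differ.
MAJOR_PREFIXES = {
    "criminal justice": ("43.01",),
    "engineering": ("14.",),
    "social sciences": ("45.",),
}


def aggregate_by_major(rows):
    """Sum fall enrollment per (university, year, major).

    rows: iterable of (university, year, cip_code, count) tuples.
    CIP codes that match no prefix are ignored.
    """
    totals = defaultdict(int)
    for university, year, cip_code, count in rows:
        for major, prefixes in MAJOR_PREFIXES.items():
            # str.startswith accepts a tuple of alternative prefixes.
            if cip_code.startswith(prefixes):
                totals[(university, year, major)] += count
    return dict(totals)
```

Because each major is defined by a tuple of prefixes, several CIP codes roll up into one major. A code matching the prefixes of two majors would be counted in both, so a real mapping should be checked for overlaps.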

Table 2. The Classification of Instructional Programs (CIP) codes used to construct various majors for the purpose of data analysis.

The independent variables include the COVID-19 pandemic as the primary predictor and several control variables. The primary predictor is a binary (yes/no) indicator that takes the value “no” for the years 2009 to 2019 and “yes” for 2020–2021.
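Here is a minimal sketch of how such an indicator might be built. It assumes a 0/1 coding (0 for “no,” 1 for “yes”), although the study describes the variable only as yes/no. The names below are hypothetical.

```python
PANDEMIC_START = 2020  # first fall semester treated as a pandemic year


def pandemic_indicator(year: int) -> int:
    """Return 1 ("yes") for fall 2020 onward and 0 ("no") for earlier years.

    The 0/1 coding is an assumption made for illustration.
    """
    return 1 if year >= PANDEMIC_START else 0


# Indicator values for the study period, fall 2009 through fall 2021.
covid = {year: pandemic_indicator(year) for year in range(2009, 2022)}
```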

The control variables used in this study are the numbers of (1) first-time, (2) transfer, (3) foreign, (4) in-state, (5) doctoral, (6) master’s, (7) part-time, and (8) female students. All control variables are discrete count variables that take non-negative integer values. Each control variable’s value represents the number of students of a given type enrolled in the educational program.

2.3. Statistical analysis

This study uses Stata 16 to conduct a two-part statistical analysis consisting of a series of t-tests and panel data regression analysis. The t-tests check whether differences in enrollment counts between certain time periods, across all universities in the sample, are statistically significant; the idea is to see whether enrollment counts before and during the pandemic differ significantly. First, the authors conduct three separate t-tests comparing mean enrollment for 2019 vs. 2020 (1 year before the pandemic and the first pandemic year), 2018–2019 vs. 2020–2021 (2 years before and 2 years into the pandemic), and 2009–2019 vs. 2020–2021 (the entire 13-year period, with 11 years of pre-pandemic data and 2 years of pandemic data). After checking for differences in enrollment counts before vs. during the pandemic for all universities and educational programs combined, the authors perform the same series of t-tests for each program of interest separately (i.e., criminal justice, social sciences, engineering, and natural sciences).
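The three period comparisons can be illustrated with a pooled-variance (Student’s) two-sample t statistic using only Python’s standard library. This sketch rests on several assumptions. The panel is assumed to be a dictionary keyed by (university, year). Each university-year count is treated as an independent observation, which is exactly the simplification the panel models later relax. No p-values are computed. SciPy’s `scipy.stats.ttest_ind(a, b, equal_var=True)` performs the same test and also returns a p-value.

```python
import math
import statistics


def counts_for(panel, years):
    """Collect fall enrollment counts for the given years across universities."""
    return [count for (_university, year), count in panel.items() if year in years]


def pooled_t(sample_a, sample_b):
    """Student's two-sample t statistic assuming equal variances.

    Returns (t, degrees_of_freedom); each sample needs at least two values.
    A positive t means sample_a has the larger mean.
    """
    n_a, n_b = len(sample_a), len(sample_b)
    var_a = statistics.variance(sample_a)  # sample variance, n - 1 denominator
    var_b = statistics.variance(sample_b)
    df = n_a + n_b - 2
    pooled_var = ((n_a - 1) * var_a + (n_b - 1) * var_b) / df
    std_err = math.sqrt(pooled_var * (1 / n_a + 1 / n_b))
    t = (statistics.mean(sample_a) - statistics.mean(sample_b)) / std_err
    return t, df


# Pre-pandemic vs. pandemic periods compared in the study (range end is exclusive).
COMPARISONS = {
    "2019 vs. 2020": (range(2019, 2020), range(2020, 2021)),
    "2018-2019 vs. 2020-2021": (range(2018, 2020), range(2020, 2022)),
    "2009-2019 vs. 2020-2021": (range(2009, 2020), range(2020, 2022)),
}


def run_comparisons(panel):
    """Return {label: (t, df)} for each pre-pandemic vs. pandemic comparison."""
    results = {}
    for label, (pre_years, pandemic_years) in COMPARISONS.items():
        pre = counts_for(panel, pre_years)
        during = counts_for(panel, pandemic_years)
        results[label] = pooled_t(pre, during)
    return results
```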

The second part of the statistical analysis is a panel data regression analysis using fixed effects and random effects models, with post-hoc tests to determine which model is more appropriate for the data (Andreß, 2013). Panel data contain information on multiple entities (people, organizations, countries, etc.) observed over a period of time (Torres-Reyna, 2007). They are sometimes referred to as multiple-entity longitudinal data or cross-sectional time-series data (Hsiao, 2003; Park, 2011). Panel data analysis allows researchers to analyze differences within and between entities over time while controlling for unobserved variables (Torres-Reyna, 2007).

As with the t-tests, the first set of panel data models tests the pandemic’s impact on total enrollment counts across all universities and educational programs. The second set of models then estimates the effect of the pandemic on enrollment in each individual program (i.e., criminal justice, social sciences, engineering, and natural sciences). For each set of models, the authors run fixed and random effects models followed by a post-hoc Hansen’s J test, which helps determine which model is more appropriate for the data at hand (Green, 2007).
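To make the idea behind the fixed effects model concrete, the sketch below computes the “within” estimator for a single regressor. Each university’s own means are subtracted from its enrollment and pandemic-indicator values, which removes any time-invariant university effect. Ordinary least squares is then run on the demeaned data. This is a simplified illustration. It omits the control variables, the robust standard errors, the random effects model, and the Hansen’s J comparison used in the study. The input format, a list of (university, indicator, enrollment) tuples, is hypothetical. The full models would be estimated with a dedicated routine, such as Stata’s `xtreg` with the `fe` or `re` option.

```python
from collections import defaultdict


def within_estimate(panel):
    """Fixed effects (within) slope for one regressor.

    panel: list of (entity, x, y) tuples, e.g. (university, covid, enrollment).
    Subtracting each entity's means of x and y removes time-invariant
    entity effects; OLS on the demeaned data then needs no intercept.
    """
    sums = defaultdict(lambda: [0.0, 0.0, 0])  # entity -> [sum_x, sum_y, n]
    for entity, x, y in panel:
        s = sums[entity]
        s[0] += x
        s[1] += y
        s[2] += 1

    numerator = 0.0
    denominator = 0.0
    for entity, x, y in panel:
        sum_x, sum_y, n = sums[entity]
        dx = x - sum_x / n  # deviation of x from the entity's own mean
        dy = y - sum_y / n  # deviation of y from the entity's own mean
        numerator += dx * dy
        denominator += dx * dx
    return numerator / denominator
```

In the usage example below, a university observed only in pandemic years contributes nothing to the slope, because its demeaned indicator is zero. Only within-university changes identify the effect.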

3. Results

The COVID-19 pandemic has had a substantial effect on educational institutions across the world (World Bank, 2020). Through a series of t-tests and panel data regression models, this study aims to determine whether the pandemic has affected enrollment in criminal justice programs at institutions of higher education in the United States, and how the impact on criminal justice programs compares with the impact on other educational programs, namely all social science programs combined, natural science programs, and engineering programs.

3.1. Descriptive analysis

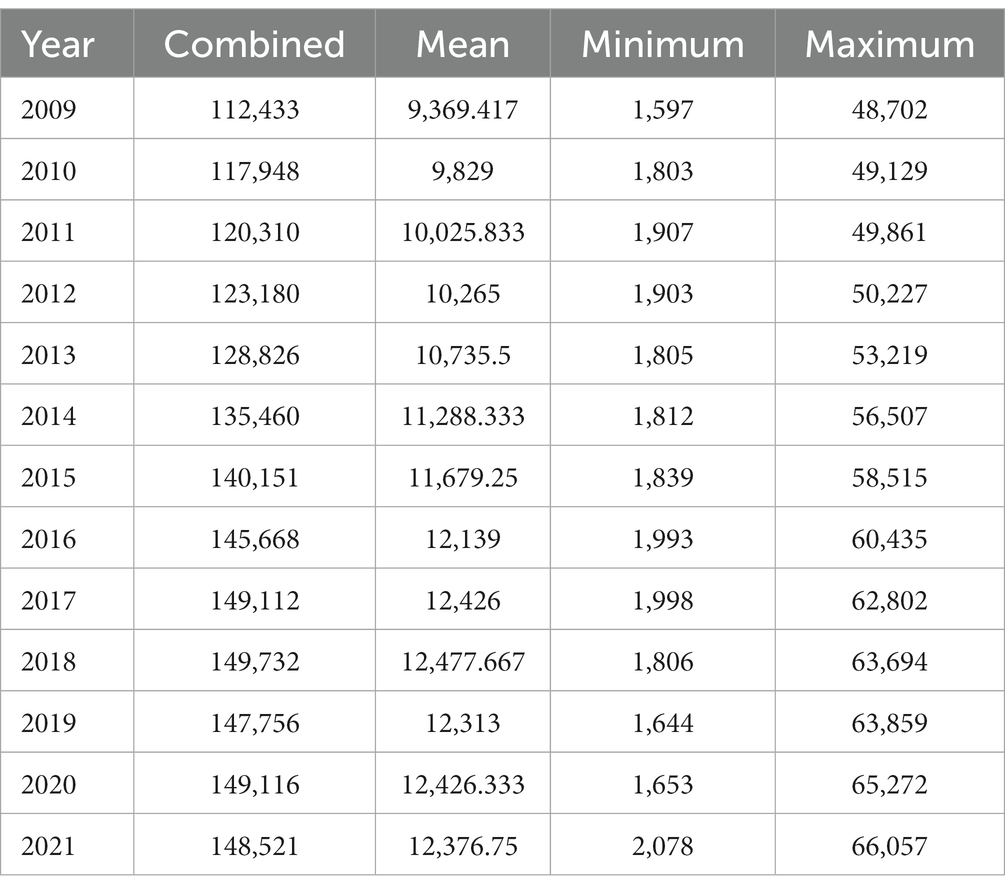

As discussed previously, Table 1 above lists the universities studied, together with their mean, minimum, and maximum enrollment counts from 2009 to 2021. Texas A&M University Texarkana and Texas A&M University at Galveston have the lowest mean enrollment counts (1,927 and 1,995, respectively), while Texas A&M University has the highest (57,560 students). Most schools (8 out of 12) fall between 5,000 and 12,000 students. Table 3 below shows total enrollment counts across all institutions and programs for each year from 2009 to 2021. The table shows steady overall growth in enrollment with only two dips: in 2019 (the fall semester immediately preceding the COVID-19 outbreak in the United States), enrollment fell to pre-2017 levels, and in 2021 total enrollment dropped by a little over 500 students compared to the previous year.

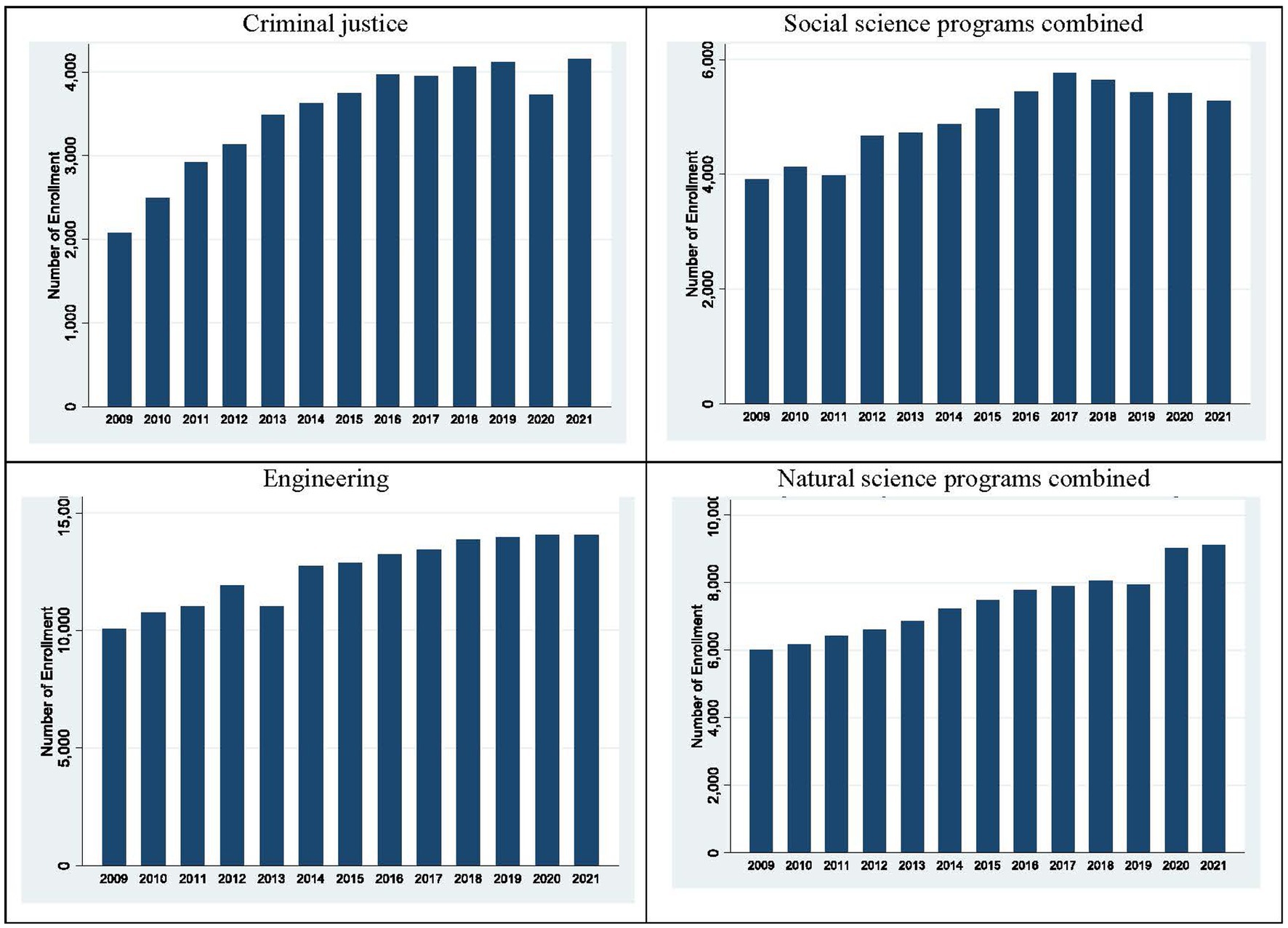

Figure 1 provides a visual representation of enrollment for all programs under study (i.e., criminal justice, social sciences, engineering, and natural sciences). All programs grew steadily over the study period. Visual examination of these trends does not suggest that the COVID-19 pandemic had any substantial impact on enrollment at the universities in the sample.

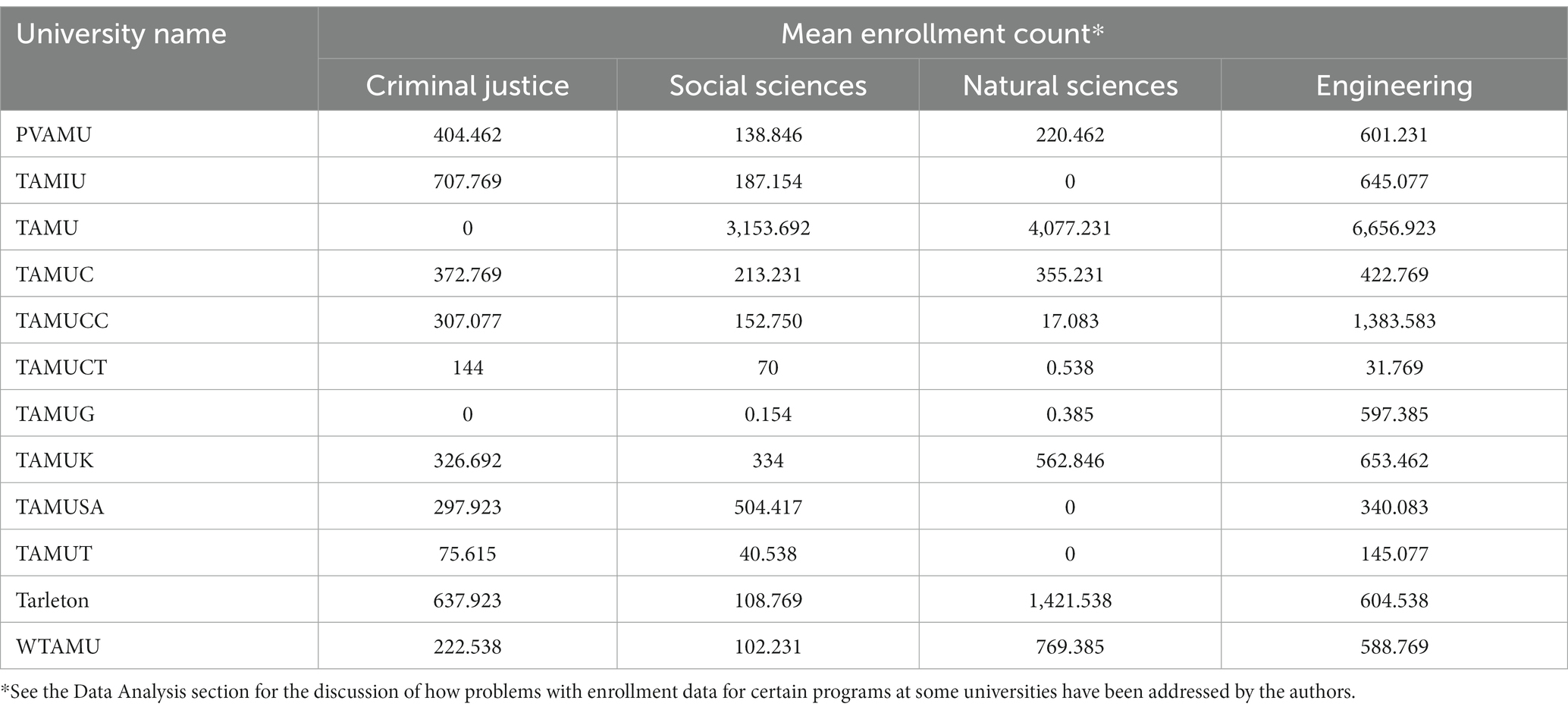

Close examination of line charts of enrollment counts for each program at each of the 12 universities (omitted here for space but available from the authors on request) suggests that some universities do not have reliable enrollment data for specific programs. This is also evident from the mean enrollment counts for each program type across all schools in the sample from 2009 to 2021, listed in Table 4 below. The table reveals problems with the enrollment data for certain programs at some schools, which warrants removing those schools from the analyses of programs for which they lack reliable data. Accordingly, Texas A&M University and Texas A&M University Galveston are removed from the analysis of the impact of COVID-19 on enrollment in criminal justice programs; Texas A&M University Galveston is removed from the analysis of enrollment in all social science programs combined; and Texas A&M International University, Texas A&M University Central Texas, Texas A&M University San Antonio, Texas A&M University Texarkana, and Texas A&M University Galveston are removed from the analysis of enrollment in natural science programs. Further, all of these schools are removed from the analysis of the overall impact of the pandemic on all educational programs.

Table 4. Mean enrollment counts for each program type under the study across all universities from 2009 to 2021.

Removing these schools reduces the number of universities in the sample to 11 for the analysis of all social science programs, 10 for criminal justice, 7 for natural sciences, and 6 for all educational programs. The analysis of engineering enrollment continues to use data from all 12 schools in the original sample, as no data problems were identified for this program.
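The resulting sample sizes can be checked with a few lines of Python. The school names come from the text. The only added assumption is the rule stated in the comment: a school excluded from any program-specific analysis is also dropped from the all-programs analysis, so that model loses the union of the exclusion lists.

```python
N_SCHOOLS = 12  # universities in the original sample

# Schools excluded from each program-specific analysis, as listed in the text.
EXCLUDED = {
    "criminal justice": {"Texas A&M University", "Texas A&M University Galveston"},
    "social sciences": {"Texas A&M University Galveston"},
    "natural sciences": {
        "Texas A&M International University",
        "Texas A&M University Central Texas",
        "Texas A&M University San Antonio",
        "Texas A&M University Texarkana",
        "Texas A&M University Galveston",
    },
    "engineering": set(),
}

# Assumed rule: the all-programs model drops every school excluded anywhere above.
EXCLUDED["all programs"] = set().union(*EXCLUDED.values())

remaining = {program: N_SCHOOLS - len(schools) for program, schools in EXCLUDED.items()}
```

The union contains six distinct schools, because Texas A&M University Galveston appears in three lists but is counted once. This leaves 6 universities for the all-programs model, matching the figure reported above.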

3.2. Inferential analysis

To determine if the COVID-19 pandemic has had any effect on the criminal justice program enrollment and how that effect compares to the pandemic’s impact on enrollment in other educational programs, the researchers conducted two types of inferential analysis: a series of t-tests and panel data analysis.

3.2.1. T-tests

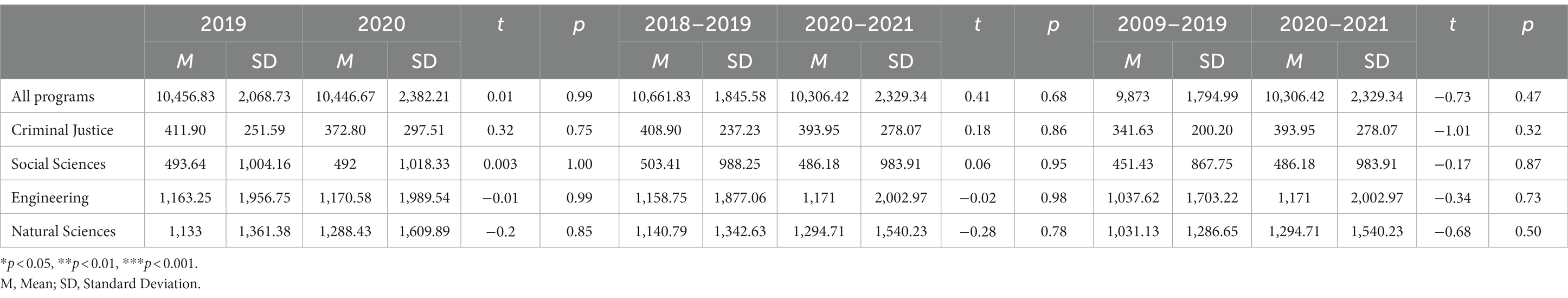

Before conducting the t-tests, the researchers performed Levene’s test for homogeneity of variance to ensure that the data are suitable for such analysis. A non-significant result for each model indicated no evidence against homogeneity of variances, a key assumption of the t-test. Further, the histogram of each dependent variable was examined to ensure that its distribution is close to normal. Treating 2020 as the year the COVID-19 pandemic started, a series of t-tests was conducted to test the significance of differences in enrollment counts across all programs for three pairs of time periods: (1) 2009–2019 vs. 2020–2021, (2) 2018–2019 vs. 2020–2021, and (3) 2019 vs. 2020. The researchers then conducted t-tests in the same way for each individual educational program, comparing the same three pairs of periods. The results are presented in Table 5 below.
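For readers unfamiliar with Levene’s test, the sketch below computes its W statistic in the original, mean-centered form. It takes the absolute deviation of each observation from its group mean and then runs a one-way ANOVA on those deviations. This is an illustration only: it returns the statistic but not a p-value, which would come from the F(k − 1, N − k) distribution. SciPy’s `scipy.stats.levene` computes both. Note that SciPy centers on the median by default (the Brown-Forsythe variant), and passing `center='mean'` gives the form below.

```python
import statistics


def levene_w(*groups):
    """Levene's W statistic using deviations from each group's mean.

    Under the null hypothesis of equal variances, W is approximately
    F-distributed with (k - 1, N - k) degrees of freedom.
    """
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    # Absolute deviation of each observation from its own group mean.
    z = [[abs(v - statistics.mean(g)) for v in g] for g in groups]
    z_means = [statistics.mean(zg) for zg in z]
    z_grand = sum(sum(zg) for zg in z) / n_total
    # One-way ANOVA on the deviations: between- and within-group sums of squares.
    between = sum(len(zg) * (zm - z_grand) ** 2 for zg, zm in zip(z, z_means))
    within = sum((v - zm) ** 2 for zg, zm in zip(z, z_means) for v in zg)
    return (n_total - k) / (k - 1) * between / within
```

Two groups with the same spread but different means, such as `[1, 2, 3]` and `[11, 12, 13]`, give W = 0. The test responds only to differences in spread.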

Table 5. A series of t-tests comparing enrollment counts for various pre- and post-COVID-19 time periods.

Table 5 presents the results of three separate t-tests (in columns) for all educational programs combined and for each individual program of interest (in rows). The means and standard deviations for each program over the different time periods show that pre- and post-pandemic values are very similar. As expected, mean enrollment counts are, for the most part, slightly lower in 2020 and 2021 than the pre-pandemic counts from 2018–2019. The significance tests match the impression one gets from simply eyeballing these counts: none of the 15 models shows a statistically significant difference in enrollment counts between the pre-pandemic and pandemic periods. While the analysis could have ended here, the nature of the data allows for a more elaborate panel data analysis, which is warranted for three reasons. First, the t-test does not account for unobserved variance (Hsiao, 2003). Second, the t-test by its nature cannot account for individual heterogeneity (Torres-Reyna, 2007); in other words, it does not take into account how enrollment varies within each school for each of the programs studied herein. Third, the t-test is merely a test of statistical significance, while panel data analysis estimates measures of association. Unlike the simple t-test, panel models allow for controlling for known factors, account for unobserved variance, and estimate the effect size of the relationship in question.

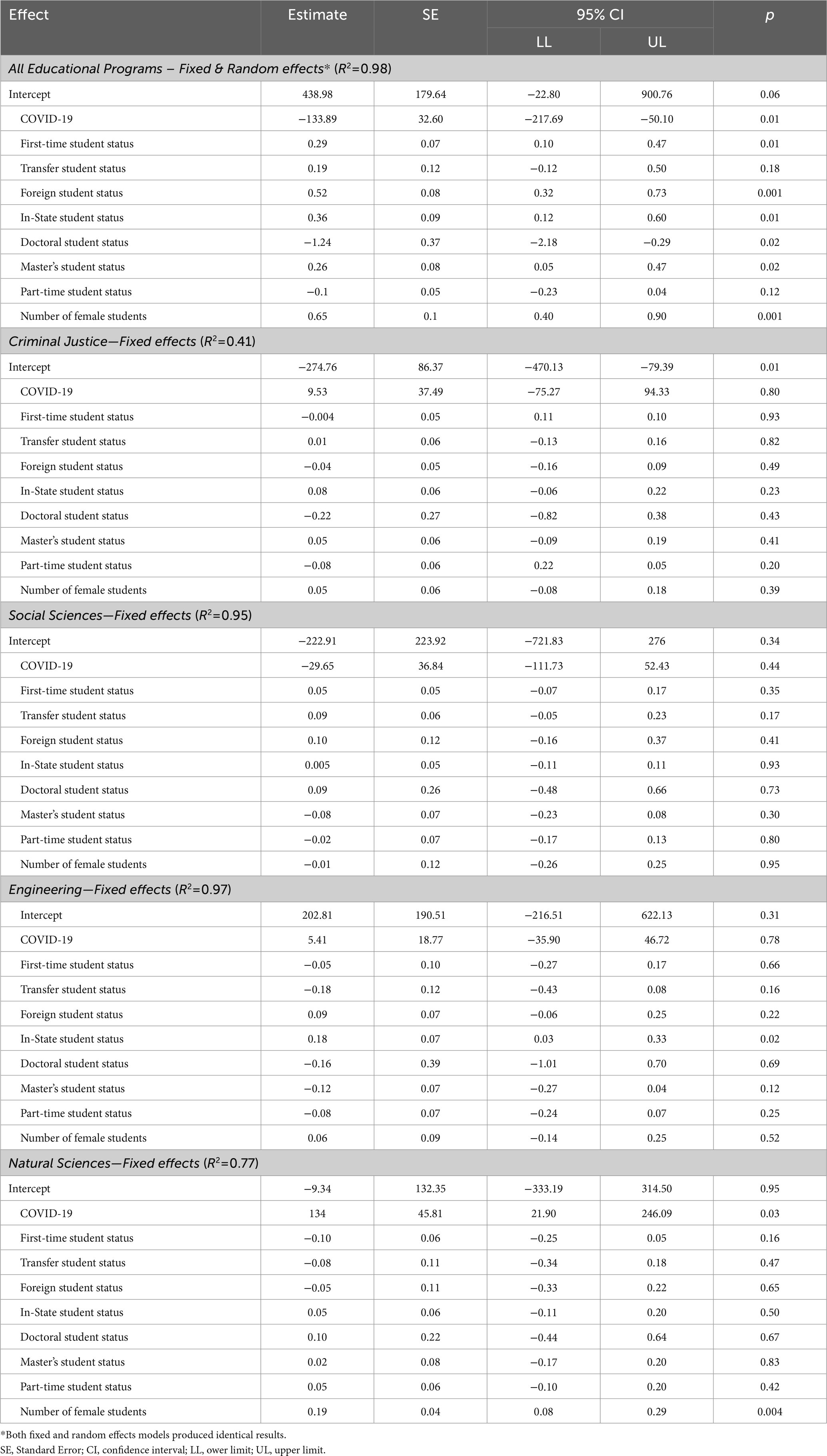

3.2.2. Regression analysis

As mentioned in the materials and methods section above, for this part of the analysis the authors ran two sets of fixed and random effects regression models. Before running the main models, the researchers tested the model assumptions using the Wald and Wooldridge tests for homoskedasticity and autocorrelation, respectively. In every panel model at least one of the two assumptions was violated, which prompted the researchers to run the fixed and random effects models with the “robust” option to address the problems stemming from these violations (Roodman, 2009). First, fixed and random effects models were estimated for the relationship between the COVID-19 pandemic and enrollment in all educational programs. These were followed by a series of models testing for the effect of the pandemic on enrollment in each individual program (i.e., criminal justice, social sciences, engineering, and natural sciences). Hansen’s J post-hoc test was used to help determine which specification was more appropriate. If Hansen’s J indicates correlation between the unobserved heterogeneity and the independent variables (i.e., the test is significant), the fixed effects regression should be used; if not, random effects is preferred (Park, 2011). The researchers chose Hansen’s J over the more conventional Hausman test because the Hausman test cannot be used with panel models estimated with the “robust” option in Stata (Drukker, 2003; Yaffee, 2003; Hoechle, 2007).

Table 6 presents the panel data regression results. The R² values, representing the share of variation in the dependent variable explained by the model, are above 95% for the models of the effect of the COVID-19 pandemic on enrollment in all educational programs, all social science programs, and engineering programs. The criminal justice and natural science models have lower R² values (41 and 77%, respectively). At the same time, the COVID-19 pandemic, the primary predictor in this study, has a statistically significant association with enrollment counts in only two of the five models. In the model of enrollment counts for all educational programs, the primary independent variable has a statistically significant negative effect on the dependent variable (B = −133.89; p = 0.01). This can be interpreted as follows: on average, educational programs at the universities in the sample saw enrollment decrease by 133.89 students during the pandemic period, holding the control variables constant. The other model with a statistically significant relationship between the pandemic and enrollment counts is the natural sciences model, which unexpectedly suggests that the pandemic has a positive, statistically significant effect on the dependent variable (B = 134; p = 0.03).

Table 6. Fixed and random effects model outputs for all educational programs and each individual type of educational program of interest.

4. Discussion

This study has examined the effect of the COVID-19 pandemic on enrollment in criminal justice programs and compared it with the pandemic’s effects on enrollment in engineering, natural science, and social science programs, as well as in all educational programs combined. The examination was carried out in two stages. First, a series of t-tests was used to test the significance of differences between the pre-pandemic and pandemic periods, defined in three ways: 2019 vs. 2020; 2018–2019 vs. 2020–2021; and 2009–2019 vs. 2020–2021. None of the t-tests showed statistically significant differences in enrollment counts for any program type. This was perhaps unsurprising, because simply eyeballing the charts of enrollment trends (Figure 1), as well as the table listing enrollment for every year from 2009 to 2021 (Table 3), suggests that changes in enrollment in 2020–2021 were not out of line with the changes from 2009 to 2019.

At the same time, being merely a test of statistical significance, the t-test is a fairly simplistic form of analysis that does not account for individual heterogeneity or unobserved variance. Nor does it allow researchers to estimate the effect size of the relationship of interest. Since the data allowed for a more elaborate analysis, the authors conducted a series of panel data analyses using both fixed and random effects models. These analyses suggest that the COVID-19 pandemic had a statistically significant negative effect on enrollment in all educational programs across the universities in the sample. This negative effect is not very large (B = −133.89), but it is intuitive, since it is reasonable to expect that the pandemic would reduce overall college enrollment. Thus, this finding is both statistically significant (i.e., unlikely to be due to random chance) and substantively meaningful. Based on the existing research reviewed above, which reached the same finding, the reasons for the reduction in overall college enrollment during the COVID-19 pandemic likely include uncertainty about the future, a lack of resources to start or continue education, and a lack of interest in online classes, which became the norm during the pandemic and remained in the curricula of most colleges thereafter. The relatively small size of the pandemic’s effect on overall college enrollment aligns well with the existing research reviewed in the literature review section above.
Of the two competing views outlined in the introduction, these results lend somewhat more support to the claim that as income levels drop, people become more concerned with earning enough for basic necessities than with going to college (Scafidi et al., 2021). They lend less support to the prediction that in times of economic uncertainty, such as that caused by the pandemic, people tend to go back to school to improve their job marketability (Long, 2004; Bell and Blanchflower, 2011). At the same time, non-traditional students returning to school to become more competitive on the job market likely offset part of the pandemic's negative impact on enrollment among recent high school graduates, who may have been in a more economically disadvantaged position. Future research should examine more closely the differential effects of the pandemic on enrollment for recent high school graduates versus non-traditional students.

Somewhat counterintuitively, the panel data analysis also revealed that enrollment in natural science programs has been positively associated with the pandemic. This effect is also not very large (B = 134). The statistically significant increase in natural science enrollment may be explained by two factors:

- shortages in healthcare occupations; and
- the improved “prestige” of healthcare jobs among people who saw healthcare professionals selflessly fighting the pandemic and saving hundreds of thousands of lives.

The remaining panel data models examined the effect of the COVID-19 pandemic on enrollment in criminal justice, all social science programs combined, and engineering programs. No statistically significant relationships between the primary independent variable and the dependent variables were found. This suggests that the changes in enrollment counts observed in these programs may very well be due to random chance. There are two possible explanations. First, there may not have been enough data for these specific programs: as mentioned previously, not every panel data model included all 12 universities for the entire period from 2009 to 2021. Second, the pandemic may simply not have had a statistically significant effect on enrollment in these programs. Until more studies testing this association are done, these findings should be interpreted with caution.

To sum up, the part of this analysis that essentially replicates existing research on overall college enrollment reached the same conclusion as previous studies: the pandemic has negatively affected college enrollment. At the same time, this research is the first attempt to examine the effect of the pandemic on enrollment in specific programs, and it provides somewhat unexpected results. Natural science programs have seen growth associated with the pandemic. In contrast, criminal justice programs, all social science programs combined, and engineering programs have seen no statistically significant change in enrollment as a result of the COVID-19 pandemic.

4.1. Limitations

No previous research has examined the coronavirus pandemic’s impact on enrollment counts in specific educational programs. This study therefore offers unique value by providing the first insight into how various educational programs have been affected by the pandemic and by laying the foundation for future research. At the same time, as the first article of its kind, this study has certain limitations that should be acknowledged. First and foremost, this research is based on a non-randomly selected sample of universities. All of the universities in the sample belong to the same university system and are located in the same state, so they are governed by the same Texas A&M University System administrators. Texas A&M System universities may possess characteristics that differ from those of other US institutions of higher education, which could have made the pandemic’s impact on their enrollment larger or smaller than elsewhere. Official statistics suggest that overall enrollment in Texas declined by 1.1% from Fall 2020 to Fall 2022. That decline is 3.1 percentage points smaller, and nearly four times smaller in relative terms, than the 4.2% decline seen across all public universities in the nation (National Student Clearinghouse Research Center, 2022). This underscores the importance of analyzing enrollment data from universities outside of Texas.
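
The two comparisons of the National Student Clearinghouse Research Center (2022) figures quoted above can be made explicit. The absolute gap is a difference measured in percentage points, whereas the “nearly four times” comparison is a ratio of the two declines:

```latex
\begin{align*}
\text{absolute gap} &= 4.2\% - 1.1\% = 3.1 \text{ percentage points},\\
\text{relative gap} &= \frac{4.2\%}{1.1\%} \approx 3.8 \quad (\text{nearly four times}).
\end{align*}
```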

Second, this study uses a relatively small sample. Having 13 years’ worth of data partly compensates for this, but each statistical model uses data from only 6 to 12 universities. The number varies because not all universities have accurate enrollment records for all educational programs studied herein. Thirteen years of records are beneficial for the statistical analysis used in this research, but they are not a substitute for data on a larger sample of universities. This is particularly true because this study analyzes up to 11 years of pre-pandemic data but only 2 years into the pandemic. Data on more universities, even for a shorter period of time, would likely provide more reliable findings on the effect of the pandemic on college enrollment.

Lastly, although panel data analysis generally accounts for unobserved variance, it is still recommended to include important factors as control variables in the regression analysis. Due to the nature of the dataset analyzed herein, this study had limited access to potential control variables. It would be beneficial to include variables capturing the political environment on campus, in the city or town where each university is located, and in the state. Existing literature has repeatedly found that the political environment is often more important in shaping enrollment decisions than the actual danger from the virus, or even the perceived threat or fear on the part of the public (Flanders, 2021; Kamssu and Kouam, 2021; Ogundari, 2022). Similarly, it would be important to include university characteristics such as the percentage of courses available online, the percentage of online students, or a measure of success in adapting to the new circumstances brought about by the COVID-19 pandemic.

4.2. Future research

Having acknowledged the limitations of this study, it is important to offer recommendations for future research.

- First, future research should verify the generalizability of these findings by analyzing enrollment data from other universities in the United States, preferably a representative sample.
- Second, other studies should explore the pandemic’s effect on enrollment counts or rates in other educational programs. This would show university administrators and policymakers which programs may be more prone to substantial declines in enrollment during a healthcare crisis.
- Third, this line of research should be expanded to private universities, community colleges, technical schools, and other institutions. This would help education administrators and policymakers understand whether the pandemic has had a similar effect on enrollment in all types of schools or whether some are more vulnerable than others.
- Fourth, a mixed-methods approach would add a qualitative component to an otherwise similarly designed study. This would allow researchers to learn from school administrators and students why certain trends are observed in the specific schools and programs studied. For example, stakeholders could explain why the pandemic has had a positive effect on enrollment in natural science programs but no statistically significant effect on criminal justice enrollment.
- Lastly, it would be very beneficial to explore the impact of the COVID-19 pandemic on student retention. Such research should determine which is the bigger threat to educational institutions: the lack of new students, or problems retaining students who have already enrolled but have been affected by the pandemic.

5. Conclusion

While COVID-19 era declines in enrollment seem to be leveling off, university administrators still need to address several enrollment-related issues. Doing so will help ensure that US universities are prepared to operate in “pandemic mode,” whether because of the current COVID-19 pandemic or any other healthcare crisis. At this time, we do not know how long coronavirus-related issues may continue to affect college enrollment. Researchers will likely study the COVID-19 pandemic’s effects on education in the United States and across the globe for many decades, so it is important to prioritize research on the most pressing issues. This study’s objective was to provide education administrators and policymakers with a much-needed understanding of the differential effects of the pandemic on various educational programs. The authors analyzed enrollment data from 12 universities comprising the Texas A&M University System and found that the pandemic did in fact affect enrollment counts differently across programs. Natural science programs were found to have benefited from the pandemic, while all educational programs combined were found to have been negatively affected by the crisis. Statistical significance is important, but it is equally important to understand the effect size of each association. Both of these effects were relatively small. The overall reduction in enrollment associated with the pandemic for all educational programs combined is only approximately 134 students (B = −133.89). The increase in enrollment seen in natural science programs is very similar in size (B = 134).

Using the findings from this study as a foundation, researchers should continue exploring the differential effects of the pandemic on various educational programs. This work will help school administrators and policymakers as universities adapt to new realities.

Data availability statement

The original contributions presented in the study are included in the article/Supplementary material; further inquiries can be directed to the corresponding author.

Author contributions

SK took ownership of the introduction, literature review, methodology, results, discussion, and conclusion sections. RD provided general guidance on the project, offered education administrator insights on the research topic, assisted with the interpretation of the findings, and contributed to the literature review and discussion sections of the manuscript. All authors contributed to the article and approved the submitted version.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Andreß, H. J., Golsch, K., and Schmidt, A. W. (2013). Applied Panel Data Analysis for Economic and Social Surveys. Berlin, Heidelberg: Springer Science & Business Media.

Belfield, C., and Brock, T. (2020). Behind the Enrollment Numbers: How COVID has Changed Students’ Plans for Community College. New York, NY: Community College Research Center.

Bell, D. N., and Blanchflower, D. G. (2011). Young people and the great recession. Oxf. Rev. Econ. Policy 27, 241–267. doi: 10.1093/oxrep/grr011

Chatterji, P., and Li, Y. (2021). Effects of COVID-19 on school enrollment. Econ. Educ. Rev. 83:102128. doi: 10.1016/j.econedurev.2021.102128

Crawford, J., Butler-Henderson, K., Rudolph, J., Malkawi, B., Glowatz, M., Burton, R., et al. (2020). COVID-19: countries’ higher education intra-period digital pedagogy responses. J. Appl. Learn. Teach. 3, 1–20. doi: 10.37074/jalt.2020.3.1.7

Drukker, D. M. (2003). Testing for serial correlation in linear panel-data models. Stata J. 3, 168–177. doi: 10.1177/1536867X0300300206

Fischer, K. (2020). Confronting the seismic impact of COVID-19: the need for research. J. Int. Stud. 10, i–ii. doi: 10.32674/jis.v10i2.2134

Flanders, W. (2021). Opting out: enrollment trends in response to continued public school shutdowns. J. School Choice 15, 331–343. doi: 10.1080/15582159.2021.1917750

Greene, W. H. (2007). Fixed and Random Effects Models for Count Data. New York, NY: New York University Stern School of Business.

Hoechle, D. (2007). Robust standard errors for panel regressions with cross-sectional dependence. Stata J. 7, 281–312. doi: 10.1177/1536867X0700700301

Kamssu, A. J., and Kouam, R. B. (2021). The effects of the COVID-19 pandemic on university student enrollment decisions and higher education resource allocation. J. High. Educ. Theory Pract. 21, 143–153. doi: 10.33423/jhetp.v21i12.4707

Korn, M. (2020). Choosing a college during coronavirus: how four students decided. Wall Street J. Available at: https://www.wsj.com/articles/choosing-a-college-duringcoronavirus-how-four-students-decided-11600315318

Krantz, L., and Fernandes, D. (2020). At Harvard, Other Elite Colleges, More Students Deferring their First Year. Boston, MA: Boston Globe.

Leider, J. P., Plepys, C. M., Castrucci, B. C., Burke, E. M., and Blakely, C. H. (2018). Trends in the conferral of graduate public health degrees: a triangulated approach. Public Health Rep. 133, 729–737. doi: 10.1177/0033354918791542

Long, B. T. (2004). How have college decisions changed over time? An application of the conditional logistic choice model. J. Econ. 121, 271–296. doi: 10.1016/j.jeconom.2003.10.004

Mau, W. C. J. (2016). Characteristics of US students that pursued a STEM major and factors that predicted their persistence in degree completion. Univ. J. Educ. Res. 4, 1495–1500. doi: 10.13189/ujer.2016.040630

National Center for Education Statistics (2021). Impact of the coronavirus pandemic on fall plans for postsecondary education. Available at: https://nces.ed.gov/programs/coe/pdf/2021/tpb_508c.pdf

National Student Clearinghouse Research Center (2022). COVID-19: Stay Informed with the Latest Enrollment Information. Herndon, VA: National Student Clearinghouse Research Center.

Ogundari, K. (2022). A note on the effect of COVID-19 and vaccine rollout on school enrollment in the US. Interchange 53, 233–241. doi: 10.1007/s10780-021-09453-1

Park, H. M. (2011). Practical guides to panel data modeling: a step-by-step analysis using Stata. Public Manag. Policy Analysis Program, Graduate School Int. Relations, Int. Univ. Japan 12, 1–52.

Prescott, B. T. (2021). Post-COVID enrollment and postsecondary planning. Magazine High. Learn. 53, 6–13. doi: 10.1080/00091383.2021.1906132

Roodman, D. (2009). How to do xtabond2: an introduction to difference and system GMM in Stata. Stata J. 9, 86–136. doi: 10.1177/1536867X0900900106

Scafidi, B., Tutterow, R., and Kavanagh, D. (2021). This time really is different: the effect of COVID-19 on independent K-12 school enrollments. J. School Choice 15, 305–330. doi: 10.1080/15582159.2021.1944722

Schudde, L., Castillo, S., Shook, L., and Jabbar, H. (2022). The age of satisficing? Juggling work, education, and competing priorities during the COVID-19 pandemic. Socius 8:23780231221088438. doi: 10.1177/23780231221088438

SimpsonScarborough (2020). The Impact of COVID-19 on Higher Education. Available at: https://impact.simpsonscarborough.com/covid/

Steimle, L. N., Sun, Y., Johnson, L., Besedes, T., Mokhtarian, P., and Nazzal, D. (2022). Students’ perceptions for returning to colleges and universities during the COVID-19 pandemic: a discrete choice experiment. Socio Econ. Plan. Sci. 82:101266. doi: 10.1016/j.seps.2022.101266

Toquero, C. M. (2020). Challenges and opportunities for higher education amid the COVID-19 pandemic: the Philippine context. Pedagogical Res. 5, 1–5. doi: 10.29333/pr/7947

Torres-Reyna, O. (2007). Panel Data Analysis Fixed and Random Effects using Stata (v. 4.2, Vol. 112) Princeton, NJ: Data & Statistical Services, Princeton University, 49.

World Bank (2020). The COVID-19 Pandemic: Shocks to Education and Policy Responses. Washington, D.C.: World Bank.

Keywords: COVID-19, coronavirus, college enrollment, student enrollment, criminal justice programs, natural science programs, social science programs, higher education

Citation: Korotchenko S and Dobbs R (2023) The effect of COVID-19 pandemic on college enrollment: How has enrollment in criminal justice programs been affected by the pandemic in comparison to other college programs. Front. Educ. 8:1136040. doi: 10.3389/feduc.2023.1136040

Edited by:

Carolyn Gentle-Genitty, Indiana University Bloomington, United States

Reviewed by:

Pinaki Chakraborty, Netaji Subhas University of Technology, India
Sergio Longobardi, University of Naples Parthenope, Italy

Copyright © 2023 Korotchenko and Dobbs. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Stan Korotchenko, skorotchenko@tarleton.edu

Stan Korotchenko, Rhonda Dobbs2