Abstract

The novel coronavirus SARS-CoV-2 and the COVID-19 illness it causes have inspired unprecedented levels of multidisciplinary research in an effort to address a generational public health challenge. In this work we conduct a scientometric analysis of COVID-19 research, paying particular attention to the nature of collaboration that this pandemic has fostered among different disciplines. Increased multidisciplinary collaboration has been shown to produce greater scientific impact, albeit with higher co-ordination costs. As such, we consider a collection of over 166,000 COVID-19-related articles to assess the scale and diversity of collaboration in COVID-19 research, which we compare to non-COVID-19 controls before and during the pandemic. We show that COVID-19 research teams are not only significantly smaller than their non-COVID-19 counterparts, but they are also more diverse. Furthermore, we find that COVID-19 research has increased the multidisciplinarity of authors across most scientific fields of study, indicating that COVID-19 has helped to remove some of the barriers that usually exist between disparate disciplines. Finally, we highlight a number of interesting areas of multidisciplinary research during COVID-19, and propose methodologies for visualising the nature of multidisciplinary collaboration, which may have application beyond this pandemic.

Introduction

The scientific response to the SARS-CoV-2 pandemic has been unprecedented with researchers from several surprising fields—e.g. artificial intelligence (Nguyen et al., 2021), economics (Nicola et al., 2020), and particle physics (Lustig et al., 2020)—contributing to solving the many and varied clinical and societal challenges arising from the pandemic. As a result, by January 2021, The Allen Institute for AI (Allen Institute, 2021) and the World Health Organisation (WHO, 2021) had identified over 166,000 research papers relating to SARS-CoV-2 and the COVID-19 illness it causes, highlighting an unprecedented period of scientific productivity. In this study we analyse this body of work to better understand the scale and nature of the collaboration and fields of study that have defined this research.

The benefits of collaboration in scientific research are well documented and widely accepted. In recent years there has been steady growth in research team size across all scientific disciplines (Leahey, 2016; Youngblood and Lahti, 2018), and team size has been shown to correlate positively with research impact (Lariviere et al., 2014; Porter and Rafols, 2008; Wuchty et al., 2007). Moreover, multidisciplinary science, which brings together researchers from many disparate subject areas, has been shown to be among the most successful scientific endeavours (Lariviere et al., 2015; Okamura, 2019). Indeed, multidisciplinary research has been highlighted as a key enabler when it comes to addressing some of the most complex challenges facing the world today (Leahey, 2016). Not surprisingly then, there have been numerous attempts to encourage and promote collaboration and cooperation in the fight against COVID-19: the World Health Organisation maintains a COVID-19 global research database; scientific journals have published explicit calls for teamwork and cooperation (Budd et al., 2020; Chakraborty et al., 2020); in many cases COVID-19-related research has been made freely available to the public and the scientific community; comprehensive datasets have been created and shared; and reports from the International Chamber of Commerce (ICC) and the Organisation for Economic Co-operation and Development (OECD) have argued for international and multidisciplinary collaboration in the response to the pandemic.

Although early studies have found that the pandemic has generated a significant degree of novel collaboration (Liu et al., 2020; Porter and Hook, 2020), other research has suggested that COVID-19 research has been less internationally collaborative than expected, compared with research from the years immediately prior to the pandemic (Fry et al., 2020; Porter and Hook, 2020). There is also some evidence that COVID-19 teams have been smaller than their pre-2020 counterparts (Cai et al., 2021; Fry et al., 2020). Thus, despite calls for greater collaboration, the evidence points to less collaboration in COVID-19-related research, perhaps due to the startup and coordination costs associated with multidisciplinary research (Cai et al., 2021; Fry et al., 2020; Porter and Hook, 2020) combined with the urgency of the response to the pandemic.

In this study we evaluate the scale and nature of collaboration in COVID-19 research during 2020, using scientometric analysis techniques to analyse COVID and non-COVID publications before (non-COVID) and during (COVID and non-COVID) the pandemic. We determine the nature of collaboration in these datasets using three different collaboration measures: (i) the Collaboration Index (CI) (Youngblood and Lahti, 2018), to estimate the degree of collaboration in a body of research; (ii) author multidisciplinarity to estimate the rate at which authors publish in different disciplines; and (iii) team multidisciplinarity to estimate subject diversity across research teams. We find a lower CI for COVID-related research teams, despite an increasing CI trend for non-COVID work, before and during the pandemic, but COVID-related research is associated with higher author multidisciplinarity and more diverse research teams. This research can help us to better understand the nature of the research that has been conducted under pandemic conditions, which may be useful when it comes to coordinating similar large-scale initiatives in the future. Moreover, we develop a number of techniques for exploring the nature of collaborative research, which we believe will be of general interest to academics, research institutions, and funding agencies.

Methods

In this section we describe our methods for evaluating scientific collaboration in COVID-19 research. We describe the data that we use throughout our analysis, and we outline three approaches used to evaluate collaboration activity.

Datasets

The COVID-19 Open Research Dataset (CORD-19) (Lu Wang et al., 2020) comprises more than 400,000 scholarly articles, including over 150,000 with full text, all related to COVID-19, SARS-CoV-2, and similar coronaviruses. CORD-19 papers are sourced from PubMed, PubMed Central, bioRxiv, medRxiv, arXiv, and the World Health Organisation’s COVID-19 database. We generate a set of COVID-19-related research by excluding articles dated prior to 2020. The resulting dataset contains CORD-19 metadata for 166,356 research papers containing the terms "COVID", "COVID-19", "Coronavirus", "Corona virus", "2019-nCoV", "SARS-CoV", "MERS-CoV", "Severe Acute Respiratory Syndrome" or "Middle East Respiratory Syndrome". We supplement this metadata with bibliographic information from the Microsoft Academic Graph (MAG) (Sinha et al., 2015).

Notably, we use the MAG fields of study (FoS) to categorise research papers. The MAG uses hierarchical topic modelling to identify and assign research topics to individual papers, each of which represents a specific field of study. To date, this approach has identified a hierarchy of over 700,000 topics within the Microsoft Academic Knowledge corpus. In our dataset of 166,356 COVID-19 research articles, the average paper is associated with 9 FoS from different levels in this hierarchy and in total, 65,427 unique fields are represented. To produce a more useful categorisation of articles, we first reduce the number of topics by replacing each field with its parent and then consider topics at two levels in the FoS hierarchy: (i) the 19 FoS at level 0, which we refer to as ‘disciplines’, and (ii) the 292 FoS at level 1, which we refer to as ‘sub-disciplines’. In this way, each article is associated with a set of disciplines (e.g. ‘Medicine’, ‘Physics’, ‘Engineering’) and sub-disciplines (e.g. ‘Virology’, ‘Particle Physics’, ‘Electronic Engineering’), which are identified by traversing the FoS hierarchy from the fields originally assigned to the paper.
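As an illustration of the traversal described above, the following minimal sketch walks from the fields assigned to a paper up to their ancestors at a chosen level. The `PARENTS` and `LEVEL` tables are a hypothetical toy hierarchy invented for this example, not MAG data, and the function name is our own.

```python
# Hypothetical toy slice of a field-of-study hierarchy (not real MAG data).
# PARENTS maps each field to its parent fields; LEVEL gives its depth.
PARENTS = {
    "Coronavirus": {"Virology"},
    "Virology": {"Medicine", "Biology"},
    "Convolutional neural network": {"Machine Learning"},
    "Machine Learning": {"Computer Science"},
}
LEVEL = {
    "Medicine": 0, "Biology": 0, "Computer Science": 0,
    "Virology": 1, "Machine Learning": 1,
    "Coronavirus": 2, "Convolutional neural network": 2,
}


def ancestors_at_level(fields, level):
    """Return every field at `level` reachable by following parent links."""
    found, seen = set(), set()
    stack = list(fields)
    while stack:
        field = stack.pop()
        if field in seen:
            continue
        seen.add(field)
        if LEVEL.get(field) == level:
            found.add(field)
        # Keep climbing: fields without a PARENTS entry are treated as roots.
        stack.extend(PARENTS.get(field, ()))
    return found


paper_fields = {"Coronavirus", "Convolutional neural network"}
disciplines = ancestors_at_level(paper_fields, 0)      # level 0
sub_disciplines = ancestors_at_level(paper_fields, 1)  # level 1
```

Because a field may have more than one parent in this toy table, a single paper can map to several disciplines, which is consistent with the overlapping communities used later in the analysis.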

We further extend this dataset with any additional research published by the authors in the COVID-related dataset. Thus, for each author, we include MAG metadata from any available articles dated after 2015. The final dataset consists of metadata for 5,389,445 research papers, which we divide into three distinct groups as follows; see Table 1 with further detail provided in the Supplementary materials that accompany this article (Supplementary Tables 1–3).

1. 2020-COVID-related research: the 166,356 COVID-related articles published during the pandemic (2020);
2. Pre-2020 research: 4,017,655 non-COVID-related articles published before the pandemic, that is during 2016–2019, inclusive;
3. 2020-non-COVID research: 1,205,434 non-COVID-related articles published during the pandemic period and which are not in the CORD dataset.

Collaboration Index

The Annual Collaboration Index (CI) is defined, for a body of work, as the ratio of the number of authors of co-authored articles to the total number of co-authored articles (Youngblood and Lahti, 2018). Since larger (more collaborative) teams have been shown to be more successful than smaller teams (Klug and Bagrow, 2014; Lariviere et al., 2014; Leahey, 2016), we can use CI to compare COVID-related research to non-COVID baselines. However, CI is sensitive to the total number of articles in the corpus. Therefore, to address this bias and facilitate comparison across our COVID and non-COVID baselines, we generate a CI distribution for each dataset by re-sampling 50,000 papers 1000 times, without replacement, from each year, and we calculate the sample distribution for these CI values for each year in our dataset.
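The definition and re-sampling procedure above can be sketched as follows. This is a minimal illustration, assuming each paper is represented only by its number of authors; the function names, the seed, and the toy data are hypothetical and chosen for the example.

```python
import random
from statistics import mean, stdev


def collaboration_index(author_counts):
    """Authors of co-authored papers divided by the number of co-authored papers."""
    co_authored = [n for n in author_counts if n > 1]
    if not co_authored:
        raise ValueError("no co-authored papers in this body of work")
    return sum(co_authored) / len(co_authored)


def resampled_ci(author_counts, sample_size, n_samples, seed=0):
    """CI values for repeated samples drawn without replacement."""
    rng = random.Random(seed)
    return [
        collaboration_index(rng.sample(author_counts, sample_size))
        for _ in range(n_samples)
    ]


# Toy corpus: author counts for 310 papers (10 single-author papers).
toy_year = [2, 3, 4] * 100 + [1] * 10
samples = resampled_ci(toy_year, sample_size=50, n_samples=20, seed=1)
summary = (mean(samples), stdev(samples))
```

In the study the same idea is applied with 50,000 papers per sample and 1000 samples per year; the mean and standard deviation of the resulting values are what allow one corpus to be placed a number of standard deviations above or below another.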

Author multidisciplinarity

To evaluate the multidisciplinarity of individual authors, we consider the extent to which they publish across multiple disciplines, based on a network representation of their publications. An un-weighted bipartite network, populated by research fields and authors, links researchers to subjects (that is, based on the subjects of their publications). A projection of this network produces a dense graph of the 292 sub-disciplines at level 1 in the MAG FoS hierarchy, in which two sub-disciplines/fields are linked if an author has published work in both. We refer to this projection as a field of study network. In such a network, the edges between fields are weighted according to the number of authors publishing in both fields. Due to the large number of researchers, and the relatively small number of sub-disciplines, the resulting graph is almost fully connected. Thus, the edge weights are an important way to distinguish between edges. Using the MAG FoS hierarchy, we divide the network nodes into 19 overlapping “communities”, based on their assignment to level 0 fields of study. This facilitates the characterisation of the edges in the graph: an edge within a community represents an author publishing in two sub-disciplines within the same parent discipline, while an edge between communities represents an author publishing in two sub-disciplines from different parent disciplines. For example, if an author publishes research in ‘Machine Learning’ and ‘Databases’, the resulting edge is considered to be within the community/discipline of ‘Computer Science’. Conversely, if an author publishes in ‘Machine Learning’ and ‘Radiography’, the resulting edge is considered to be between the ‘Medicine’ and ‘Computer Science’ communities. An edge between disciplines may represent either a single piece of interdisciplinary research or an author publishing separate pieces of research in two different disciplines.

To evaluate the effect of COVID-19 on author multidisciplinarity, we produce a field of study network for each year in our dataset and calculate the proportion of the total edge weights that exist between communities. In the special case of 2020 we also explore this proportion for non-COVID research (i.e., after we remove COVID-19 research from the graph).
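The projection and the between-community proportion can be sketched as below. This is a simplified illustration on toy data: the function names are our own, and because communities overlap we assume that an edge counts as within a community whenever its two sub-disciplines share at least one parent discipline. The text does not spell out how such overlaps are resolved, so that rule is an assumption.

```python
from collections import Counter
from itertools import combinations


def field_network(author_fields):
    """Project author -> sub-disciplines onto weighted sub-discipline pairs.

    The weight of an edge is the number of authors publishing in both fields.
    """
    weights = Counter()
    for fields in author_fields.values():
        for a, b in combinations(sorted(fields), 2):
            weights[(a, b)] += 1
    return weights


def between_community_share(weights, parents):
    """Share of total edge weight joining fields with no common parent.

    ASSUMPTION: sharing any level-0 parent makes an edge 'within' a community.
    """
    total = sum(weights.values())
    between = sum(
        w for (a, b), w in weights.items() if not (parents[a] & parents[b])
    )
    return between / total


# Toy data mirroring the example in the text.
parents = {
    "Machine Learning": {"Computer Science"},
    "Databases": {"Computer Science"},
    "Radiography": {"Medicine"},
}
author_fields = {
    "author_1": {"Machine Learning", "Databases"},
    "author_2": {"Machine Learning", "Radiography"},
    "author_3": {"Machine Learning", "Databases", "Radiography"},
}
network = field_network(author_fields)
share = between_community_share(network, parents)
```

Here three of the five units of edge weight join ‘Computer Science’ sub-disciplines to ‘Radiography’, so the between-community share is 0.6.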

Research team disciplinary diversity

In addition to author multidisciplinarity, we also consider the multidisciplinarity of the research teams, by calculating their disciplinary diversity. To do this we compare the research backgrounds of different authors using publication vectors based on the proportions of a researcher’s work published across different fields (Feng and Kirkley, 2020). Specifically, we construct publication vectors for authors in our dataset using the 19 MAG disciplines. Thus, an author’s publication vector is a 19-dimensional vector, with each value indicating the proportion of the author’s research published in the corresponding domain. For example, an author who has 50 publications classified under ‘Computer Science’, 30 publications classified under ‘Mathematics’, and 20 publications classified under ‘Biology’ would have a publication vector with values {0.5, 0.3, 0.2} for the entries corresponding to these disciplines respectively, and zeros elsewhere. By using publication vectors to represent an individual’s research profile, we can quantify the disciplinary diversity of a research team using Eq. (1) from Feng and Kirkley (2020).

Note that in Eq. (1), |p| refers to the size of the research team and S_ij is the cosine similarity of the publication vectors for authors i and j. The team research similarity score for an article is a normalised sum of the pairwise cosine similarities for all authors of the article. In cases where we find no available research for a particular author, that author is excluded from the disciplinary similarity calculation. That is, they contribute no publication vector and the disciplinary similarity score is normalised according to an updated team size which excludes that author.
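Eq. (1) itself is not reproduced in this text. One form consistent with the description above, assuming that the normalisation is the number of distinct author pairs (so that the score is the mean pairwise cosine similarity), would be the following; the vector symbols are our own notation and the exact normalisation should be checked against Feng and Kirkley (2020).

```latex
S^{\mathrm{team}}_{p}
  = \frac{2}{|p|\,(|p|-1)} \sum_{\substack{i,j \in p \\ i<j}} S_{ij},
\qquad
S_{ij} = \frac{\mathbf{v}_i \cdot \mathbf{v}_j}
              {\lVert \mathbf{v}_i \rVert \, \lVert \mathbf{v}_j \rVert}
```

Here v_i denotes the publication vector of author i, and the sum runs over each unordered pair of authors of paper p once.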

To evaluate research team disciplinary diversity, we compute the teams’ disciplinary similarity based on publication vectors from pre-2020 research, and we report 1 − S_team as the teams’ diversity. The year of the paper is excluded from the publication vector to avoid reducing team diversity with the common publication. As such, team disciplinary diversities for COVID-related research (and non-COVID research from 2020) are calculated from publication vectors which exclude work from 2020. We compare these scores with disciplinary diversity scores for research in 2019 when, similarly, the publication vectors exclude work from 2019 and 2020. As the potential for disciplinary diversity in research teams is limited by the number of team members, we compare diversity by team size.
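A minimal sketch of the diversity calculation is given below. It assumes that the team similarity is the mean cosine similarity over distinct author pairs, which is one reading of "normalised sum" rather than a confirmed reproduction of Eq. (1). The function names are hypothetical, and a three-discipline list stands in for the 19 MAG disciplines.

```python
import math
from itertools import combinations


def publication_vector(counts, disciplines):
    """Proportion of an author's publications in each discipline."""
    total = sum(counts.get(d, 0) for d in disciplines)
    return [counts.get(d, 0) / total for d in disciplines]


def cosine(u, v):
    """Cosine similarity of two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def team_diversity(vectors):
    """1 minus the mean pairwise similarity (ASSUMED normalisation).

    Authors with no available prior research are passed as None and are
    dropped, so the normalisation uses the reduced team size.
    """
    known = [v for v in vectors if v is not None]
    if len(known) < 2:
        return None  # diversity is undefined for fewer than two profiles
    pairs = list(combinations(known, 2))
    similarity = sum(cosine(u, v) for u, v in pairs) / len(pairs)
    return 1 - similarity


DISCIPLINES = ["Computer Science", "Mathematics", "Biology"]  # stand-in list
author = publication_vector(
    {"Computer Science": 50, "Mathematics": 30, "Biology": 20}, DISCIPLINES
)
mixed_team = team_diversity([[1, 0, 0], [0, 1, 0]])
uniform_team = team_diversity([[1, 0, 0], [1, 0, 0], None])
```

A team whose members have entirely disjoint backgrounds scores a diversity of 1, while a team of identical profiles scores 0.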

Case studies of multidisciplinarity in COVID-19 research

The field of study network structure used to calculate author multidisciplinarity encodes relationships between fields of study, with respect to the authors who publish in them. Since these relationships are altered in COVID-related research, we propose a modified network structure to explore the changes to these relationships visually, and to highlight interesting case studies of multidisciplinary research in the COVID-19 literature. In this modified network structure, COVID-related research articles contribute directed edges (SD_A, SD_B) to the graph, for all sub-disciplines SD_A in which the authors publish in their pre-2020 work, and all sub-disciplines SD_B which relate to the article. For example, an edge between the pair of sub-disciplines ‘Machine Learning’ and ‘Radiology’ represents an author who published in the field of ‘Machine Learning’ in their pre-2020 work (2016–2019), publishing COVID-19 research in the field of ‘Radiology’. We produce networks of this structure from different subsets of COVID-related research articles, which we will visualise using flow diagrams, where the pre-2020 sub-disciplines are on the left and the COVID-related disciplines are on the right.
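The construction of these directed edges can be sketched as follows. This is an illustrative outline on toy data: the function and variable names are hypothetical, edges are weighted by the number of distinct contributing authors, and the `top_k` parameter reflects the choice, described in the Discussion, to display only the 50 heaviest edges.

```python
from collections import defaultdict


def flow_edges(papers, background, top_k=50):
    """Directed (pre-2020 sub-discipline -> article sub-discipline) edges.

    papers: iterable of (authors, article_sub_disciplines) pairs.
    background: author -> set of sub-disciplines from their pre-2020 work.
    Returns the top_k edges as ((source, target), weight), heaviest first.
    """
    contributors = defaultdict(set)
    for authors, targets in papers:
        for author in authors:
            # Authors with no known pre-2020 work contribute no edges.
            for source in background.get(author, ()):
                for target in targets:
                    contributors[(source, target)].add(author)
    ranked = sorted(contributors.items(), key=lambda kv: (-len(kv[1]), kv[0]))
    return [(edge, len(who)) for edge, who in ranked[:top_k]]


# Toy data: two authors and two COVID-related articles.
background = {
    "ann": {"Machine Learning"},
    "bob": {"Machine Learning", "Virology"},
}
papers = [
    (["ann", "bob"], {"Radiology"}),
    (["ann"], {"Radiology"}),
]
edges = flow_edges(papers, background)
```

The resulting list of weighted source and target pairs is the kind of input a flow diagram needs, with sources drawn on the left and targets on the right.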

Results

Research team size and Collaboration Index

Figure 1 reports the mean Collaboration Index for the samples of 50,000 research papers taken from each year in the dataset. Mean values for samples of COVID-19 research articles are also included. The Collaboration Index increases year-on-year, indicating a move towards larger research teams. This trend has been noted across many disciplines of academic research (Lariviere et al., 2014; Leahey, 2016; Porter and Rafols, 2008).

Fig. 1: 1000 samples are taken from each year (2016–2020). The Collaboration Index increases annually (r² = 0.94), and the CI for COVID-19 articles is significantly less than the CI associated with non-COVID 2020 research; in fact the mean COVID-19 CI is 25 standard deviations below the mean of non-COVID samples taken from 2020. Thus, research teams publishing COVID-19 research are significantly smaller than expected for research teams in 2020 containing the same authors.

COVID-19 research presents with a very different CI (~5.6), however, indicating that COVID-19 research teams are significantly smaller than expected for research conducted by the same authors in 2020. This result is robust with respect to re-sampling size and in Supplementary materials that accompany this article (see Supplementary Fig. 1) we report comparable results using sample sizes n = 10,000 and n = 100,000.

Author multidisciplinary publication

We quantify author multidisciplinarity in a year of research by measuring the proportion of the total number of edges in an author-FoS network that are between communities (i.e., disciplines). We find that this proportion is increasing slowly over time when we produce FoS networks for each year in our data. Figure 2 reports the odds ratio effect size when the proportion of the edges that are between communities in a given year is compared with that of the previous year. These scores are reported for each community and for the entire network. The proportion of external edges in the entire network is shown to increase significantly each year, with the greatest increase coming in 2020. In the case of 2020 we also report the odds ratio achieved when we compare 2019 with 2020-non-COVID research, i.e., after we remove COVID-19 research from the graph. Figure 2 shows a significant increase in multidisciplinary publication in 2020 across almost all disciplines. The increase in author multidisciplinarity is much greater when we include COVID-19 research in the graph. Despite representing <20% of the work published in 2020, COVID-19 research contributes greatly to the proportion of inter-disciplinary edges in the FoS network.

Fig. 2: A score of 1 indicates that authors are no more likely to publish in other disciplines than they were in the previous year. Error bars are used to plot a 95% confidence interval and solid points indicate a statistically significant increase in interdisciplinary publication (p < 0.05) according to Fisher’s Exact test.
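To make the odds ratio comparison concrete, the sketch below computes it from a 2×2 table of between-community and within-community edge counts for two years. The interval shown is the standard Wald (log odds ratio) approximation, which we substitute here for illustration; the text does not state how the plotted intervals were obtained, and significance in the study is assessed with Fisher's Exact test, which this sketch does not implement. All counts are invented.

```python
import math


def odds_ratio_ci(between_new, within_new, between_old, within_old, z=1.96):
    """Odds ratio of between-community edges (new year vs. previous year).

    Returns (odds_ratio, lower, upper), where the bounds are an approximate
    95% Wald interval on the log odds ratio. This is an ASSUMED stand-in
    for whatever interval method the original figure used.
    """
    odds_ratio = (between_new * within_old) / (within_new * between_old)
    std_err = math.sqrt(
        1 / between_new + 1 / within_new + 1 / between_old + 1 / within_old
    )
    log_or = math.log(odds_ratio)
    return odds_ratio, math.exp(log_or - z * std_err), math.exp(log_or + z * std_err)


# Invented counts: 30 of 100 edges are between communities in the new year,
# compared with 20 of 100 in the previous year.
ratio, lower, upper = odds_ratio_ci(30, 70, 20, 80)
unchanged, _, _ = odds_ratio_ci(20, 80, 20, 80)
```

An odds ratio of 1, as in the `unchanged` case, corresponds to no change in the likelihood of publishing across disciplines, while values above 1 indicate an increase.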

Research team disciplinary diversity

When we compare authors by their publication backgrounds, encoded as publication vectors, we find COVID-19 research teams to be more diverse than equivalently-sized research teams who published before 2020. Figure 3 presents the relative increase in mean research team disciplinary diversity for different team sizes, when research teams from 2020 are compared with teams from 2019. We divide 2020 research into two sets: (i) 2020-COVID-related; (ii) 2020-non-COVID research and report relative increases in team diversity for each set. Independent t tests show COVID-19 research teams to be significantly more diverse than both pre-2020 and 2020-non-COVID research teams of the same size (p < 0.01, see Supplementary Table 5).
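The comparison by team size can be sketched as a grouped mean followed by a relative change. The example below is illustrative only: the function names and diversity scores are invented, and it does not include the t-tests used in the study.

```python
from collections import defaultdict
from statistics import mean


def mean_by_team_size(records):
    """records: iterable of (team_size, diversity) pairs."""
    groups = defaultdict(list)
    for size, diversity in records:
        groups[size].append(diversity)
    return {size: mean(values) for size, values in groups.items()}


def relative_increase(baseline, comparison):
    """Relative change in mean diversity for team sizes present in both sets."""
    base = mean_by_team_size(baseline)
    comp = mean_by_team_size(comparison)
    return {
        size: (comp[size] - base[size]) / base[size]
        for size in sorted(base.keys() & comp.keys())
        if base[size] > 0  # skip sizes where the ratio is undefined
    }


# Invented scores: (team size, diversity) for a baseline and a comparison set.
teams_2019 = [(2, 0.2), (2, 0.4), (3, 0.5)]
teams_covid = [(2, 0.45), (3, 0.5), (4, 0.9)]
increase = relative_increase(teams_2019, teams_covid)
```

Team sizes that appear in only one of the two sets, such as size 4 here, are left out, since there is no equivalent team to compare against.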

Discussion

Despite the recent trend towards larger, more collaborative research teams (Feng and Kirkley, 2020; Lariviere et al., 2014; Leahey, 2016; Porter and Rafols, 2008), COVID-19 research appears to have significantly fewer authors than other publications by the same researchers, during 2020. This may be a concerning finding amid evidence that larger teams produce more impactful scientific research (Lariviere et al., 2014): it may have limited the value of the research produced, notwithstanding the incredible achievements that have been made, or it may be a reality of working under the constraints of a global pandemic. We do see some examples of larger teams and their greater potential for research impact in our analysis: 20% of COVID-19 research papers have more than 8 listed authors and this portion of the dataset accounts for over 60 of the 100 most cited publications relating to the coronavirus. Yet, the majority of COVID-19 research papers (53%) have 4 or fewer authors. We find no evidence that the reduced Collaboration Index of COVID-19 research is due to working conditions and restrictions during the pandemic. Despite a global shift towards remote work, research in 2020 continues the recent trend of increasing collaboration. The preference for smaller research teams appears to be specific to COVID-19 research and not simply a factor of research during COVID-19.

The prevalence of smaller research teams is important to understand about COVID-19 research. Smaller teams have been shown to play a different role to larger teams in both research and technology (Wu et al., 2019). In an analysis of research collaborations, Wu et al. show that small research teams can disrupt science and technology by exploring and amplifying promising ideas from older and less-popular work, while large teams develop on recent successes by solving acknowledged problems (Wu et al., 2019). The definition by Wu et al. of disruptive articles relates closely to the metric of betweenness centrality for citation networks. That is, disruptive papers can connect otherwise separate communities in a research network. We find some evidence that COVID-19 research may be increasing the connectivity between disciplines, as authors are more likely to publish across multiple fields and research teams are more diverse. A trend towards greater levels of multidisciplinary collaboration has been identified in many scientific disciplines (Porter and Rafols, 2008). This trend is evident in the non-COVID-19 portions of our dataset. Research teams of fewer than 10 members publishing in 2020 exhibit greater disciplinary diversity than similarly-sized teams publishing in 2019, for example. Likewise, the number of authors publishing in multiple disciplines is increasing steadily year-on-year. In COVID-19 research, the increase in multidisciplinarity (of both teams and individuals) exceeds the established trend. This may be evidence of the disruptive nature of COVID-related research. Below, we use flow diagrams to explore author multidisciplinarity in specific topics in the COVID-related research dataset.

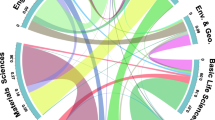

Figures 4–7 present four selected case studies of author multidisciplinarity in COVID-related research in 2020. To provide a clear visualisation of the strongest trends that exist, each FoS network shows only the 50 edges with the greatest weights. We chose Virology as a case study because it is the largest subset in COVID-related research, Computer Science and Materials Science because they show considerable increases in author multidisciplinarity in 2020 (see Fig. 2), and Development Economics because it presents a very diverse set of contributing disciplines. For example, Figure 4 shows the intersection between Medicine, Biology and Chemistry in COVID-19 research relating to Virology. Sub-disciplines Molecular Biology, Biochemistry, Immunology, and Virology all appear closely related in this graph. They are strongly interconnected, indicating many instances of authors publishing between disciplines, and each acts as both a source and as a destination in the network, as authors who publish in any of these sub-disciplines prior to COVID-19 are likely to publish in the others during COVID-19. Figure 5 illustrates the multidisciplinary nature of Computer Science research in COVID-19. Unlike the Virology graph in Fig. 4, there are only two destinations in this network: Computer Science and Medicine. Computer Science research in the COVID-19 dataset is primarily focused on Machine Learning solutions to automating COVID-19 detection from medical images (Nguyen et al., 2021) (see Supplementary Table 7(a)). This effort is evident in the graph, as Computer Science research in COVID-19 is most commonly characterised within the sub-disciplines Machine Learning, Artificial Intelligence, Pathology, Surgery and Algorithm. Also evident is the multidisciplinary nature of the effort, as researchers with backgrounds in many of the STEM fields are shown to contribute. Figure 6 reports the FoS network for COVID-19 research relating to Materials Science. The graph illustrates an intersection between the fields of Physics, Chemistry, Engineering and Materials Science, as researchers from each of these disciplines contribute to coronavirus research. Many of the most cited articles in this subset relate to airborne particles and the efficacy of face masks (Lustig et al., 2020), along with the use of electrochemical biosensors for pathogen detection (Cesewski and Johnson, 2020) (see Supplementary Table 7(a)). Figure 7 presents the FoS network for the COVID-19-related research papers in the field of Development Economics. Some of the most cited articles in this subset concern studies of the socio-economic implications and effects of the pandemic globally (Nicola et al., 2020; Walker et al., 2020), and of health inequity in low- and middle-income countries (Patel et al., 2020; Wang and Tang, 2020) (see Supplementary Table 8(a)). Research in this subset is characterised by the diverse set of sub-disciplines shown on the left of the figure, as authors with backgrounds in social science, social psychology, medicine, statistics, economics, and biology are all found to contribute.

Fig. 4: The graph relates an author’s research background to the fields in which they publish COVID-related articles. This network is produced from 22,561 COVID-related research papers which were assigned the MAG field ‘Virology’. Pre-COVID sub-disciplines (common in the research backgrounds of the authors) are shown on the left and COVID-related sub-disciplines (common in the article subset) are shown on the right. Sub-disciplines are coloured by their parent disciplines and edges are assigned the colour of the pre-2020 node. Edges are weighted by the numbers of authors who published in both of the corresponding sub-disciplines. The bi-gram terms which occurred most frequently in the titles of these papers were: COVID-19 pandemic, coronavirus disease, SARS-CoV-2 infection and novel coronavirus (see Supplementary Table 6).

Fig. 5: This network is produced from 9004 COVID-related research papers which were attributed the MAG field ‘Computer Science’. The bi-gram terms which occurred most frequently in the titles of these papers were: COVID-19 pandemic, deep learning, neural network, machine learning, contact tracing and chest x-ray. *The MAG sub-discipline ‘Algorithm’ is a level 1 parent for any algorithms identified in the fields of study. The most frequently occurring children of the Algorithm field in this subset are ‘artificial neural network’, ‘cluster analysis’, ‘inference’, and ‘support vector machine’ (see Supplementary Table 7).

Fig. 6: This network is produced from 1229 COVID-related research papers which were attributed the MAG field ‘Materials science’. The bi-gram terms which occurred most frequently in the titles of these papers were: filtration efficiency, additive manufacturing, and face mask (see Supplementary Table 8).

Fig. 7: The graph relates an author’s research background to the fields in which they publish COVID-related articles. This network is produced from 1564 COVID-related research papers which were attributed the MAG field ‘Development Economics’. The most cited articles in this subset concern studies of the socio-economic implications and effects of the pandemic globally (Nicola et al., 2020; Walker et al., 2020), and of health inequity in low- and middle-income countries (Patel et al., 2020; Wang and Tang, 2020) (see Supplementary Table 9).

The methods outlined in this work could be applied in future scientometric analyses to assess and visualise multidisciplinarity in a body of research. This may be of interest to researchers seeking to understand the evolution of their own field of study, or to funding agencies who recognise the established benefits of multidisciplinary collaboration. In the case of this work, we show COVID-19 research teams to be smaller yet more multidisciplinary than non-COVID-19 teams. It is suggested in early work that authors publishing COVID-19 research favoured smaller, less international collaborations in order to reduce co-ordination costs and contribute to the public health effort sooner (Fry et al., 2020). We would like to elaborate on this characterisation of collaboration in COVID-19 research; adding that authors sought to minimise the limitations of working in smaller teams by collaborating with scientists from diverse research backgrounds. That is to say, in the urgency of the pandemic, scientists favour smaller, more multidisciplinary research teams in order to collaborate more efficiently.

Data availability

The data used in our study can be reproduced from the set of Microsoft Academic Graph article IDs available at https://doi.org/10.7910/DVN/ACSGKS.

Change history

08 November 2021

A Correction to this paper has been published: https://doi.org/10.1057/s41599-021-00957-w

References

Allen Institute (2021) Allen Institute for A.I. https://allenai.org. Accessed 16 Sep 2021

Budd J et al. (2020) Communication, collaboration and cooperation can stop the 2019 coronavirus. Nat Med 26(2):151–151

Cai X, Fry CV, Wagner CS (2021) International collaboration during the COVID-19 crisis: Autumn 2020 developments. Scientometrics 126(4):3683–3692

Cesewski E, Johnson BN (2020) Electrochemical biosensors for pathogen detection. Biosens Bioelectron 159:112214

Chakraborty C et al. (2020) Extensive partnership, collaboration, and teamwork is required to stop the COVID-19 outbreak. Arch Med Res 51(7):728–730

Feng S, Kirkley A (2020) Mixing patterns in interdisciplinary co-authorship networks at multiple scales. Sci Rep 10(1):1–11

Fry CV et al. (2020) Consolidation in a crisis: patterns of international collaboration in early COVID-19 research. PLoS ONE 15(7):e0236307–e0236307

Klug M, Bagrow JP (2016) Understanding the group dynamics and success of teams. Royal Soc Open Sci 3(4):160007

Larivière V, Haustein S, Börner K (2015) Long-distance interdisciplinarity leads to higher scientific impact. PLoS ONE 10(3):e0122565–e0122565

Larivière V et al. (2015) Team size matters: Collaboration and scientific impact since 1900. J Assoc Info Sci Technol 66(7):1323–1332

Leahey E (2016) From sole investigator to team scientist: trends in the practice and study of research collaboration. Annu Rev Sociol 42(1):81–100

Liu M et al. (2020) Can pandemics transform scientific novelty? Evidence from COVID-19. CoRR https://arxiv.org/abs/2009.12500

Lu Wang L et al. (2020) CORD-19: the Covid-19 open research dataset. Preprint at https://arxiv.org/abs/2004.10706v2. https://pubmed.ncbi.nlm.nih.gov/32510522

Lustig S et al. (2020) Effectiveness of common fabrics to block aqueous aerosols of COVID virus-like nanoparticles. ACS Nano 14(6):7651–7658

Nguyen TT et al. (2021) Artificial intelligence in the battle against coronavirus (COVID-19): a survey and future research directions. Preprint at https://arxiv.org/abs/2008.07343

Nicola M et al. (2020) The socio-economic implications of the coronavirus pandemic (COVID-19): a review. Int J Surg 78:185–193

Okamura K (2019) Interdisciplinarity revisited: evidence for research impact and dynamism. Palgrave Commun 5(1):141

Patel JA et al. (2020) Poverty, inequality and COVID-19: the forgotten vulnerable. Public Health 183:110–111

Porter A, Ràfols I (2008) Is science becoming more interdisciplinary? Measuring and mapping six research fields over time. Scientometrics 81:719–745

Porter SJ, Hook DW (2020) How COVID-19 is changing research culture. Digital Science, London

Sinha A et al. (2015) An overview of Microsoft Academic Service (MAS) and applications. In: (ed Gangemi A) Proceedings of the 24th international conference on World Wide Web. Association for Computing Machinery, New York, pp. 243–246, https://books.google.ie/books/about/Proceedings_of_the_24th_International_Co.html?id=xvFxAQAACAAJ&redir_esc=y

Walker PGT et al. (2020) The impact of COVID-19 and strategies for mitigation and suppression in low- and middle-income countries. Science 369(6502):413–422

Wang Z, Tang K (2020) Combating COVID-19: health equity matters. Nat Med 26(4):458–458

WHO (2021) World Health Organization. https://who.int. Accessed 16 Sep 2021

Wu L, Wang D, Evans JA (2019) Large teams develop and small teams disrupt science and technology. Nature 566(7744):378–382

Wuchty S, Jones B, Uzzi B (2007) The increasing dominance of teams in production of knowledge. Science 316:1036–9

Youngblood M, Lahti D (2018) A bibliometric analysis of the interdisciplinary field of cultural evolution. Palgrave Commun 4:1–9

Acknowledgements

This research was supported by Science Foundation Ireland (SFI) under Grant Number SFI/12/RC/2289_P2.

Ethics declarations

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Cunningham, E., Smyth, B. & Greene, D. Collaboration in the time of COVID: a scientometric analysis of multidisciplinary SARS-CoV-2 research. Humanit Soc Sci Commun 8, 240 (2021). https://doi.org/10.1057/s41599-021-00922-7

DOI: https://doi.org/10.1057/s41599-021-00922-7
