Peer Reviewed

The battleground of COVID-19 vaccine misinformation on Facebook: Fact checkers vs. misinformation spreaders

Our study examines Facebook posts containing nine prominent COVID-19 vaccine misinformation topics that circulated on the platform between March 1st, 2020 and March 1st, 2021. We first identify misinformation spreaders and fact checkers,[1] further dividing the latter group into those who repeat misinformation to debunk the false claim and those who share correct information without repeating the misinformation. Our analysis shows that, on Facebook, there are almost as many fact checkers as misinformation spreaders. In particular, fact checkers’ posts that repeat the original misinformation received significantly more comments than posts from misinformation spreaders. However, we found that misinformation spreaders were far more likely to take on central positions in the misinformation URL co-sharing network than fact checkers. This demonstrates the remarkable ability of misinformation spreaders to coordinate communication strategies across topics.

[1] A fact checker in our study is defined as any public account (including both individual and organizational accounts) that posts factual information about COVID-19 vaccine or posts debunking information about COVID-19 vaccine misinformation.

Research Questions

- What types of information sources discuss COVID-19 vaccine-related misinformation on Facebook?

- How do Facebook users react to different types of Facebook posts containing COVID-19 vaccine misinformation in terms of behavioral engagement and emotional responses?

- How do these sources differ in terms of co-sharing URLs?

Essay Summary

- This study used social network analysis and ANOVA tests to analyze English-language posts on public Facebook accounts that mentioned COVID-19 vaccine misinformation between March 1st, 2020 and March 1st, 2021, along with user reactions to such posts.

- Our analysis found that approximately half of the posts (46.6%) that discussed COVID-19 vaccines were misinformation, and the other half (47.4%) were fact-checking posts. Of the fact-checking posts, 28.5% repeated the original false claim within their correction, while 18.9% listed facts without misinformation repetition.

- Additionally, we found that people were more likely to comment on fact-checking posts that repeated the original false claims than other types of posts.

- Fact checkers’ posts were mostly connected with other fact checkers rather than misinformation spreaders.

- The accounts with the largest number of connections, and that were connected with the most diverse contacts, were fake news accounts, Trump-supporting groups, and anti-vaccine groups.

- This study suggests that when public accounts debunk misinformation on social media, repeating the original false claim in their debunking posts can be an effective strategy at least to generate user engagement.

- Organizational and individual fact checkers need to strategically coordinate their actions, diversify connections, and occupy more central positions in the URL co-sharing networks. They can achieve such goals through network intervention strategies such as promoting similar URLs as a fact checker community.

Implications

Overview

The spread of misinformation on social media has been identified as a major threat to public health, particularly in the uptake of COVID-19 vaccines (Burki, 2020; Loomba et al., 2021). The World Health Organization warned that the “infodemic,”[2] that is, the massive dissemination of false information, is one of the “most concerning” challenges of our time alongside the pandemic. Rumor-mongering in times of crisis is nothing new. However, social media platforms have exacerbated the problem to a different level. Social media’s network features can easily amplify the voice of conspiracy theorists and give credence to fringe beliefs that would otherwise remain obscure. These platforms can also function as an incubator for anti-vaxxers to circulate ideas and coordinate their offline activities (Wilson & Wiysonge, 2020).

[2] According to the World Health Organization (2020), an infodemic is “an overabundance of information, both online and offline. It includes deliberate attempts to disseminate wrong information to undermine the public health response and advance alternative agendas of groups or individuals.”

Our study addresses a timely public health concern regarding misinformation about the COVID-19 vaccine circulating on social media and provides four major implications that can inform the strategies that fact checkers adopt to combat misinformation. Our findings also have implications for public health authorities and social media platforms in devising intervention strategies. Each of the four implications is discussed below.

Prevalence of COVID-19 misinformation

First, our study confirms the prevalence of COVID-19 vaccine misinformation. Approximately 10% of COVID-19 vaccine-related engagement (e.g., comments, shares, likes) on Facebook is directed at posts containing misinformation. A close look at the posts shared by public accounts containing vaccine misinformation suggests that there are roughly equal numbers of posts spreading misinformation and posts combating such rumors. This finding contrasts with prior research describing a misinformation landscape heavily dominated by anti-vaxxers (Evanega et al., 2020; Shin & Valente, 2020; Song & Gruzd, 2017).[3] This result may be because we focused on popular misinformation narratives that received much attention from fact checkers and health authorities. Additionally, due to social pressure, social media platforms such as Facebook have been taking action to suspend influential accounts that share vaccine-related misinformation. We acknowledge that fact-checking posts do not necessarily translate into better-informed citizens. Prior research points to the limitations of fact-checking in that fact-checking posts are selectively consumed and shared by those who already agree with the post (Brandtzaeg et al., 2018; Shin & Thorson, 2017). Thus, more efforts should be directed towards reaching a wider audience and moving beyond preaching to the choir. Nonetheless, our study reveals a silver lining: social media platforms can serve as a battleground for fact checkers and health officials to combat misinformation and share facts. This finding calls for social media platforms and fact checkers to continue their proactive approach by providing regular fact-checking, promoting verified information, and educating the public about public health knowledge.

[3] Our study sample features public accounts. The conditions may differ among private accounts.

Repeater fact checkers are most engaging

Second, our study reveals that, on social media, fact checkers’ posts that repeated the misinformation were significantly more likely to receive comments than the posts about misinformation. It is likely that posts that contain both misinformation and facts are more complex and interesting, and therefore invite audiences to comment on and even discuss the topics with each other. In contrast, one-sided posts, such as pure facts or straightforward misinformation, may provide little room for debates. This finding offers some evidence that fact-checking can be more effective in triggering engagement when it includes the original misinformation. Future research may further examine if better engagement leads to cognitive benefits such as long-term recollection of vaccine facts.

This finding also has implications for fact checkers. One concern for fact checkers has been whether to repeat the original false claim in a correction. Until recently, practitioners were advised not to repeat the false claim due to the fear of backfire effects, whereby exposure to the false claim within the correction inadvertently makes the misconception more familiar and memorable. However, recent studies show that backfire effects are minimal (Ecker et al., 2020; Swire-Thompson et al., 2020). Our analysis, along with other recent studies, suggests that repetition can be used in fact-checking, as long as the false claim is clearly and saliently refuted.

Non-repeater fact checkers’ posts tend to trigger sad reactions

Third, our study finds that posts that provide fact-checking without repeating the original misinformation are most likely to trigger sad reactions. Emotions are an important component of how audiences respond to and process misinformation. Extensive research shows that emotional events are remembered better than neutral events (Scheufele & Krause, 2019; Vosoughi et al., 2018). In addition, the misinformation literature has well documented the interactions between misinformation and emotion. For example, Scheufele and Krause (2019) found that people who felt anger from misinformation were more likely to accept it. Vosoughi et al. (2018) found that misinformation elicited more surprise and attracted more attention than non-misinformation, which may be explained by humans having, potentially, evolutionarily developed an attraction to novelty. Vosoughi et al. (2018) also found that higher sadness responses were associated with truthful information. Our study finds similar results in that non-repeater fact checkers’ posts are significantly more likely to trigger feelings of sadness. One possible explanation is that the sad reaction may be associated with the identities (the type of accounts such as nonprofits, media, etc.) of post providers. Our analysis shows that, among all types of accounts, healthcare organizations and government agencies are most likely to provide fact-checking without repeating the original misinformation. The sadness reaction may be a sign of declining public trust in these institutions or the growing pessimism over the pandemic. Future studies may compare a range of posts provided by healthcare organizations and government agencies to see if their posts generally receive more sad reactions. Overall, since previous studies suggest that negative emotions often lead people’s memories to distort facts (Porter et al., 2010), it is likely that non-repeaters’ posts may not lead to desirable outcomes in the long run.

Taken together, our findings suggest that, on a platform such as Facebook, fact-checking with repetition may be an effective messaging strategy for achieving greater user engagement. Despite the potential to cause confusion, the benefits may outweigh the costs.

Network disparity

Finally, our study finds that, despite the considerable presence of fact checkers in terms of their absolute numbers, misinformation spreaders are much better coordinated and strategic. It is important to note that the spreading and consumption of misinformation is embedded in the complex networks connecting information and users on social media (Budak et al., 2011). URLs are often incorporated into Facebook posts to provide in-depth information or further evidence to support post providers’ views. It is a way for partisans or core community members to express their partisanship and promote their affiliated groups or communities. The structure of the URL network is instrumental for building the information warehouses that power selective information sharing.

We find that those public accounts[4] that spread misinformation display a strong community structure, likely driven by common interests or shared ideologies. In comparison, public accounts engaging in fact-checking seem to mainly react to different misinformation while lacking coordination in their rebuttals. Johnson et al. (2020) found that anti-vaccination clusters on Facebook occupied central network positions, whereas pro-vaccination clusters were more peripheral and confined to small patches. Consistently, our study also finds this alarming structural pattern, which suggests that the posts of misinformation spreaders could penetrate more diverse social circles and reach broader audiences.

[4] It is necessary to note that our sample mainly features public accounts belonging to organizations (81.5%). The observed coordination may or may not be applicable to individual accounts.

This network perspective is vital to examining misinformation on social media since misinformation on platforms such as Facebook and Twitter requires those structural conduits in order to permeate through various social groups, while fact checkers also need networks to counter misinformation with their posts (Del Vicario et al., 2016). Thus, contesting for strategic network positions is important because such positions allow social media accounts to bridge different clusters of publics and facilitate the spread of their posts.

Based on this finding, social media platforms might need to purposely break the network connections of misinformation spreaders by banning or removing some of the most central URLs. In addition, fact checkers should better coordinate their sharing behavior, and boost the overall centrality and connectivity of their content by embracing, for instance, the network features of social media, and leveraging the followership of diverse contacts to break insular networks. Fact checkers should go beyond simply reacting to misinformation. Such a reactive, “whac-a-mole” approach may largely explain why fact checkers’ networks lack coordination and central structure. Instead, fact checkers may coordinate their efforts to highlight some of the most important or timely facts proactively. This recommendation extends beyond the COVID-19 context and applies to efforts aimed at combating misinformation in general (e.g., political propaganda and disinformation campaigns).

Findings

Finding 1: The landscape of misinformation and fact-checking posts is very much intertwined.

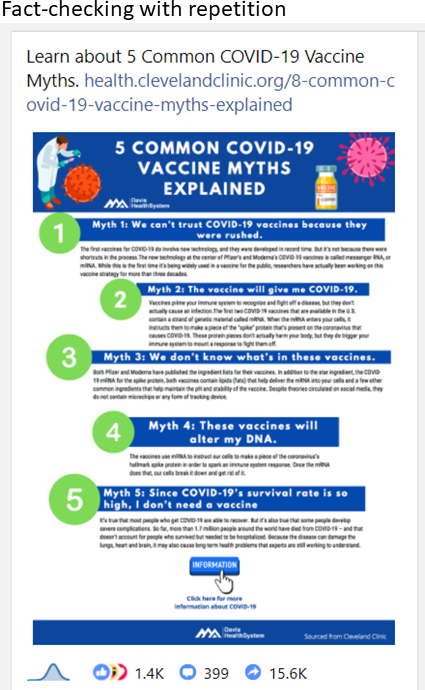

We found that the landscape of vaccine misinformation on Facebook was almost split in half between misinformation spreaders and fact checkers. 46.6% of information sources (N = 707) addressing COVID-19 vaccine misinformation were misinformation spreaders, referring to accounts that distribute false claims about COVID-19 vaccine without correcting them. Another 47.4% were fact checkers, with 28.5% (N = 462) repeating the original misinformation and 18.9% (N = 307) reporting facts without repeating misinformation (see Figure 1 for example posts). An additional 3.5% of accounts had been deleted by the time of data analysis.

In addition, among public accounts that discussed COVID-19 vaccine misinformation, 81.5% were organizational accounts, with nonprofits (25.1%) and media (21.7%) as the most prominent organizations. Media here is broadly defined to include any public account that claims to be news media or to perform news media functions based on its self-generated description. A factorial ANOVA (i.e., an analysis of variance test that includes more than one independent variable, or “factor”) found significant differences among organization types and their attitudes towards misinformation (F(9, 1428) = 29.57, p < .001). Healthcare agencies and government agencies were most likely to be fact checkers without repeating misinformation, whereas anti-vaxxers and the news media were most likely to be misinformation spreaders. Among the 15.9% individual public accounts, the most prominent individuals were journalists (4.1%) and politicians (2.1%).

Finding 2: Different types of posts trigger different engagement responses.

We also found that different information sources’ posts yielded different emotional and behavioral engagement outcomes. Under each Facebook post, the public could respond by clicking on different emojis. The emojis are mutually exclusive, meaning that if a user clicks, for instance, on the sad emoji, they cannot click on another emoji, such as haha. Specifically, we ran an ANOVA test to see if there were significant differences in terms of the public responses to the posts from different sources. Among behavioral responses, we found significant differences in terms of comments (F(3, 732) = 2.863, p = .036). A Tukey post-hoc test (used to assess the significance of differences between pairs of group means) revealed that there was a significant difference (p = .003) between the number of public comments on posts from misinformation spreaders (M = 1.114) and from fact checkers who repeat (M = 1.407). That is, the publics were more likely to comment on posts from fact checkers who repeat misinformation and then correct it. We also found that different types of misinformation posts yielded different public emotional responses. Among emotional responses, there was a significant difference in terms of sadness reaction (F(3, 355) = 3.308, p = .02). A Tukey post-hoc test revealed that there was a significant difference (p = .003) between how people responded with the sad emoji to misinformation spreaders (M = .617) and non-repeater fact checkers (M = .961). Another significant difference (p = .031) was also observed between how people responded to non-repeater fact checkers (M = .961) and fact checkers who repeated misinformation (M = .682). In general, Facebook users were most likely to respond with the sad emoji to non-repeater fact checkers.
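As a rough illustration of the one-way ANOVA logic behind the F statistics above, the following sketch computes F from between-group and within-group sums of squares. The function name `one_way_anova_f` and the toy comment counts are assumptions made for illustration only; they are not the study's data, and the study's own tests compared four groups (hence 3 numerator degrees of freedom) rather than the three used here.

```python
from statistics import mean


def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of numeric samples."""
    all_values = [x for g in groups for x in g]
    grand_mean = mean(all_values)
    # Between-group sum of squares: spread of group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Within-group sum of squares: spread of observations around their own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical engagement scores per post, one list per source type (toy data).
toy_comments = {
    "misinformation_spreader": [1, 2, 3],
    "fact_checker_repeater": [5, 6, 7],
    "fact_checker_non_repeater": [2, 3, 4],
}

f_stat, df_b, df_w = one_way_anova_f(list(toy_comments.values()))
print(f"F({df_b}, {df_w}) = {f_stat:.2f}")
```

A significant F only indicates that at least one group mean differs; a pairwise procedure such as the Tukey test mentioned above is still needed to locate which pairs differ.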

Finding 3: Different types of accounts held different network positions.

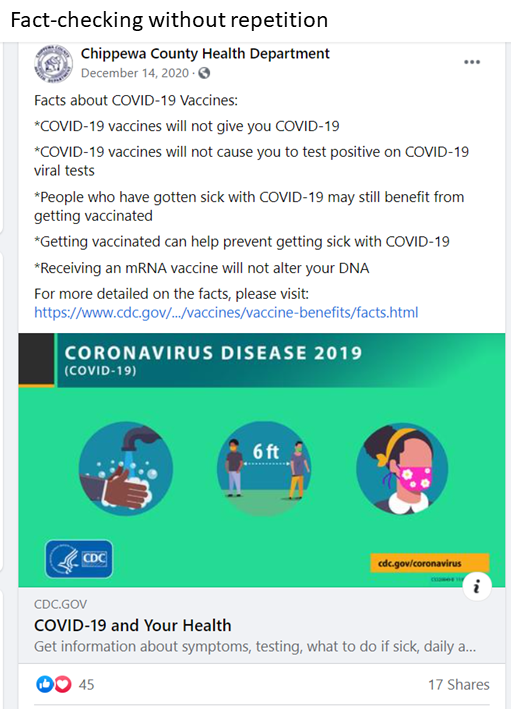

Results showed that different types of accounts held different network positions on Facebook. More specifically, as Figure 2 illustrates, we found that misinformation spreaders (green dots) occupied the most coordinated and centralized positions in the whole network, whereas fact checkers with repetitions (yellow dots) took peripheral positions. Importantly, fact checkers without repetitions (red dots, and many of them are healthcare organizations and government agencies) were mostly talking to themselves, exerting little influence on the overall URL co-sharing network, and conceding important network positions to misinformation spreaders.

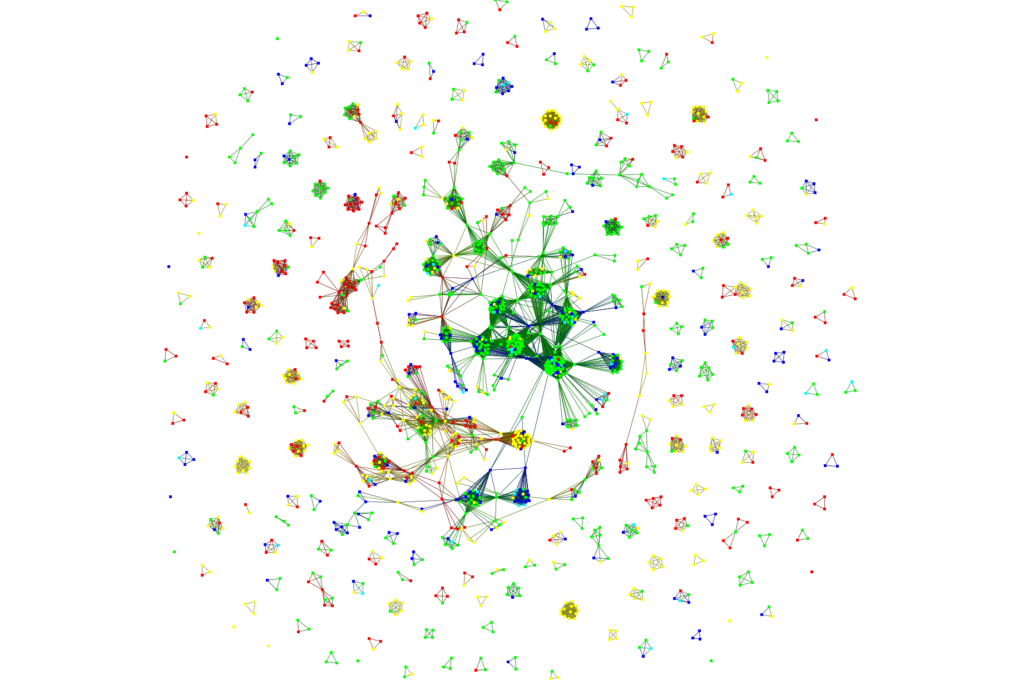

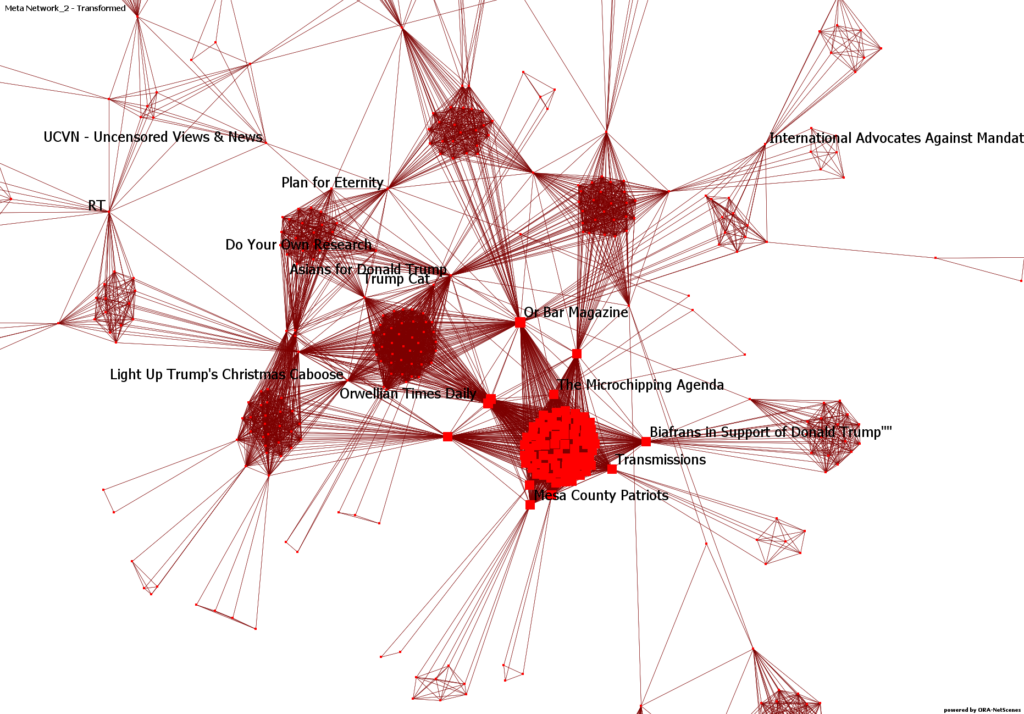

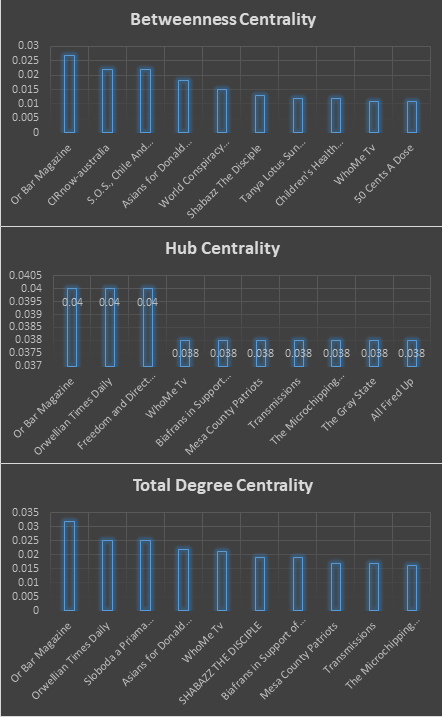

Additionally, Figure 3 visualizes the whole network of accounts that connected multiple misinformation themes. Interestingly, the accounts that enjoyed central positions were fake news accounts that spread conspiracy theories (e.g., “Or Bar Magazine,” “Orwellian Times Daily”), or groups that support Donald Trump (e.g., “Biafrans in Support of Donald Trump,” “Trump Cat,” “Asians for Donald Trump,” “Light up Trump’s Christmas Caboose,” “Mesa County Patriots”). To further examine their network characteristics, we calculated betweenness (i.e., the extent to which an account in the network lies between other accounts), hub centrality (i.e., the extent to which an account is connected to nodes pointing to other nodes), and total degree centralities (i.e., the extent to which an account is interconnected to others) of each account.

In addition, as Figure 4 illustrates, in terms of three different network measures (betweenness centrality, hub centrality, and total degree centrality),[5] the top accounts were fake news accounts, Trump-supporting groups, and anti-vaccine groups (e.g., “Children’s health defense,” “The Microchipping Agenda”).

[5] Betweenness centrality indicates the degree to which accounts bridge from one part of a network to another. Accounts with high hub centralities are connected with many authoritative sources of topic-specific information. Total degree centrality is the total number of connections linked to an account.
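To make two of these measures concrete, here is a minimal sketch that computes total degree and betweenness centrality on a toy undirected network, using Brandes' shortest-path algorithm for betweenness. The account names and the adjacency structure are hypothetical, and the sketch does not reproduce whatever software the study used; hub centrality is omitted for brevity.

```python
from collections import deque


def total_degree(adj):
    """Number of ties attached to each account."""
    return {node: len(neighbors) for node, neighbors in adj.items()}


def betweenness(adj):
    """Unnormalized betweenness for an unweighted, undirected graph (Brandes)."""
    score = {v: 0.0 for v in adj}
    for source in adj:
        order = []                      # nodes in order of discovery
        preds = {v: [] for v in adj}    # shortest-path predecessors
        paths = {v: 0 for v in adj}     # number of shortest paths from source
        dist = {v: -1 for v in adj}
        paths[source], dist[source] = 1, 0
        queue = deque([source])
        while queue:
            v = queue.popleft()
            order.append(v)
            for w in adj[v]:
                if dist[w] < 0:
                    dist[w] = dist[v] + 1
                    queue.append(w)
                if dist[w] == dist[v] + 1:
                    paths[w] += paths[v]
                    preds[w].append(v)
        # Accumulate dependencies from the farthest nodes back to the source.
        delta = {v: 0.0 for v in adj}
        for w in reversed(order):
            for v in preds[w]:
                delta[v] += paths[v] / paths[w] * (1 + delta[w])
            if w != source:
                score[w] += delta[w]
    # Each unordered pair was counted from both endpoints.
    return {v: s / 2 for v, s in score.items()}


# Hypothetical network: one account bridging two otherwise separate clusters.
toy_adj = {
    "spreader_1": {"spreader_2", "bridge"},
    "spreader_2": {"spreader_1", "bridge"},
    "bridge": {"spreader_1", "spreader_2", "checker_1", "checker_2"},
    "checker_1": {"checker_2", "bridge"},
    "checker_2": {"checker_1", "bridge"},
}

print(total_degree(toy_adj)["bridge"])
print(betweenness(toy_adj)["bridge"])
```

In this toy network only the bridging account lies on shortest paths between the two clusters, which is the structural advantage the text attributes to the most central accounts.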

Overall, posts containing misinformation were prevalent on Facebook. Out of all COVID-19 vaccine-related posts extracted in a year (between March 1st, 2020 and March 1st, 2021), 8.97% of the posts were identified to contain misinformation. We note that even though the amount of COVID-19 vaccine misinformation found in this study (8.97%) is concerning, the number is still relatively low compared to what previous studies have found. For instance, according to a systematic review of 69 studies (Suarez-Lledo & Alvarez-Galvez, 2021), the lowest level of health misinformation circulating on social media was 30%. It is possible that this difference is due to the fact that our study focused on public accounts rather than private accounts. Public accounts may care more about their reputation than private accounts. In addition, the heightened attention to popular COVID-19 misinformation during the pandemic may have motivated official sources and fact checkers to fight vaccine misinformation with fact-checking to a greater extent. Finally, it may also be due to targeted efforts made by Facebook to combat vaccine-related misinformation.

Methods

Sample

To identify COVID-19 vaccine-related misinformation, we first reviewed popular COVID-19 vaccine misinformation mentioned in recent articles and by the CDC (Centers for Disease Control and Prevention, 2021; Hotez et al., 2021; Loomba et al., 2021) and identified nine popular themes. We then used keywords associated with these themes to track all unique Facebook posts that contained these keywords over the one-year period of March 1st, 2020 to March 1st, 2021 (between when COVID-19 was first confirmed in the U.S. and when the COVID-19 vaccine became widely available in the country).[6] See Table 3 for a summary of these themes and associated keywords. The tracking was done through Facebook’s internal data archive hosted by CrowdTangle, which hosts over 7 million public accounts’ communication records on public Facebook pages, groups, and verified profiles.[7] Overall, 53,719 unique public accounts mentioned these keywords. Among them, 5,597 unique accounts shared URLs.

[6] Facebook was chosen for two reasons. First, previous studies confirm that there is a substantial volume of misinformation circulating on Facebook (Burki, 2020; World Health Organization, 2020). Second, Facebook’s user base is one of the largest and most diverse among all social media platforms, which makes this platform ideal for studying infodemics.

[7] As an internal service, CrowdTangle has full access to Facebook’s stored historical data on public accounts. Any public accounts that mentioned these keywords in English during the search period have been captured by our data collection. Our sample is thus representative of public accounts that have mentioned the keywords listed in Table 2.
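The Boolean queries listed in the table below combine one required phrase with a set of alternatives. A minimal sketch of that AND/OR logic follows; the helper `matches_query`, the case-insensitive substring matching, and the example posts are all assumptions for illustration, since the source does not describe how CrowdTangle tokenizes or matches text.

```python
def matches_query(post_text, required, any_of):
    """True if the post contains every required phrase and,
    when alternatives are given, at least one of them."""
    text = post_text.lower()
    has_required = all(term.lower() in text for term in required)
    has_alternative = not any_of or any(term.lower() in text for term in any_of)
    return has_required and has_alternative


# Theme 1 from the table: COVID-19 vaccine AND (alter DNA OR DNA modification OR change DNA)
theme_1 = {
    "required": ["COVID-19 vaccine"],
    "any_of": ["alter DNA", "DNA modification", "change DNA"],
}

# Hypothetical posts, written only to exercise the matcher.
example_posts = [
    "Claim: the COVID-19 vaccine will alter DNA. Here is what scientists say.",
    "COVID-19 vaccine rollout begins next week.",
]

for post in example_posts:
    print(matches_query(post, **theme_1), "-", post)
```

Note that a keyword match alone does not distinguish a misinformation post from a debunking post, which is why the accounts were subsequently coded by hand.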

| # | Misinformation themes | Boolean search keywords | Total related posts # | *Total interactions # |
| --- | --- | --- | --- | --- |
| 1 | Vaccines alter DNA | COVID-19 vaccine AND (alter DNA OR DNA modification OR change DNA) | 18,897 | 4,295,053 |
| 2 | Vaccines cause autism | COVID-19 vaccine AND autism | 1,355 | 156,249 |
| 3 | Bill Gates plans to put microchips in people through vaccines | COVID-19 vaccine AND Bill Gates AND microchips | 460 | 121,983 |
| 4 | Vaccines contains baby tissue | COVID-19 vaccine AND (baby tissue OR aborted baby OR aborted fetus) | 9,308 | 3,320,472 |
| 5 | Vaccination is a deep state plan for depopulation | COVID-19 vaccine AND (depopulation OR population control) | 78,732 | 15,111,536 |
| 6 | Vaccines cause infertility | COVID-19 vaccine AND infertility | 1,388 | 212,811 |
| 7 | Big government will force everyone to get vaccinated | COVID-19 vaccine AND (forced vaccination OR forced vaccine) | 19,123 | 4,296,536 |
| 8 | Vaccines kill more people than COVID-19 | COVID-19 vaccine AND (massive death rate OR cover up death OR thousands die) | 71,353 | 24,158,927 |
| 9 | Vaccination is a Big Tech propaganda | COVID-19 vaccine AND (big tech crackdown OR big tech propaganda) | 1,277 | 321,122 |
| 10 | Total COVID-19 related posts and interactions | COVID-19 | 2,250,095 | 447,923,466 |
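The 8.97% share of misinformation-related posts reported in the Findings can be re-derived from the post counts in this table. The short sketch below does the arithmetic, assuming the nine per-theme counts are simply summed and divided by the total in row 10; the variable names are illustrative.

```python
# Post counts per misinformation theme, copied from rows 1-9 of the table.
theme_posts = {
    "alter_dna": 18_897,
    "autism": 1_355,
    "microchips": 460,
    "baby_tissue": 9_308,
    "depopulation": 78_732,
    "infertility": 1_388,
    "forced_vaccination": 19_123,
    "vaccine_deaths": 71_353,
    "big_tech_propaganda": 1_277,
}

# Row 10: all COVID-19 related posts in the same period.
total_covid_posts = 2_250_095

misinformation_posts = sum(theme_posts.values())
share = misinformation_posts / total_covid_posts

print(misinformation_posts)   # 201893
print(f"{share:.2%}")         # 8.97%
```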

Research questions

Our research questions are: 1) Which types of accounts engage in spreading and debunking vaccine misinformation? 2) How do the publics react to different types of accounts in terms of emotional responses and behavioral responses? And 3) Are there any differences among these accounts in occupying positions in the URL-sharing network?

Analytic strategies

To answer these questions, we identified accounts that had shared at least one URL across the nine themes, which yielded 5,597 unique accounts that met the criterion. Next, we manually coded all accounts into one of three categories: 1) misinformation spreaders, referring to accounts that distribute false claims about COVID-19 vaccine without correcting them, 2) fact checkers who debunk false claims while repeating the original false claim, and 3) fact checkers who provide accurate information about COVID-19 vaccine without repeating misinformation. An additional 3.5% of accounts had been deleted by the time of data analysis.

Further, when two accounts shared the same URL, we considered the two accounts as forming a co-sharing tie. We constructed a one-mode network based on co-sharing ties, which formed a sparse network (ties = 28,648, density = .00091) with many isolates or accounts connected to only one other account (known as pendants). Although isolates and pendants also shared URLs, with such low centrality, those URLs were unlikely to be influential. As such, we removed isolates, pendants, and self-loops (accounts sharing the same URL more than one time), which revealed a core network of 1,648 accounts connected by 23,940 ties (density = .00942). This core network was the focus of our analysis. Together, the 1,648 accounts had a total of 245,495,995 followers (Mean = 30,331, SD = 62,853.345).[8]

[8] Previous studies found that the average Facebook user has about 100 followers. Among public accounts, previous studies suggest that the average followership is about 2,106 (SD = 529) (Keller & Kleinen-von Königslöw, 2018). In comparison, it seems that the accounts featured in the current study are elite accounts in terms of followership and therefore are likely to be exceptionally influential in an infodemic.
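The construction described above can be sketched as follows: build account-to-account ties from shared URLs, then drop isolates and pendants to obtain a core network. This is a minimal sketch under stated assumptions: the account and URL names are hypothetical, pendants are removed in a single pass (the source does not say whether pruning was repeated), and density uses the undirected convention 2E / (N(N - 1)), which may differ from the normalization behind the densities reported in the text.

```python
from itertools import combinations


def co_sharing_ties(shares):
    """shares maps account -> iterable of URLs. Returns a set of (a, b) ties."""
    accounts_by_url = {}
    for account, urls in shares.items():
        # set() collapses repeated shares of one URL by the same account (self-loops).
        for url in set(urls):
            accounts_by_url.setdefault(url, set()).add(account)
    ties = set()
    for accounts in accounts_by_url.values():
        ties.update(combinations(sorted(accounts), 2))
    return ties


def density(n_accounts, n_ties):
    """Share of possible undirected ties that are present."""
    if n_accounts < 2:
        return 0.0
    return 2 * n_ties / (n_accounts * (n_accounts - 1))


def core_network(shares):
    """Drop isolates (degree 0) and pendants (degree 1) in one pass."""
    ties = co_sharing_ties(shares)
    degree = {account: 0 for account in shares}
    for a, b in ties:
        degree[a] += 1
        degree[b] += 1
    kept = {account for account, d in degree.items() if d >= 2}
    core_ties = {(a, b) for a, b in ties if a in kept and b in kept}
    return kept, core_ties


# Hypothetical sharing records: account -> URLs it posted.
toy_shares = {
    "acct_a": ["url_1", "url_2", "url_1"],
    "acct_b": ["url_1"],
    "acct_c": ["url_1"],
    "acct_d": ["url_2"],   # pendant: tied only to acct_a
    "acct_e": ["url_3"],   # isolate: shares a URL nobody else shared
}

all_ties = co_sharing_ties(toy_shares)
core_accounts, core_ties = core_network(toy_shares)
print(len(all_ties), density(len(toy_shares), len(all_ties)))
print(sorted(core_accounts), density(len(core_accounts), len(core_ties)))
```

Pruning low-degree accounts raises density because the remaining accounts are, by construction, the ones that are connected to several others.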

To understand how these accounts discuss misinformation and influence the public’s engagement outcomes,[9] we ran ANOVA tests and examined if there were significant differences in how the publics respond to different misinformation posts. To compare these accounts’ network positions, we calculated the accounts’ network measures and also used network visualization to illustrate how the accounts were interconnected via link sharing.

[9] In this study, engagement outcomes are measured by Facebook users’ interactions such as comments, shares, likes, or emotional reactions (i.e., “love,” “wow,” “haha,” “sad,” or “angry”). Such interactions or emotional reactions do not necessarily indicate attitude change.

Bibliography

Brandtzaeg, P. B., & Følstad, A. (2018). Chatbots: changing user needs and motivations. Interactions, 25(5), 38–43. https://doi.org/10.1145/3236669

Budak, C., Agrawal, D., & El Abbadi, A. (2011, March 28). Limiting the spread of misinformation in social networks. Proceedings of the 20th International Conference on World Wide Web (WWW ’11), 665–674. https://doi.org/10.1145/1963405.1963499

Burki, T. (2020). The online anti-vaccine movement in the age of COVID-19. The Lancet Digital Health, 2(10), e504–e505. https://doi.org/10.1016/S2589-7500(20)30227-2

Centers for Disease Control and Prevention. (2021). Myths and facts about COVID-19 vaccines. https://www.cdc.gov/coronavirus/2019-ncov/vaccines/facts.html

Del Vicario, M., Bessi, A., Zollo, F., Petroni, F., Scala, A., Caldarelli, G., Stanley, H. E., & Quattrociocchi, W. (2016). The spreading of misinformation online. Proceedings of the National Academy of Sciences of the United States of America, 113(3), 554–559. https://doi.org/10.1073/pnas.1517441113

Ecker, U. K., Lewandowsky, S., & Chadwick, M. (2020). Can corrections spread misinformation to new audiences? Testing for the elusive familiarity backfire effect. Cognitive Research: Principles and Implications, 5(1), 1–25. https://doi.org/10.1186/s41235-020-00241-6

Evanega, S., Lynas, M., Adams, J., & Smolenyak, K. (2020). Coronavirus misinformation: Quantifying sources and themes in the COVID-19 ‘infodemic’. The Cornell Alliance for Science, Department of Global Development, Cornell University. https://allianceforscience.cornell.edu/wp-content/uploads/2020/10/Evanega-et-al-Coronavirus-misinformation-submitted_07_23_20-1.pdf

Hotez, P. J., Cooney, R. E., Benjamin, R. M., Brewer, N. T., Buttenheim, A. M., Callaghan, T., Caplan, A., Carpiano, R. M., Clinton, C., DiResta, R., Elharake, J. A., Flowers, L. C., Galvani, A. P., Lakshmanan, R., Maldonado, Y. A., McFadden, S. M., Mello, M. M., Opel, D. J., Reiss, D. R., … Omer, S. B. (2021). Announcing the Lancet commission on vaccine refusal, acceptance, and demand in the USA. The Lancet, 397(10280), 1165–1167. https://doi.org/10.1016/S0140-6736(21)00372-X

Johnson, N. F., Velásquez, N., Restrepo, N. J., Leahy, R., Gabriel, N., El Oud, S., Zheng, M., Manrique, P., Wuchty, S., & Lupu, Y. (2020). The online competition between pro- and anti-vaccination views. Nature, 582(7811), 230–233. https://doi.org/10.1038/s41586-020-2281-1

Keller, T. R., & Kleinen-von Königslöw, K. (2018). Followers, spread the message! Predicting the success of Swiss politicians on Facebook and Twitter. Social Media + Society, 4(1), 2056305118765733. https://doi.org/10.1177/2056305118765733

Loomba, S., de Figueiredo, A., Piatek, S. J., de Graaf, K., & Larson, H. J. (2021). Measuring the impact of COVID-19 vaccine misinformation on vaccination intent in the UK and USA. Nature Human Behaviour, 5(3), 337–348. https://doi.org/10.1038/s41562-021-01056-1

Porter, S., Bellhouse, S., McDougall, A., ten Brinke, L., & Wilson, K. (2010). A prospective investigation of the vulnerability of memory for positive and negative emotional scenes to the misinformation effect. Canadian Journal of Behavioural Science / Revue canadienne des sciences du comportement, 42(1), 55–61. https://doi.org/10.1037/a0016652

Scheufele, D. A., & Krause, N. M. (2019). Science audiences, misinformation, and fake news. Proceedings of the National Academy of Sciences, 116(16), 7662–7669. https://doi.org/10.1073/pnas.1805871115

Shin, J., & Thorson, K. (2017). Partisan selective sharing: The biased diffusion of fact-checking messages on social media. Journal of Communication, 67(2), 233–255. https://doi.org/10.1111/jcom.12284

Shin, J., & Valente, T. (2020). Algorithms and health misinformation: A case study of vaccine books on amazon. Journal of Health Communication, 25(5), 394–401. https://doi.org/10.1080/10810730.2020.1776423

Song, M. Y. J., & Gruzd, A. (2017, July). Examining sentiments and popularity of pro- and anti-vaccination videos on YouTube. Proceedings of the 8th International Conference on Social Media & Society, 1–8. https://doi.org/10.1145/3097286.3097303

Swire-Thompson, B., DeGutis, J., & Lazer, D. (2020). Searching for the backfire effect: Measurement and design considerations. Journal of Applied Research in Memory and Cognition, 9(3), 286–299. https://doi.org/10.1016/j.jarmac.2020.06.006

Suarez-Lledo, V., & Alvarez-Galvez, J. (2021). Prevalence of health misinformation on social media: Systematic review. Journal of Medical Internet Research, 23(1), e17187. https://doi.org/10.2196/17187

Wilson, S. L., & Wiysonge, C. (2020). Social media and vaccine hesitancy. BMJ Global Health, 5(10), e004206. https://doi.org/10.1136/bmjgh-2020-004206

World Health Organization (2020). Managing the COVID-19 infodemic: Promoting healthy behaviours and mitigating the harm from misinformation and disinformation. https://www.who.int/news/item/23-09-2020-managing-the-covid-19-infodemic-promoting-healthy-behaviours-and-mitigating-the-harm-from-misinformation-and-disinformation

Vosoughi, S., Roy, D., & Aral, S. (2018). The spread of true and false news online. Science, 359(6380), 1146–1151. https://doi.org/10.1126/science.aap9559

Funding

There is no funding to support this research. The data access is supported by CrowdTangle, which is affiliated with Facebook.

Competing Interests

There is no potential conflict of interest.

Ethics

The research protocol was approved by an institutional review board.

Copyright

This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided that the original author and source are properly credited.

Data Availability

Given Facebook’s data sharing policy, we believe that the most ethical and legal way to facilitate future replication of our study is to share our research keywords with other researchers and then they can download the data directly from CrowdTangle. Table 2 in the article lists all the misinformation themes that we monitored for the current study. Using the same Boolean search keywords, others could obtain exactly the same data. Other researchers can apply for CrowdTangle access and then obtain data with CrowdTangle’s consent. Below is the link to apply for access to CrowdTangle: https://legal.tapprd.thefacebook.com/tapprd/Portal/ShowWorkFlow/AnonymousEmbed/68eda334-b9f1-4f28-8c6d-9c1e0ecc3f91