Abstract

Our aim was to develop practical models built with simple clinical and radiological features to help diagnose coronavirus disease 2019 (COVID-19) in a real-life emergency cohort. To do so, 513 consecutive adult patients suspected of having COVID-19 from 15 emergency departments from 2020-03-13 to 2020-04-14 were included as long as chest CT scans and real-time polymerase chain reaction (RT-PCR) results were available (244 [47.6%] with a positive RT-PCR). Immediately after their acquisition, the chest CTs were prospectively interpreted by on-call teleradiologists (OCTRs) and systematically reviewed within one week by another senior teleradiologist. Each OCTR reading was concluded using a 5-point scale: normal, non-infectious, infectious non-COVID-19, indeterminate and highly suspicious of COVID-19. The senior reading reported the lesions’ semiology, distribution, extent and differential diagnoses. After pre-filtering clinical and radiological features through univariate chi-squared, Fisher or Student t-tests (as appropriate), multivariate stepwise logistic regression (Step-LR) and classification tree (CART) models to predict a positive RT-PCR were trained on 412 patients, validated on an independent cohort of 101 patients and compared with the OCTR performances (295 and 71 with available clinical data, respectively) through area under the receiver operating characteristics curves (AUC). Regarding models built on radiological variables alone, the best performance was reached with the CART model (i.e., AUC = 0.92 [versus 0.88 for OCTR], sensitivity = 0.77, specificity = 0.94), while Step-LR provided the highest AUC with clinical-radiological variables (AUC = 0.93 [versus 0.86 for OCTR], sensitivity = 0.82, specificity = 0.91). Hence, these two simple models, depending on the availability of clinical data, performed well in predicting a positive RT-PCR and could be used by any radiologist to support, modulate and communicate their conclusion in case of COVID-19 suspicion. Practically, using clinical and radiological variables (GGO, fever, presence of fibrotic bands, presence of diffuse lesions, predominant peripheral distribution) can accurately predict RT-PCR status.

Introduction

Coronavirus disease 2019 (COVID-19) is a viral disease caused by severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2). It was identified in Wuhan, China, in December 2019 and has rapidly spread worldwide1. As of February 13th, 2021, approximately 108 million patients have been reported worldwide, including 3,390,952 patients in France, with 80,404 deaths2. Real-time reverse transcription polymerase chain reaction (RT-PCR) is the gold standard for the acute diagnosis of SARS-CoV-2 in upper and lower respiratory specimens, despite possible inaccurate results (false negative and false positive)3,4,5,6. However, many hospitals have limited or delayed access to molecular testing. Conversely, chest CT is routinely performed at most institutions and can provide a result, or at least a diagnostic orientation, in less than an hour, which could help in patients’ triage.

Previous studies have highlighted typical COVID-19 patterns consisting of peripheral, multifocal ground-glass opacities (GGO), with a sensitivity of 60–98%3,5,7,8. Relying on these radiological features, the French Society of Radiology (SFR) has published educational webinars and a standardized report including a four-point scale to categorize the risk of COVID-19 on chest CT, namely: highly suspicious, compatible/indeterminate, not suspicious and normal (https://ebulletin.radiologie.fr/comptes-rendus-covid-19). Similar initiatives have been proposed by other radiological societies, such as the COVID-19 Reporting and Data System (CO-RADS) for the Dutch radiological society9,10,11. Overall, the specificity and sensitivity of these semi-quantitative scoring systems ranged from 0.45 (in asymptomatic patients) to 0.92, and from 0.66 to 0.94, respectively, when including the compatible/indeterminate category9,10,11,12,13,14.

In parallel, predictive models have been issued to facilitate and even automate the diagnosis of COVID-19 on chest CT with good performances and in an objective manner. Indeed, regarding deep-learning models, diagnostic performances (estimated with area under the receiver operating characteristics curves [AUC]) ranged from 0.70 to 0.95 in retrospective testing cohorts15,16,17,18,19,20. When detailed, sensitivity and specificity were 0.84–1 and 0.25–0.96, respectively. However, their implementation in practice requires either time or mathematical and computer sciences skills or graphics processing units. Alternatively, machine-learning models built on combinations of clinical, biological, radiological and even radiomics features have been developed21,22. Hence, Qin et al. have proposed a scoring system based on biological, clinical and radiological data with high performance (AUC = 0.91, sensitivity = 0.88, specificity = 0.92 in the independent validation cohort), but the added value to classical radiological assessment was not detailed22. Furthermore, some information required to run the model, notably a history of exposure or the leukocyte count, may not be systematically known by radiologists.

IMADIS Teleradiology is a French company dedicated to remote interpretation of emergency CT and MRI examinations. As of March 2020, IMADIS Teleradiology had partnerships with the emergency and radiological departments of 69 hospitals covering all French regions. During the coronavirus crisis, IMADIS has been widely involved in the diagnosis of COVID patients by remote interpretations of chest CT scans from partner centres. In order to rapidly homogenize the teleradiological managements of patients with a COVID-19 suspicion within our structure, a standardized and dedicated workflow and educational webinars were thus specifically developed, including the semi-quantitative scoring system derived from the SFR guidelines. Furthermore, through a systematic second reading of the teleradiological reports, the radiological semiology of each chest CT acquired in the workflow was collected.

Therefore, the first aim of our study was to develop and validate practical and simple classification models that could be used by any radiologist in any institution, without any mathematical background, based on easily available clinical and radiological features. Hence, in addition to semi-quantitative and subjective scoring systems, our models could provide a probability for a positive RT-PCR that could help modulate the traditional radiological assessment, improve the communication of the results to physicians and guide a possible complementary diagnostic strategy in case of a first negative swab. The second aim was to correlate the results of our models with those of the standardized conclusions of the IMADIS teleradiologists as given in a real-life emergency setting.

Materials and methods

Study design

This observational multicentric study was approved by the French Ethics Committee for the Research in Medical Imaging (CERIM) review board (IRB CRM-2007-107) according to good clinical practices and applicable laws and regulations. While the clinical and radiological data were prospectively collected, the study was designed retrospectively. Consequently, the written informed consent was waived due to the retrospective nature of the analysis. All data were anonymized before any analysis. All methods were performed in accordance with the relevant guidelines and regulations.

IMADIS Teleradiology is a medical company providing remote interpretation of emergency imaging examinations in dedicated on-call centres. As of March 2020, IMADIS Teleradiology had partnerships with the emergency and radiological departments of 69 hospitals covering all French regions.

Our study included all consecutive adult patients from 2020-03-13 to 2020-04-14 from 15/69 (21.7%) partner hospitals that regularly provided the RT-PCR results to IMADIS, as long as these patients fulfilled the following inclusion criteria: age above 18 years, need for chest CT due to suspicion of COVID-19 according to a board-certified emergency physician, available chest CT, and available RT-PCR status (Fig. 1, Supplemental Data S1). We did not exclude patients because of their medical history.

Chest CT acquisition

Chest CT examinations were performed by using 16- or 64-detector row CT scanners with a standardized non-contrast-enhanced COVID-19 chest CT protocol for all hospitals. Depending on the emergency centers, the slice thickness ranged from 1 to 1.25 mm for the lung kernel, and from 2 to 2.5 mm for the mediastinal kernel. If pulmonary embolism was suspected, a CT pulmonary angiographic protocol with bolus tracking and intravenous iodine contrast agent administration at a rate of 3–4 mL/s was used instead (Omnipaque 350, GE Healthcare, Princeton, New Jersey; Iomeron 400, Bracco Diagnostics, Milan, Italy; and Ultravist 370, Bayer Healthcare, Berlin, Germany). In case of respiratory artifacts precluding the teleradiological interpretation, the acquisition was repeated, possibly in ventral decubitus.

Teleradiological interpretation protocol

The panel of IMADIS teleradiologists consisted of 109 senior radiologists with at least 5 years of emergency imaging experience (mean length of practice, 7 years) and 55 junior radiologists (i.e., residents) with 3–5 years of emergency imaging experience (mean length of practice, 4 years). Teleradiologists were on-call in groups of at least two teleradiologists per night in each of the two interpretation centres (Bordeaux and Lyon, France). All radiological reports involving COVID-19 made by junior teleradiologists were validated by a senior teleradiologist working in the same interpretation center.

The IMADIS teleradiology interpretation protocol met the French recommendations for teleradiology practice23. The reports and requests with clinical data (filled by emergency physicians) for COVID-19 Chest CT image interpretation were sent from the client hospitals to the IMADIS Teleradiology interpretation centres by using teleradiology software (ITIS; Deeplink Medical, Lyon, France). The images were securely transferred over a virtual private network to a local picture archiving and communication system for interpretation available on each teleradiological workstation from the two interpretation centers (Carestream Health 12, Rochester, NY). Images were immediately interpreted by on-call teleradiologists (OCTRs) and the interpretation was subsequently transmitted to the emergency physician without any delay.

CT examinations were systematically reviewed within one week following each on-call session by another senior teleradiologist (n = 15; mean length of practice, 12.1 years; mean number of reviews, 34 CTs) who was not involved during the on-call duty period, blinded to the RT-PCR result and the first reader’s report. All senior radiologists had a 2-h-long e-learning session on CT-Chest findings in COVID-19, which became publicly available on April 7 (Web-based e-learning, developed by IMADIS Radiologists, Deeplink Medical, Lyon, France and RiseUp, Paris, France: https://covid19-formation.riseup.ai/) in addition to the educational webinars (https://ebulletin.radiologie.fr/cas-cliniques-covid-19) and guidelines (https://ebulletin.radiologie.fr/covid19?field_theme_covid_19_tid=613) provided by the SFR.

Clinical data

Clinical information was provided by emergency physicians and collected through the teleradiology software in a standardized COVID-19 CT request form (ITIS; Deeplink Medical, Lyon, France), as follows: age; gender; active smoking; medical history, recent anti-inflammatory drugs intake; delay since onset of symptoms (categorized as: < 1 week, 1–2 weeks, ≥ 2 weeks); oxygen saturation (categorized as: ≥ 95%, 90–95% and < 90%); dyspnoea; fever (≥ 38 °C); cough; asthenia; headache; and ear, nose and throat symptoms.

The RT-PCRs were all performed on throat swab samples taken at the time of the emergency room visit. Their results were retrospectively collected from the patients’ electronic medical records from each partner hospital.

Radiological data

At the end of the report, the OCTR had to propose a conclusion adapted from the SFR classification (https://ebulletin.radiologie.fr/actualit%C3%A9s-covid-19/compte-rendu-tdm-thoracique-iv-0), as follows: (1) normal, (2) abnormalities inconsistent with pulmonary infection; (3) abnormalities consistent with a non-COVID-19 infection; (4) indeterminate/compatible abnormalities; and (5) findings strongly suspicious of COVID-19.

The second reading assessed the following radiological features: (a) underlying pulmonary disease (categorized as: emphysema, lung cancer, interstitial lung disease, pleural lesions, or bronchiectasis); (b) GGO pattern (categorized as: rounded or non-rounded GGO); (c) consolidation pattern (categorized as: rounded or non-rounded consolidations and fibrotic bands [defined as thick, dense bands generally extending from a visceral pleural surface and possibly responsible for architectural distortions]); (d) predominant pattern (categorized as: GGO or consolidation); (e) distribution pattern of lesions (categorized as: peripheral predominant [defined as located within 3 cm of a costal pleural surface], central predominant, or mixed); (f) bilateral lesions; (g) diffuse lesions (i.e., five lobes involved); (h) basal predominant lesions; (i) pleural effusion (categorized as: uni- or bilateral); (j) adenomegaly (defined as lymph node with short axis > 10 mm); (k) bronchial wall thickening (defined as a bronchial wall thickness exceeding approximately 33% of the internal bronchial luminal diameter, and further categorized as lobar/segmental or diffuse); (l) airway secretions; (m) tree-in-bud micronodules; and (n) pulmonary embolism. Images for each radiological feature can be found in Supplemental Data S2.

Statistical analysis

Statistical analyses were performed using R (version 3.5.3, R Foundation for Statistical Computing, Vienna, Austria). All tests were two-tailed. A p-value of less than 0.05 was deemed significant.

Univariate associations between clinical and radiological categorical variables and RT-PCR status were evaluated with Pearson Χ2 or Fisher exact tests, except for age, which was compared between the two groups with Student’s t-test (after assessing the normality of this numeric variable through Shapiro–Wilk test). Correlations between variables were evaluated with Spearman’s test in order to identify possibly redundant variables. For each significantly correlated pair of dummy variables extracted from the same initial multilevel categorical variable, the variable with the lowest p-value at univariate analysis was selected for the multivariable modelling.

Next, the study population was randomly partitioned into a training cohort (n = 412/513, ≈ 80%) and a validation cohort (n = 101/513, ≈ 20%), with the same prevalence of RT-PCR positivity (i.e. 196/412 [47.6%] and 48/101 [47.5%], respectively). We focused on two simple classifiers that do not require any computing interface to extract the probability for a positive RT-PCR, namely: classification and regression tree (CART, “rpart” R package) and stepwise backward-forward binary logistic regression (Step-LR, minimizing the Akaike information criterion; “MASS” R package). The models were built on the training cohort based on (i) either all dichotomized radiological variables or (ii) all dichotomized clinical + radiological variables, restricted in both cases to variables with a p-value < 0.05 at univariable analysis. The CART algorithm has one hyperparameter (i.e., a parameter that is set before the model building, while classical parameters are derived during the model building), named ‘complexity’, which controls the size of the tree and was selected through a cross-validation step in the training cohort so as to minimize the classification error rate. Next, the tree was pruned according to this optimal complexity hyperparameter. The minimal number of observations in the terminal node and the splitting criterion were set to 3 and the Gini index, respectively.
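As a rough illustration of the Gini splitting criterion mentioned above, the following Python sketch (not the authors' R code) computes the Gini impurity of the training-cohort root node, i.e. 196 positive and 216 negative RT-PCR results, and the impurity decrease produced by a candidate binary split. The split counts used in the example are hypothetical and chosen only so that they add up to the root node; the function names are likewise illustrative.

```python
def gini(n_pos, n_neg):
    """Gini impurity of a node holding n_pos positive and n_neg negative cases."""
    n = n_pos + n_neg
    if n == 0:
        return 0.0
    p = n_pos / n
    # For two classes, 1 - p^2 - (1 - p)^2 simplifies to 2 * p * (1 - p).
    return 2.0 * p * (1.0 - p)


def impurity_decrease(parent, left, right):
    """Decrease in Gini impurity when `parent` is split into `left` and `right`.

    Each argument is a (n_pos, n_neg) tuple.
    """
    n = sum(parent)
    weighted_children = (sum(left) / n) * gini(*left) + (sum(right) / n) * gini(*right)
    return gini(*parent) - weighted_children


# Root node of the training cohort as reported in the text: 196 RT-PCR+ out of 412.
root = (196, 216)

# HYPOTHETICAL split on one dichotomized feature (counts are not from the study).
feature_present = (160, 40)
feature_absent = (36, 176)

root_impurity = gini(*root)
gain = impurity_decrease(root, feature_present, feature_absent)
```

A tree-growing algorithm of this kind would evaluate such a decrease for every candidate feature and keep the split with the largest value; pruning by the complexity hyperparameter then removes splits whose gain is too small.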

The performances of the CART-based and Step-LR-based models were evaluated with AUC, i.e. by plotting the true positive rate (sensitivity) against the false positive rate (1 − specificity) at various threshold settings and calculating the area under the curve. AUC was used to compare the models between themselves and to the prospective conclusions made by the OCTRs on the validation cohort. Accuracy (number of correctly classified patients divided by the total number of patients), sensitivity, specificity, negative predictive value (NPV) and positive predictive value (PPV) were estimated after dichotomizing predicted probabilities at a cut-off of 0.5. All results were given with a 95% confidence interval (95%CI). AUCs were compared using the pairwise DeLong test (‘pROC’ R package).
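The metrics described above can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' R implementation: the function names and the toy data are hypothetical, and treating a probability equal to the cut-off as positive is an assumption. The AUC is computed through its rank interpretation, namely the probability that a randomly chosen positive patient receives a higher predicted probability than a randomly chosen negative one.

```python
def confusion_counts(y_true, probs, cutoff=0.5):
    """Return (tp, fp, tn, fn) after dichotomizing probabilities at `cutoff`.

    Assumption: a probability equal to the cut-off is classified as positive.
    """
    tp = fp = tn = fn = 0
    for y, p in zip(y_true, probs):
        predicted = 1 if p >= cutoff else 0
        if predicted == 1 and y == 1:
            tp += 1
        elif predicted == 1 and y == 0:
            fp += 1
        elif predicted == 0 and y == 0:
            tn += 1
        else:
            fn += 1
    return tp, fp, tn, fn


def summary_metrics(tp, fp, tn, fn):
    """Accuracy, sensitivity, specificity, PPV and NPV from confusion counts."""
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "ppv": tp / (tp + fp),
        "npv": tn / (tn + fn),
    }


def auc(y_true, probs):
    """AUC as the fraction of positive/negative pairs ranked correctly (ties count 0.5)."""
    pos = [p for y, p in zip(y_true, probs) if y == 1]
    neg = [p for y, p in zip(y_true, probs) if y == 0]
    score = 0.0
    for a in pos:
        for b in neg:
            if a > b:
                score += 1.0
            elif a == b:
                score += 0.5
    return score / (len(pos) * len(neg))


# Toy data for illustration only (1 = RT-PCR positive, 0 = RT-PCR negative).
toy_truth = [1, 1, 1, 0, 0, 0]
toy_probs = [0.9, 0.7, 0.4, 0.6, 0.2, 0.1]

toy_counts = confusion_counts(toy_truth, toy_probs)
toy_metrics = summary_metrics(*toy_counts)
toy_auc = auc(toy_truth, toy_probs)
```

Unlike the threshold-dependent metrics, the AUC does not depend on the 0.5 cut-off, which is why it is the quantity used to compare models with the OCTRs' ordinal conclusions.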

Finally, we applied a decision curve analysis (DCA) to assess the clinical usefulness of the final models in the validation cohort. DCA consists of plotting the net benefit of applying the model for clinically reasonable risk thresholds compared with two alternative strategies: (i) to treat all patients as affected by COVID-19 or (ii) to treat none of the patients24. Herein, the net benefit of our models refers to the correct identification of patients with a positive or a negative RT-PCR, and the risk threshold can be seen as the harm-to-benefit ratio or the risk at which patients are indifferent about COVID-1925. Hence, a low risk threshold would correspond to patients who are particularly worried about the disease26.
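For readers unfamiliar with DCA, the net benefit at a given risk threshold is commonly defined as the proportion of true positives minus the proportion of false positives weighted by the odds of the threshold. The Python sketch below applies this standard definition; it is an illustration and not the authors' code, and the function names are hypothetical. Only the validation-cohort prevalence (48 positive RT-PCR results out of 101 patients) is taken from the text.

```python
def net_benefit(tp, fp, n, threshold):
    """Net benefit = TP/n - (FP/n) * threshold / (1 - threshold)."""
    if not 0.0 < threshold < 1.0:
        raise ValueError("threshold must lie strictly between 0 and 1")
    odds = threshold / (1.0 - threshold)
    return tp / n - (fp / n) * odds


def net_benefit_treat_all(n_pos, n, threshold):
    """'Treat all' strategy: every positive is a TP and every negative is an FP."""
    return net_benefit(n_pos, n - n_pos, n, threshold)


def net_benefit_treat_none():
    """'Treat none' strategy: no true positives and no false positives."""
    return 0.0


# Validation cohort as reported in the text: 48 RT-PCR+ among 101 patients.
N_POS, N_TOTAL = 48, 101

# Net benefit of the 'treat all' strategy over a grid of risk thresholds.
treat_all_curve = {
    t / 100: net_benefit_treat_all(N_POS, N_TOTAL, t / 100) for t in range(5, 100, 5)
}
```

With this definition, the 'treat all' curve crosses zero when the threshold equals the prevalence, so a model is only worth using where its own net benefit lies above both the 'treat all' curve and the zero line of 'treat none'.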

Results

Study population

Table 1 summarizes the descriptive features of the study population. Overall, 513 patients were included, with a median age of 68.4 years (range 18–100) and 241/513 women (47%). Ninety-nine of the 513 patients (19.3%) had a pre-existing chronic lung disease on chest CT. The prevalence of RT-PCR positivity was 244/513 (47.6%).

Univariate analysis

The following clinical variables were associated with RT-PCR positivity (RT-PCR +): delay since onset of symptoms ≥ 1 week, oxygen saturation < 95%, oxygen saturation < 90%, presence of fever, cough, asthenia and myalgia (p = 0.04, 0.03, 0.005, < 0.001, 0.02, 0.001 and 0.008, respectively) (Table 2). In contrast, dyspnoea, headache and ear, nose and throat symptoms were not associated with the RT-PCR status (p = 0.16, 0.5 and 0.6, respectively).

The following radiological variables were positively associated with RT-PCR +: presence of GGO, non-rounded GGO, rounded GGO, presence of consolidation, non-rounded consolidation, fibrotic bands, intralobular reticulations, fibrosis, GGO predominant pattern, peripheral predominant location, bilateral lesions, diffuse lesions, basal predominant lesions, and low, moderate and high extent of abnormalities (all p-values < 0.001) (Table 3). The following radiological variables were negatively correlated with RT-PCR + : consolidation predominant pattern, central predominant location, mixed predominant location, airway secretion, bronchial wall thickening, either lobar/segmental or diffuse, and tree-in-bud micronodules (p = 0.02, 0.001, 0.002, < 0.001, < 0.001, < 0.001, < 0.001, < 0.001, respectively). On the contrary, the presence of rounded consolidation, pleural effusion and adenomegaly did not correlate with the RT-PCR status (p = 0.6, 0.2 and 0.3, respectively).

Multivariate models

The correlation matrix of the relevant dichotomized variables is shown in Fig. 2. Analysis of the correlations between similar explanatory variables enabled the selection of ‘presence of GGO’ (over ‘rounded GGO’ and ‘non-rounded GGO’), ‘peripheral predominant location’ (over ‘central predominant location’ and ‘mixed predominant location’), ‘fibrotic band consolidation’ (over ‘non-rounded consolidation’ and ‘presence of consolidation’), ‘moderate to severe extension’ (over ‘low to severe extension’ and ‘severe extension’), and ‘bronchial wall thickening’ (over ‘diffuse bronchial wall thickening’ and ‘focal/segmental bronchial wall thickening’). Thus, the total numbers of variables ultimately entered in the multivariate radiological and clinical-radiological models were set to 13 and 19 dichotomized variables, respectively.

Figure 2. Correlation matrix of the 24 dichotomized radiological variables with a significant association with the RT-PCR status at univariate analysis. The colour-coding only corresponds to significant correlation (p < 0.05 according to Spearman test), from red (positive correlations) to blue (negative correlations). GGO ground-glass opacity.

Figure 3 shows the final decision trees. Regarding the best tree relying on radiological variables, six nodes corresponding to six questions were used. Fewer nodes were needed with the clinical-radiological final tree model (i.e. five nodes). Hence, the probability for positive RT-PCR ranged from 0.07 to 0.89, and from 0.06 to 0.93, respectively.

Regarding the Step-LR models, Table 4 shows the matrices enabling the calculation of the probability for RT-PCR + depending on radiological and clinical-radiological variables. These models also make it possible to identify independent predictors for RT-PCR +, namely: fever, fibrotic bands, a GGO predominant pattern, a peripheral predominant distribution, diffuse lesions, intralobular reticulations and absence of bronchial wall thickening (p-values ranging from < 0.001 to 0.03).
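To make concrete how a logistic regression turns a set of yes/no findings into a probability, the following Python sketch applies the logistic function to a linear combination of dichotomized predictors. The predictor names follow the independent predictors listed above, but the intercept and coefficients are HYPOTHETICAL placeholders chosen only to show the mechanics: they are not the values of Table 4 and must not be used clinically.

```python
import math

# HYPOTHETICAL values for illustration only; NOT the coefficients of Table 4.
HYPOTHETICAL_INTERCEPT = -2.0
HYPOTHETICAL_COEFFICIENTS = {
    "fever": 1.0,
    "fibrotic_bands": 1.0,
    "ggo_predominant_pattern": 1.0,
    "peripheral_predominant_distribution": 1.0,
    "diffuse_lesions": 1.0,
    "intralobular_reticulations": 1.0,
    "bronchial_wall_thickening": -1.0,  # its absence favours RT-PCR+
}


def probability_positive(findings,
                         intercept=HYPOTHETICAL_INTERCEPT,
                         coefficients=HYPOTHETICAL_COEFFICIENTS):
    """Logistic model: p = 1 / (1 + exp(-(intercept + sum(coef_i * x_i)))).

    `findings` maps predictor names to True/False; missing names count as absent.
    """
    linear_score = intercept
    for name, coefficient in coefficients.items():
        if findings.get(name, False):
            linear_score += coefficient
    return 1.0 / (1.0 + math.exp(-linear_score))


# Example patients (hypothetical).
no_findings = probability_positive({})
fever_and_peripheral = probability_positive(
    {"fever": True, "peripheral_predominant_distribution": True}
)
thickening_only = probability_positive({"bronchial_wall_thickening": True})
```

Because each predictor is binary, the whole calculation reduces to adding the coefficients of the findings that are present and applying the logistic function once, which is why such a model can be distributed as a simple lookup matrix or spreadsheet.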

Performances of the models and conclusions by on-call teleradiologists

To evaluate the performances of the models, trained models were tested on the external validation cohort. Table 5 shows the results while results in the training cohort are given in Supplemental Data S3.

Regarding radiological models, the highest performances were reached with the CART model (AUCvalidation = 0.91, 95%CI = [0.86–0.98] and accuracyvalidation = 0.86, 95%CI = [0.78–0.92], versus AUCvalidation = 0.90, 95%CI = [0.84–0.96] and accuracyvalidation = 0.83, 95%CI = [0.74–0.90] in Step-LR model).

Regarding clinical-radiological models, the highest performances were reached with the Step-LR model (AUCvalidation = 0.93, 95%CI = [0.87–0.98] and accuracyvalidation = 0.86, 95%CI = [0.76–0.93], versus AUCvalidation = 0.90, 95%CI = [0.83–0.97] and accuracyvalidation = 0.83, 95%CI = [0.72–0.91] in CART model).

Regarding teleradiologists in real-life setting, in the whole study population and in the validation subcohort, the AUCs of the OCTRs’ conclusions (sub-categorized as (1–2–3) or (4) or (5)) were 0.89 (95%CI = [0.86–0.92]) and 0.88 (95%CI = [0.72–0.88]), respectively.

The ROC curves in the validation cohort are displayed in Fig. 4. None of the validation AUCs were significantly different (lowest p-value = 0.07 regarding the comparisons between the Step-LR clinical-radiological model and the OCTRs’ conclusions).

Usefulness of the best model in case of indeterminate teleradiological conclusions

In the subgroup of patients with an indeterminate conclusion (4), the probabilities for RT-PCR + with the clinical-radiological Step-LR model were significantly higher for patients with RT-PCR + than for patients with RT-PCR− (0.63 ± 0.28 versus 0.39 ± 0.27, respectively, p = 0.004). Figure 5 illustrates the potential application of this model for patients with an indeterminate/compatible conclusion. An Excel macro is provided in Supplementary Data 4 so that the interested reader can test the Step-LR models.

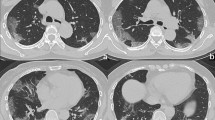

Figure 5. Added value of the final clinical-radiological model for patients with indeterminate/compatible radiological conclusions. (a) A 50-year-old woman presented to the emergency department with chest pain, fever, and cough for less than one week. Chest CT showed a basal-predominant peripheral ground-glass opacity (GGO, white arrow) in the lower right lobe. The probability for RT-PCR + was 68.9%. (b) A 71-year-old woman presented to the emergency department with cough, dyspnoea, fever and asthenia for 1–2 weeks. Chest CT showed bilateral peripheral fibrotic bands (white arrowheads) with a peripheral right GGO (white arrow). The probability for RT-PCR + was 65%. (c) A 61-year-old woman with a medical history of active smoking, emphysema and chronic obstructive pulmonary disease presented to the emergency department with a cough, dyspnoea, fever and asthenia. Chest CT showed peripheral predominant intralobular reticulations in the lower left lobe (black arrowheads) with a single area of non-rounded GGO (white arrow). The probability for RT-PCR + was 57.9%. In the three cases, the RT-PCR was indeed positive.

Misclassifications with the best model

Regarding the outliers of the model with the highest AUC (i.e., clinical-radiological step-LR), 3 out of the 32 patients (9.4%) with a negative RT-PCR in the validation cohort were classified positively by the model. Of these 3 patients, 2 were highly suspicious of COVID-19 and 1 was indeterminate according to the OCTRs. Conversely, 7 out of the 39 patients (17.9%) with a positive RT-PCR in the validation cohort were classified negatively by the model. Of these 7 patients, 4 had conclusions of (1), (2) or (3) according to the OCTRs.
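The misclassification counts above can be cross-checked against the reported performance of the clinical-radiological Step-LR model with simple arithmetic. The short Python sketch below is an illustration that uses only the counts given in this paragraph (3 false positives among 32 RT-PCR-negative patients and 7 false negatives among 39 RT-PCR-positive patients); the variable names are arbitrary.

```python
# Counts reported for the validation patients with available clinical data.
n_negative, false_positives = 32, 3
n_positive, false_negatives = 39, 7

true_negatives = n_negative - false_positives   # 29
true_positives = n_positive - false_negatives   # 32
n_total = n_negative + n_positive               # 71

sensitivity = true_positives / n_positive                  # 32/39
specificity = true_negatives / n_negative                  # 29/32
accuracy = (true_positives + true_negatives) / n_total     # 61/71

print(f"sensitivity = {sensitivity:.2f}")  # sensitivity = 0.82
print(f"specificity = {specificity:.2f}")  # specificity = 0.91
print(f"accuracy    = {accuracy:.2f}")     # accuracy    = 0.86
```

These values agree with the sensitivity (0.82), specificity (0.91) and validation accuracy (0.86) reported for this model, and the total of 71 patients matches the number of validation patients with available clinical data.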

Clinical usefulness and decision curve analysis of the final models in the validation cohort

The DCAs showed that the OCTRs’ conclusion and the final models added more benefit than the ‘treat all’ approach above a risk threshold of approximately 0.05 (Fig. 6). The two final models added more net benefit than the ‘treat all’ strategy and the OCTRs’ conclusion for threshold probabilities above 0.43.

Figure 6. Decision curve analysis (DCA) of the final models and conclusions of the on-call teleradiologists (OCTRs) in the validation cohort, depending on data on which they were trained: (a) radiological and (b) clinical + radiological. The x-axis corresponds to the odds or risk threshold at which a patient would opt for COVID-19 management. The y-axis corresponds to the overall net benefit. The black line represents the ‘treat all’ patients COVID-19 strategy. The grey horizontal line represents the ‘treat none’ of the patients strategy. For both sources of data, the added value of the models over the ‘treat all’ strategy and the OCTRs’ readings is highlighted for risk thresholds above 0.43 (arrows). CART classification and regression tree, Step-LR stepwise logistic regression.

Discussion

In this study, we developed practical and ready-to-use models to predict the RT-PCR status from categorical clinical and radiological variables that are routinely available or assessable by emergency physicians and radiologists without either expertise in thoracic imaging or computer science or additional blood samples. We purposely elaborated parsimonious models through a cautious variable selection including univariate filtering, assessment of correlations and stepwise selection. Our best models displayed high AUCs (> 0.90) and accuracy (> 0.85) on the external validation cohort, though they were not statistically different from those of OCTRs. Thus, these models could be helpful notably to weight indeterminate conclusions or to manage patients with a strong COVID-19 suspicion and negative (or lack of) RT-PCR3.

Our series represents a real-life multi-centric emergency population during the COVID-19 pandemic with well-balanced proportions of negative and positive RT-PCR results, making it appropriate for the development of predictive models. The clinical and radiological variables correlating with the RT-PCR status in the univariate analysis were consistent with the literature, namely, fever, asthenia, oxygen saturation, GGO, non-rounded consolidation, fibrotic bands, and intralobular reticulations with bilateral, diffuse, basal predominant and peripheral distributions27,28,29.

The aim of our models was to provide rapid assistance to non-specialized radiologists who may need support to conclude or modulate their report confidently without delaying patient management. We purposely chose classification algorithms that are easily explainable and do not require additional computing time, i.e., a binary logistic regression and a classification tree. These two algorithms are often used for benchmarking purposes in machine learning before using more complex models. In preliminary exploratory analyses, we actually tested other algorithms such as random forests or categorical boosted trees, which indeed showed slightly higher AUCs but could not be visually represented and explained to radiologists. Interestingly, the similar performances of our models in the training and validation cohorts suggest a lack of overfitting and good generalizability to new patients.

Improving COVID-19 diagnosis with deep learning frameworks has already been attempted with good results, but without remarkable added value compared with the diagnostic performances of traditional radiological assessment, OCTRs or our models as calculated in the independent validation set. Indeed, previous studies have found that the sensitivity, specificity and accuracy of radiologists on chest CT were 0.97, 0.56 and 0.72, respectively30. Using a quantitative assessment of the main COVID-19 radiological features (through volumes of GGO and fibrotic alterations) did not increase sensitivity and specificity (0.68 and 0.59 with GGO, respectively, and 0.86 and 0.44 with fibrotic alterations, respectively)31. The AUCs of these deep learning models mostly ranged between 0.70 and 0.95, while sensitivity and specificity, when given, ranged between 0.84 and 1, and 0.25 and 0.96, respectively15,16,17,18,19,20.

Other studies proposed practical scores such as the PSC-19, which relies on four variables (history of exposure, leukocyte count, peripheral lesion and crazy paving patterns), with AUC = 0.91, sensitivity = 0.88 and higher specificity of 0.9222. However, this score requires a blood sample and could be of limited use when investigating a patient in a new COVID-19 cluster of patients without proven exposure. Chen et al. also combined an explainable machine learning algorithm (i.e., penalized logistic regression) and bio-clinical-radiological variables21. They built three models: bio-clinical alone, radiological alone and bio-clinical-radiological models. Surprisingly, the results of the final models in the validation cohort showed the highest performances when radiological variables were not taken into account (AUCs = 0.97, 0.81 and 0.94, respectively). Ridge and/or LASSO penalized logistic regressions were also investigated in our preliminary data exploration but did not show added value over Step-LR. While deep learning studies trained their models in large cohorts of hundreds of patients, it should be noted that these two practical studies did not exceed one hundred patients, questioning their validity. In addition, the performances of standardized radiological conclusions were missing in all these studies involving artificial intelligence. It should be noted that alternative measures could have been used to evaluate and compare classification models, instead of the AUC. We purposely chose the AUC because it was the most frequently used estimator in similar prior studies and it is suited to a study population that is well balanced regarding the outcome.

Our results also highlight the very good performances of radiologists in daily practice, which can be explained by the considerable increase in knowledge of the COVID-19 radiological presentations since the first peer-reviewed studies in January–February 2020, attained through open-source educational publications, webinars and recommendations issued by national and international radiological societies, which were immediately relayed to the teleradiologists working at IMADIS. Indeed, comparisons of AUCs between best models and OCTRs’ conclusions did not reach significance, which could have been due to lack of power. Additionally, OCTRs could ask for their colleagues’ advice when facing a complicated case, as they were never alone in one of our two emergency interpretation centres during on-call duty. Therefore, though our predictive models showed comparable performances with other machine- and deep-learning models, and slightly higher performances than OCTRs’ conclusions, the gain was not striking, leading to no significant difference according to the DeLong test. Few studies have focused on the specificity of teleradiologists compared with conventional radiologists. It should be noted that the organization of teleradiology can vary greatly from one group to another. IMADIS has the particularity of gathering teams of teleradiologists in interpretation centers, with the constant ability to interact with colleagues and solicit help. Banaste et al. have demonstrated that the IMADIS organization makes it possible to carry out systematic second readings of whole-body CT scans in multiple-trauma patients, with good diagnostic performances of OCTRs whatever the amount of activity and the time of day32. Regarding the OCTRs’ accuracy for diagnosing COVID-19, Nivet et al. have also shown that implementing the semi-quantitative assessment as proposed by SFR was feasible and provided high sensitivity (0.92), specificity (0.75–0.84), PPV (0.77–0.84) and NPV (0.91–0.92) depending on the reading and when considering the indeterminate/compatible group as positive12.

To illustrate potential clinical applications of the final models, we used DCAs, which are a popular alternative to cost-effectiveness studies [24]. In both settings (radiological data alone and clinical-radiological data), the DCAs of the OCTRs’ conclusions and of the final models had similar shapes. Interestingly, at worst, the net benefits of the models were equivalent to those of the OCTRs (at intermediate risk thresholds) and to those of the ‘treat all’ strategy (at very low risk thresholds). However, the machine learning models would be complementary to the OCTRs’ conclusions and would improve the net benefit for patients at intermediate to high risk thresholds. In practice, we believe that our models could be useful for patients with (i) high suspicion but an unavailable RT-PCR status or a negative first RT-PCR, and (ii) an indeterminate OCTR conclusion. A high probability according to our models would lead to isolating the patient and repeating the RT-PCR.
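For readers unfamiliar with DCA, the net benefit at a risk threshold p_t is usually defined as TP/n − (FP/n) × p_t/(1 − p_t), where a patient is counted as positive when the predicted probability is at least p_t. The short Python sketch below applies this standard definition to a hypothetical toy sample; the function names and the data are illustrative assumptions, not our cohort or our analysis code.

```python
def net_benefit(y_true, y_prob, threshold):
    """Net benefit of acting on patients whose predicted probability is >= threshold."""
    if not 0.0 < threshold < 1.0:
        raise ValueError("threshold must lie strictly between 0 and 1")
    n = len(y_true)
    tp = sum(1 for y, p in zip(y_true, y_prob) if p >= threshold and y == 1)
    fp = sum(1 for y, p in zip(y_true, y_prob) if p >= threshold and y == 0)
    # Odds of the threshold: the relative harm of a false positive
    weight = threshold / (1.0 - threshold)
    return tp / n - (fp / n) * weight


def net_benefit_treat_all(y_true, threshold):
    """Net benefit of the 'treat all' strategy (every patient considered positive)."""
    return net_benefit(y_true, [1.0] * len(y_true), threshold)


# Hypothetical toy sample: 1 = positive RT-PCR, with hypothetical model probabilities
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 1, 0]
y_prob = [0.9, 0.8, 0.7, 0.35, 0.2, 0.1, 0.6, 0.05, 0.55, 0.3]

for pt in (0.1, 0.3, 0.5):
    model_nb = net_benefit(y_true, y_prob, pt)
    all_nb = net_benefit_treat_all(y_true, pt)
    print(f"threshold={pt:.1f}  model={model_nb:.3f}  treat all={all_nb:.3f}")
```

The ‘treat none’ strategy has a net benefit of zero at every threshold, so a model is worth using at a given threshold only if its net benefit exceeds both zero and the ‘treat all’ value.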

The performance measurements of our models should also be interpreted in light of the disease prevalence during our inclusion period (≈ 48%) and the nature of the study population [33]. Indeed, although PPV and NPV are useful for rapidly sorting patients suspected of having COVID-19, they depend on this prevalence, which fluctuates with the country and public health measures.
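This dependence follows directly from Bayes’ theorem and can be illustrated with a minimal Python sketch. The sensitivity (0.82) and specificity (0.91) are those reported for the clinical-radiological Step-LR model and 0.476 is the prevalence observed in our cohort; the two lower prevalence values and the function name `predictive_values` are hypothetical and serve only as an illustration.

```python
def predictive_values(sensitivity, specificity, prevalence):
    """Return (PPV, NPV) for a binary test, derived from Bayes' theorem."""
    tp = sensitivity * prevalence                  # true-positive fraction
    fp = (1.0 - specificity) * (1.0 - prevalence)  # false-positive fraction
    tn = specificity * (1.0 - prevalence)          # true-negative fraction
    fn = (1.0 - sensitivity) * prevalence          # false-negative fraction
    return tp / (tp + fp), tn / (tn + fn)


# Values reported for the clinical-radiological Step-LR model
SENS, SPEC = 0.82, 0.91

# 0.476 is the cohort prevalence; 0.05 and 0.20 are hypothetical scenarios
for prev in (0.05, 0.20, 0.476):
    ppv, npv = predictive_values(SENS, SPEC, prev)
    print(f"prevalence={prev:.3f}  PPV={ppv:.2f}  NPV={npv:.2f}")
```

With sensitivity and specificity held fixed, the PPV falls and the NPV rises as the prevalence decreases, which is why predictive values measured during an epidemic peak should not be transposed directly to a low-prevalence period.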

Our study has certain limitations. First, the RT-PCR is an imperfect gold standard, which may explain why no study has ever reached perfect performance. Indeed, the analysis of the outliers of the best model in the validation cohort with fully available clinical-radiological variables (n = 10, 3 false positives and 7 false negatives) demonstrated that 6 of these patients either could have undergone their chest CT before the disease manifestation or were false negatives by RT-PCR. This finding stresses the risk of false negatives with chest CT due to the delay between the onset of clinical symptoms and the appearance of the COVID-19 semiology on chest CT. Second, approximately 25–33% of clinical data were missing because they were collected in real-life emergency situations. Third, some radiological features were not evaluated because they were published after the beginning of the COVID-19 IMADIS workflow (for instance, multifocality and thickened vessels). Fourth, a history of exposure was rarely collected in our cohort although it could have been an important predictor. Fifth, biological markers were not available because they were rarely measured before the chest CT was requested. Sixth, although the OCTRs’ conclusions were prospectively collected, the radiological features that were necessary to build the predictive models were not collected in emergency situations, which could have led to an overestimation of the associations of these features with the RT-PCR status. Seventh, it is worth noting that our study did not propose specific models to differentiate non-COVID-19 infectious lung diseases from COVID-19. Though this is an important corollary question, it was not our primary aim. Indeed, our population represents a real-life emergency cohort and some patients could have had non-infectious diseases (conclusion (2)) or non-pulmonary infections, for instance (conclusion (1)), in addition to non-COVID-19 infectious lung diseases (conclusion (3)). Finally, although widely used and significantly reproducible across multiple raters, the radiological features were qualitatively or semi-quantitatively assessed, which introduces a risk of subjectivity and could bias the performances of the models in other validation cohorts [12].

To conclude, we presented one of the largest French multicentric emergency cohorts, including prospective standardized reports that followed national recommendations. Our findings illustrate the high diagnostic performances of the OCTRs working in a teleradiological structure entirely dedicated to emergency imaging, which promotes continuous training and collaborative work. This setting enabled us to propose free and practical models built on easily available clinical and radiological data provided by emergency physicians and OCTRs. These models provide a probability of a positive RT-PCR, which general radiologists could use to conclude their reports confidently in daily practice when the radiological conclusion is indeterminate or when access to RT-PCR is absent or limited.

Data availability

The datasets analyzed during the current study will be made publicly available (they are being submitted to the Springer Nature Database). Details regarding the statistical analysis can be made available from the corresponding author on reasonable request.

Abbreviations

- 95%CI: 95% confidence interval
- AUC: Area under the receiver operating characteristic curve
- CART: Classification and regression tree
- COVID-19: Coronavirus disease 2019
- CT: Computed tomography
- DCA: Decision curve analysis
- GGO: Ground-glass opacities
- NPV: Negative predictive value
- OCTR: On-call teleradiologist
- PPV: Positive predictive value
- RT-PCR: Real-time reverse transcription polymerase chain reaction
- SARS-CoV-2: Severe acute respiratory syndrome coronavirus 2
- SFR: French society of radiology
- Step-LR: Stepwise binary logistic regression

References

1. Zhu, N. et al. A novel coronavirus from patients with pneumonia in China, 2019. N. Engl. J. Med. https://doi.org/10.1056/NEJMoa2001017 (2020).

2. Johns Hopkins University. CSSE Coronavirus COVID-19 Global Cases (dashboard). https://gisanddata.maps.arcgis.com/apps/opsdashboard/index.html#/bda7594740fd40299423467b48e9ecf6 (2021).

3. Ai, T. et al. Correlation of chest CT and RT-PCR testing for coronavirus disease 2019 (COVID-19) in China: A report of 1014 cases. Radiology https://doi.org/10.1148/radiol.2020200642 (2020).

4. Fang, Y. et al. Sensitivity of chest CT for COVID-19: Comparison to RT-PCR. Radiology https://doi.org/10.1148/radiol.2020200432 (2020).

5. Yang, S. et al. Clinical and CT features of early stage patients with COVID-19: A retrospective analysis of imported cases in Shanghai, China. Eur. Respir. J. https://doi.org/10.1183/13993003.00407-2020 (2020).

6. Kanne, J. P., Little, B. P., Chung, J. H., Elicker, B. M. & Ketai, L. H. Essentials for radiologists on COVID-19: An update-radiology scientific expert panel. Radiology https://doi.org/10.1148/radiol.2020200527 (2020).

7. Centers for Disease Control and Prevention. Interim Guidelines for Collecting, Handling, and Testing Clinical Specimens from Persons Under Investigation (PUIs) for Coronavirus Disease 2019 (COVID-19). https://www.cdc.gov/coronavirus/2019-ncov/lab/guidelines-clinical-specimens.htlm (2020).

8. Fang, X., Li, X., Bian, Y., Ji, X. & Lu, J. Radiomics nomogram for the prediction of 2019 novel coronavirus pneumonia caused by SARS-CoV-2. Eur. Radiol. https://doi.org/10.1007/s00330-020-07032-z (2020).

9. Prokop, M. et al. CO-RADS: A categorical CT assessment scheme for patients suspected of having COVID-19: Definition and evaluation. Radiology https://doi.org/10.1148/radiol.2020201473 (2020).

10. De Smet, K. et al. Diagnostic performance of chest CT for SARS-CoV-2 infection in individuals with or without COVID-19 symptoms. Radiology https://doi.org/10.1148/radiol.2020202708 (2021).

11. Fujioka, T. et al. Evaluation of the usefulness of CO-RADS for chest CT in patients suspected of having COVID-19. Diagnostics (Basel) https://doi.org/10.3390/diagnostics10090608 (2020).

12. Nivet, H. et al. The accuracy of teleradiologists in diagnosing COVID-19 based on a French multicentric emergency cohort. Eur. Radiol. https://doi.org/10.1007/s00330-020-07345-z (2020).

13. Brun, A.-L. et al. COVID-19 pneumonia: high diagnostic accuracy of chest CT in patients with intermediate clinical probability. Eur. Radiol. https://doi.org/10.1007/s00330-020-07346-y (2020).

14. Ducray, V. et al. Chest CT for rapid triage of patients in multiple emergency departments during COVID-19 epidemic: Experience report from a large French university hospital. Eur. Radiol. https://doi.org/10.1007/s00330-020-07154-4 (2020).

15. Harmon, S. A. et al. Artificial intelligence for the detection of COVID-19 pneumonia on chest CT using multinational datasets. Nat. Commun. https://doi.org/10.1038/s41467-020-17971-2 (2020).

16. Lessmann, N. et al. Data system and chest CT severity scores in patients suspected of having COVID-19 using artificial intelligence. Radiology https://doi.org/10.1148/radiol.2020202439 (2021).

17. Bai, H. X. et al. AI Augmentation of radiologist performance in distinguishing COVID-19 from pneumonia of other etiology on chest CT. Radiology https://doi.org/10.1148/radiol.2020201491 (2020).

18. Ni, Q. et al. A deep learning approach to characterize 2019 coronavirus disease (COVID-19) pneumonia in chest CT images. Eur. Radiol. https://doi.org/10.1007/s00330-020-07044-9 (2020).

19. Li, L. et al. Using artificial intelligence to detect COVID-19 and community-acquired pneumonia based on pulmonary CT: Evaluation of the diagnostic accuracy. Radiology https://doi.org/10.1148/radiol.2020200905 (2020).

20. Pu, J. et al. Any unique image biomarkers associated with COVID-19?. Eur. Radiol. https://doi.org/10.1007/s00330-020-06956-w (2020).

21. Chen, X. et al. A diagnostic model for coronavirus disease 2019 (COVID-19) based on radiological semantic and clinical features: A multi-center study. Eur. Radiol. https://doi.org/10.1007/s00330-020-06829-2 (2020).

22. Qin, L. et al. A predictive model and scoring system combining clinical and CT characteristics for the diagnosis of COVID-19. Eur. Radiol. https://doi.org/10.1007/s00330-020-07022-1 (2020).

23. Société Française de Radiologie. Qualité et Sécurité des Actes de Téléimagerie: Guide de Bonnes Pratiques. http://www.sfrnet.org/sfr/professionnels/2-infos-professionnelles/05-teleradiologie/index.phtml (2020).

24. Vickers, A. J. & Elkin, E. B. Decision curve analysis: A novel method for evaluating prediction models. Med. Decis. Mak. https://doi.org/10.1177/0272989X06295361 (2006).

25. Pauker, S. G. & Kassirer, J. P. Therapeutic decision making: A cost-benefit analysis. N. Engl. J. Med. https://doi.org/10.1056/NEJM197507312930505 (1975).

26. Vickers, A. J., van Calster, B. & Steyerberg, E. W. A simple, step-by-step guide to interpreting decision curve analysis. Diagn. Progn. Res. https://doi.org/10.1186/s41512-019-0064-7 (2019).

27. Wang, D. et al. Clinical characteristics of 138 hospitalized patients with 2019 novel coronavirus-infected pneumonia in Wuhan, China. JAMA https://doi.org/10.1001/jama.2020.1585 (2020).

28. Huang, Y., Cheng, W., Zhao, N., Qu, H. & Tian, J. CT screening for early diagnosis of SARS-CoV-2 infection. Lancet Infect. Dis. https://doi.org/10.1016/S1473-3099(20)30241-3 (2020).

29. Huang, C. et al. Clinical features of patients infected with 2019 novel coronavirus in Wuhan, China. Lancet https://doi.org/10.1016/S0140-6736(20)30183-5 (2020).

30. Caruso, D. et al. Chest CT Features of COVID-19 in Rome, Italy. Radiology https://doi.org/10.1148/radiol.2020201237 (2020).

31. Caruso, D. et al. Quantitative Chest CT analysis in discriminating COVID-19 from non-COVID-19 patients. Radiol. Med. https://doi.org/10.1007/s11547-020-01291-y (2021).

32. Banaste, N. et al. Whole-body CT in patients with multiple traumas: Factors leading to missed injury. Radiology https://doi.org/10.1148/radiol.2018180492 (2018).

33. Eng, J. & Bluemke, D. A. Imaging publications in the COVID-19 Pandemic: Applying new research results to clinical practice. Radiology https://doi.org/10.1148/radiol.2020201724 (2020).

Acknowledgements

We would like to sincerely thank all our partner centers and their collaborators involved in this project (CH AGEN, CH AVIGNON, CH BRIANCON, CH CARCASONNE, CH CHALON, CH CHAMBERY, CH COMPIEGNE, CH LE CREUSOT, CH LOURDES, CH MACON, CH NOYON, CH PARAY-LE-MONIAL, CH ROANNE, CH ST JULIEN-EN-GENEVOIS, CH ST JOSEPH ST LUC), and especially Drs John REFAIT, Sylvie ASSADOURIAN, Julie DONETTE, Soraya ZAID, Michèle CERUTI, Bernard BRU, Judith KARSENTY, Sandrine MERCIER, Quentin METTE, Arnaud VERMEERE-MERLEN, Pascal CAPDEPON, Mahmoud KAAKI, Xavier COURTOIS, and Mrs Joëlle CAUCHETIEZ. We also thank the support teams at Imadis Teleradiology (Audrey PARAS, Lucie VAUTHIER, Anne LE GUELLEC and Tiffany DESMURS) and the DeepLink Medical team (Hugues LAJOIE, Marine CLOUX and Marie MOLLARD).

Author information

Contributions

All authors reviewed the manuscript and approved it. P.S., H.N. and N.B. participated in the conception of the work, creation of the database, interpretation of images and manuscript writing. A.Cr. participated in the creation of the database, interpretation of images, data analysis, development of algorithms and manuscript writing. A.B. participated in the creation of the database. L.P., N.F., A.Ch., F.A.L., E.Y., A.B.C., J.B., B.P., F.P., G.B., C.M., F.B., J.F.B. and V.T. participated in the interpretation of images. G.G. participated in the conception of the work, manuscript writing and supervision of the work.

Ethics declarations

Competing interests

LP, NF, ACh, EY, ABC, JB, BP, CM, JFB, VT, and GG have shares in DeepLink Medical. AB is an employee of DeepLink Medical. The other authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Schuster, P., Crombé, A., Nivet, H. et al. Practical clinical and radiological models to diagnose COVID-19 based on a multicentric teleradiological emergency chest CT cohort. Sci Rep 11, 8994 (2021). https://doi.org/10.1038/s41598-021-88053-6

DOI: https://doi.org/10.1038/s41598-021-88053-6
